Jan 19, 2018

Technology

Steps

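Create and start the container, log in to it over SSH (host port 49160 is mapped to the container's port 22), then switch to the oracle user: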

# sudo docker create --name oraclexe11g01 -p 49160:22 -p 49161:1521 wnameless/oracle-xe-11g

# sudo docker start oraclexe11g01

# su - oracle

Inside the container, do the following:

oracle@c7edb0017fa8:~$ scp xxxxx@172.17.0.1:/media/sda5/fresh_install.tar.gz .

xxxxx@172.17.0.1's password:

fresh_install.tar.gz 100% 135KB 135.2KB/s 00:00

oracle@c7edb0017fa8:~$ tar xzvf fresh_install.tar.gz

oracle@c7edb0017fa8:~$ cd fresh_install

oracle@c7edb0017fa8:~/fresh_install$ ls

1_create_user_and_tablespace.sql 2_xxxxxxxx_ddl.sql 3_xxxxxxxx_data.sql 4_xxxxxx.sql

Now create the user and the tablespace:

oracle@c7edb0017fa8:~/fresh_install$ export NLS_LANG="SIMPLIFIED CHINESE_CHINA.AL32UTF8"

oracle@c7edb0017fa8:~/fresh_install$ cd $ORACLE_HOME/bin

oracle@c7edb0017fa8:~/product/11.2.0/xe/bin$ ./sqlplus / as sysdba

SQL*Plus: Release 11.2.0.2.0 Production on Fri Jan 19 07:53:32 2018

Copyright (c) 1982, 2011, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Express Edition Release 11.2.0.2.0 - 64bit Production

SQL> @/u01/app/oracle/fresh_install/1_create_user_and_tablespace.sql

SP2-0734: unknown command beginning "--查询表..." - rest of line ignored.

Tablespace created.

User created.

Grant succeeded.

SQL> exit

Disconnected from Oracle Database 11g Express Edition Release 11.2.0.2.0 - 64bit Production

Now log in as the new user to create the schema objects and load the data:

oracle@c7edb0017fa8:~/product/11.2.0/xe/bin$ ./sqlplus xxxxxxxx/xxxxxxxx

SQL*Plus: Release 11.2.0.2.0 Production on Fri Jan 19 08:02:13 2018

Copyright (c) 1982, 2011, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Express Edition Release 11.2.0.2.0 - 64bit Production

SQL> @/u01/app/oracle/fresh_install/2_xxxxxxxx_ddl.sql

Commit complete.

SQL> @/u01/app/oracle/fresh_install/3_xxxxxxxx_data.sql

SQL> @/u01/app/oracle/fresh_install/4_goegouwoguwog.sql

SQL> exit

Disconnected from Oracle Database 11g Express Edition Release 11.2.0.2.0 - 64bit Production

oracle@c7edb0017fa8:~/product/11.2.0/xe/bin$ exit

logout

root@c7edb0017fa8:~/fresh_install# exit

logout

Connection to localhost closed.
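The SQL scripts are meant to run in the order of their numeric prefixes, with script 1 run as SYSDBA and the rest as the new user. A minimal sketch that builds those sqlplus invocations, assuming Python 3 is available where sqlplus runs; order_scripts and sqlplus_commands are hypothetical helpers, and the user/password argument stands in for the elided credentials:

```python
import os
import re
import sys


def order_scripts(names):
    """Keep only N_*.sql names and sort them by the number N, so that
    10_x.sql comes after 2_x.sql (a plain string sort would not)."""
    numbered = []
    for name in names:
        match = re.match(r"(\d+)_.*\.sql$", name)
        if match:
            numbered.append((int(match.group(1)), name))
    return sorted(numbered)


def sqlplus_commands(directory, user_logon):
    """One sqlplus argv per script: script 1 (user and tablespace) runs
    as SYSDBA, every later script runs as the application user."""
    commands = []
    for number, name in order_scripts(os.listdir(directory)):
        logon = "/ as sysdba" if number == 1 else user_logon
        commands.append(["sqlplus", "-S", logon, "@" + os.path.join(directory, name)])
    return commands


if __name__ == "__main__" and len(sys.argv) == 3:
    # e.g. python plan.py /u01/app/oracle/fresh_install user/password
    for argv in sqlplus_commands(sys.argv[1], sys.argv[2]):
        print(" ".join(argv))
```

sqlplus stays at the SQL> prompt after a script unless the script itself ends with EXIT, so one way to run these unattended is subprocess.run(argv, input="exit\n", universal_newlines=True), with NLS_LANG exported in the environment as above.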

Commit the container to an image and save the image to a tar file:

$ sudo docker commit c7edb0017fa8 mxxxxxxx

$ sudo docker save mxxxxxxx>mxxxxx.tar

You now have an initialized Oracle DB image.

Jan 19, 2018

Technology

How can the contents of an online charts repository be made available locally in an offline environment?

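Take gitlab from Google's charts repository as an example. The original repository is at:

https://github.com/kubernetes/charts

The gitlab-ce entry is at:

https://github.com/kubernetes/charts/tree/master/stable/gitlab-ce

Download the repository's index file:

# wget https://kubernetes-charts.storage.googleapis.com/index.yaml

index.yaml is over 900 KB and contains every entry in the k8s charts repository that Google provides. Since we only need the gitlab-ce entry, delete all of the other entries: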

apiVersion: v1
entries:
  gitlab-ce:
  - created: 2017-11-14T00:04:13.262486613Z
    ......
    version: 0.1.0
generated: 2018-01-18T18:49:08.04868721Z
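Deleting the other entries by hand in a 900 KB file is error-prone. A minimal sketch, assuming index.yaml has already been parsed into a Python dict (for example with the third-party PyYAML package's yaml.safe_load) and is written back afterwards with yaml.safe_dump; keep_entries is a hypothetical helper:

```python
import copy


def keep_entries(index, wanted):
    """Return a copy of a parsed Helm repository index that keeps only
    the charts named in `wanted`; apiVersion, generated and any other
    top-level keys are left untouched."""
    trimmed = copy.deepcopy(index)
    trimmed["entries"] = {
        name: versions
        for name, versions in trimmed.get("entries", {}).items()
        if name in wanted
    }
    return trimmed
```

keep_entries(index, {"gitlab-ce"}) returns the trimmed index; because it works on a deep copy, the original dict is not modified.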

The file lists the tgz archives and the icon file we need; download them manually:

# wget https://kubernetes-charts.storage.googleapis.com/gitlab-ce-0.2.1.tgz

# wget https://kubernetes-charts.storage.googleapis.com/gitlab-ce-0.2.0.tgz

# wget https://kubernetes-charts.storage.googleapis.com/gitlab-ce-0.1.12.tgz

# wget https://kubernetes-charts.storage.googleapis.com/gitlab-ce-0.1.11.tgz

# wget https://kubernetes-charts.storage.googleapis.com/gitlab-ce-0.1.10.tgz

# wget https://kubernetes-charts.storage.googleapis.com/gitlab-ce-0.1.9.tgz

# wget https://kubernetes-charts.storage.googleapis.com/gitlab-ce-0.1.8.tgz

# wget https://kubernetes-charts.storage.googleapis.com/gitlab-ce-0.1.7.tgz

# wget https://kubernetes-charts.storage.googleapis.com/gitlab-ce-0.1.6.tgz

# wget https://kubernetes-charts.storage.googleapis.com/gitlab-ce-0.1.5.tgz

# wget https://kubernetes-charts.storage.googleapis.com/gitlab-ce-0.1.4.tgz

# wget https://kubernetes-charts.storage.googleapis.com/gitlab-ce-0.1.3.tgz

# wget https://kubernetes-charts.storage.googleapis.com/gitlab-ce-0.1.2.tgz

# wget https://kubernetes-charts.storage.googleapis.com/gitlab-ce-0.1.1.tgz

# wget https://kubernetes-charts.storage.googleapis.com/gitlab-ce-0.1.0.tgz

# wget https://gitlab.com/uploads/group/avatar/6543/gitlab-logo-square.png
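Instead of typing one wget per version, the archive and icon URLs can be read from the trimmed index, assuming (as in Helm indexes) that each version entry lists its archives under urls and its icon under icon. A sketch under that assumption; download_urls and fetch_all are hypothetical helpers, and fetch_all needs network access, so it belongs on the online machine:

```python
import os
import urllib.request


def download_urls(index, chart):
    """Every archive URL of `chart` plus its icon, without duplicates,
    in the order they appear in the parsed index."""
    urls = []
    for version in index["entries"].get(chart, []):
        for url in (version.get("urls") or []) + [version.get("icon")]:
            if url and url not in urls:
                urls.append(url)
    return urls


def fetch_all(urls, target_dir="."):
    """Download each URL into target_dir, named after its last path segment."""
    for url in urls:
        path = os.path.join(target_dir, url.rsplit("/", 1)[-1])
        urllib.request.urlretrieve(url, path)
```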

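Then manually extract all of the tgz files, pull the images they reference, save them as offline files, and load them onto the worker nodes.

TODO: This step should be improved: set up a local registry, push the images to it, and change the image names in the charts to point to the local registry.

Finally, change index.yaml so that it points to the tgz files and the icon file served from your local web server.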
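Pointing index.yaml at the local web server amounts to swapping the host part of every archive and icon URL. A sketch, assuming the index is parsed into a dict and that all files are served flat from one directory; localize is a hypothetical helper and http://charts.example.local is a placeholder base URL:

```python
import copy


def localize(index, base_url):
    """Point every archive URL and icon in a parsed Helm index at
    base_url, keeping only the file name of each original URL."""
    local = copy.deepcopy(index)
    base = base_url.rstrip("/")
    for versions in local.get("entries", {}).values():
        for version in versions:
            version["urls"] = [
                base + "/" + url.rsplit("/", 1)[-1]
                for url in version.get("urls") or []
            ]
            if version.get("icon"):
                version["icon"] = base + "/" + version["icon"].rsplit("/", 1)[-1]
    return local
```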

Jan 18, 2018

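Technology

Change the tgz files

Use the following script, which unpacks each wordpress chart archive, switches its serviceType from LoadBalancer to ClusterIP, and repackages it: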

for i in *.tgz; do
    echo "$i"
    tar xzvf "$i"
    mv "$i" "$i.bak"
    sed -i 's|serviceType: LoadBalancer|serviceType: ClusterIP|g' wordpress/values.yaml
    helm package wordpress
    rm -rf wordpress
done

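Then copy all of the repackaged tgz files to a new location.

Get the sha256sum values

Compute the SHA-256 checksum of each package:

sha256sum * | tac | awk '{print $1}' > sha256sum.txt

Manually reorder the lines so they match the order of the entries in index.yaml, then prefix each one with "digest: " to form a file like the following: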

digest: 6dbf2c9394e216e691116ea5135cd5c83b55ce1bd199f23ab40ec6a8c426a7ea

digest: a017ad98dc9f727ea6c422be74f077557b8af35470033b61a7c7c11d6b2a1194

digest: fad50a4f5dcae6ce8af05634cb14fadb19b2018fe30521669d0e19a68192d2ec

digest: ae45690c85c733bd66db97cd859c40174c88932ff8e944852afe762dc62c47d0

digest: 5528dfda0b68cf7f00eecab4b56933e6935a01d680989f6e690a281492efce64

digest: 071f4af604b580c13ec1b53bf5ebf898d3d198bbecd616d309d71d94d7635b25

digest: 12de12908f3c8b61a1f68ea529a06ad402903bd4dea458cf335bf8e9dda3f290

.......
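The manual reordering is needed because sha256sum prints files in name order, while index.yaml lists versions newest first. Keying each digest by file name removes the dependence on order. A sketch using Python's hashlib, which yields the same hex digest as sha256sum; digests_by_name is a hypothetical helper:

```python
import hashlib
import os


def sha256_of(path, chunk_size=1 << 16):
    """SHA-256 hex digest of a file, read in chunks (what sha256sum prints)."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def digests_by_name(directory):
    """Map each .tgz file name in `directory` to its SHA-256 digest."""
    return {
        name: sha256_of(os.path.join(directory, name))
        for name in sorted(os.listdir(directory))
        if name.endswith(".tgz")
    }
```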

Get the digests from index.yaml

Use the following commands:

# grep digest index.yaml > kkkk.txt

# cat kkkk.txt| more

digest: b33a1646e90963a387406f4857f312476b4615222a36e354f7b9e62a2971ce9e

digest: d54b28981ce97485a59d78acd43182e6d275efd451245c3420129d3cf2b1bfb5

digest: 88337f823951e048118e44dc7b7e1e6508dcac138612edff13bc63a3ec90a997

digest: 07f2323bc6559c973bd4f8deb3b5c83acb99c5aac93d2135b4f1690bf9a3be85

digest: 26d71a30ee0f845ff45854d96a69f18ded56038c473f13dcc43a572a7e9cd92d

Replace all of the digests

The following Python script (replace.py) pairs the two digest files line by line and substitutes them in index.yaml:

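# replace.py: replace line i of originsha256sum.txt with line i of
# modifiedsha256sum.txt wherever it occurs in index.yaml.
# Blank lines are skipped: an empty key would match every line.
with open('originsha256sum.txt') as f:
    findlines = [s.strip() for s in f if s.strip()]
with open('modifiedsha256sum.txt') as f:
    replacelines = [s.strip() for s in f if s.strip()]

# zip() silently drops unmatched lines, so insist on equal lengths
if len(findlines) != len(replacelines):
    raise SystemExit('the two digest files have different line counts')

find_replace = dict(zip(findlines, replacelines))

with open('index.yaml') as data, open('newindex.yaml', 'w') as new_data:
    for line in data:
        for key in find_replace:
            if key in line:
                line = line.replace(key, find_replace[key])
        new_data.write(line)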

Run python2 replace.py to produce newindex.yaml. Use it to replace the original index.yaml on your web server, and replace all of the chart files there with the tgz files you generated.
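The replacement can also be done on the parsed index rather than by text substitution: each version entry's first URL ends in the archive's file name, which selects its new digest. A sketch, assuming index.yaml is loaded into a dict (for example with PyYAML's yaml.safe_load) and dumped again afterwards, and that new_digests maps archive file names to SHA-256 hex strings such as those printed by sha256sum; update_digests is a hypothetical helper:

```python
import copy


def update_digests(index, new_digests):
    """Return a copy of a parsed Helm index in which each version's
    `digest` is replaced by the digest of the archive its first URL
    names. Versions whose archive is not in `new_digests` keep their
    old digest."""
    updated = copy.deepcopy(index)
    for versions in updated.get("entries", {}).values():
        for version in versions:
            urls = version.get("urls") or []
            if urls:
                name = urls[0].rsplit("/", 1)[-1]
                if name in new_digests:
                    version["digest"] = new_digests[name]
    return updated
```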

Now when you deploy the apps, they are exposed through ClusterIP services instead of LoadBalancer services.

Jan 18, 2018

Technology

Purpose

While setting up Monocular, the values.yaml that defines the variables of its mongodb chart shows that the chart supports persistence, i.e. persistent storage:

## Enable persistence using Persistent Volume Claims
## ref: http://kubernetes.io/docs/user-guide/persistent-volumes/
##
persistence:
  enabled: true
  ## mongodb data Persistent Volume Storage Class
  ## If defined, storageClassName: <storageClass>
  ## If set to "-", storageClassName: "", which disables dynamic provisioning
  ## If undefined (the default) or set to null, no storageClassName spec is
  ##   set, choosing the default provisioner. (gp2 on AWS, standard on
  ##   GKE, AWS & OpenStack)
  ##
  # storageClass: "-"
  accessMode: ReadWriteOnce
  size: 8Gi

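When deployed, this chart requests an 8Gi persistent volume to store the mongodb data. The comments also name the default provisioners on some platforms that support persistent storage, such as AWS, GKE and OpenStack.

Problem

Installing this chart with helm succeeded on minikube, but on my self-built cluster it only succeeded with the following command: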

# helm install --name=tiger --set "persistence.enabled=false,mongodb.persistence.enabled=false,pullPolicy=IfNotPresent,api.image.pullPolicy=IfNotPresent,ui.image.pullPolicy=IfNotPresent,prerender.image.pullPolicy=IfNotPresent" monocular/monocular

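The command above disables persistent storage for mongodb and sets the image pull policies. With persistence disabled, the data in the container's storage does not survive once the container's lifecycle ends. Why does the chart deploy as-is on minikube but not in my own environment?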
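Each override in that command is a dotted key path into the chart's values, and --set takes them all as one comma-separated list. A small sketch that builds the same argument from a dict, which is easier to keep correct as overrides are added; helm_set_arg is a hypothetical helper, and values that themselves contain commas are not escaped here:

```python
def helm_set_arg(values):
    """Join flat key=value overrides into the comma-separated string that
    `helm install --set` expects. Booleans are written as true/false."""
    parts = []
    for key, value in values.items():
        if isinstance(value, bool):
            value = "true" if value else "false"
        parts.append("%s=%s" % (key, value))
    return ",".join(parts)


overrides = {
    "persistence.enabled": False,
    "mongodb.persistence.enabled": False,
    "pullPolicy": "IfNotPresent",
    "api.image.pullPolicy": "IfNotPresent",
    "ui.image.pullPolicy": "IfNotPresent",
    "prerender.image.pullPolicy": "IfNotPresent",
}

# argv for subprocess.run(); dict order is preserved on Python 3.7+
command = ["helm", "install", "--name=tiger", "--set", helm_set_arg(overrides), "monocular/monocular"]
```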

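Investigation

minikube

minikube uses its own hostpath implementation. The dashboard shows that minikube's default sc (StorageClass) actually maps to a directory under /mnt.

My own k8s environment

First, the dashboard shows no corresponding entry, and neither a PV nor a PVC has been created. There is no StorageClass either: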

[root@DashSSD dash]# kubectl get pvc

No resources found.

[root@DashSSD dash]# kubectl get pv

No resources found.

[root@DashSSD dash]# kubectl get storageclass

No resources found.

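Implementing hostpath

I found a solution from the community at:

https://github.com/nailgun/k8s-hostpath-provisioner

Offline installation procedure. On a machine with Internet access, clone the repository and save the images as tar files: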

# git clone https://github.com/nailgun/k8s-hostpath-provisioner

# sudo docker pull gcr.io/google_containers/busybox:1.24 && sudo docker save gcr.io/google_containers/busybox:1.24>busybox.tar

# sudo docker pull nailgun/k8s-hostpath-provisioner:latest && sudo docker save nailgun/k8s-hostpath-provisioner:latest>k8shostpath.tar

Copy the tar files and the cloned directory to the offline environment, then load the images first:

# docker load<k8shostpath.tar && docker load<busybox.tar

8f9199423bb0: Loading layer [==================================================>] 41.73 MB/41.73 MB

Loaded image: nailgun/k8s-hostpath-provisioner:latest

2c84284818d1: Loading layer [==================================================>] 1.312 MB/1.312 MB

5f70bf18a086: Loading layer [==================================================>] 1.024 kB/1.024 kB

Loaded image: gcr.io/google_containers/busybox:1.24

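The YAML files in the example directory need a few changes before they deploy correctly. First edit ds.yaml, which defines the DaemonSet, and set the image pull policy. Because the image is tagged :latest, Kubernetes defaults its pull policy to Always, which fails without network access:

containers:
- name: hostpath-provisioner
  image: nailgun/k8s-hostpath-provisioner:latest
  imagePullPolicy: IfNotPresent    # added

Next edit sc.yaml, which defines the StorageClass, and mark it as the default: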

metadata:
  name: local-ssd
  annotations:                                           # added
    storageclass.kubernetes.io/is-default-class: "true"  # added
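A PVC that sets no storageClassName is provisioned automatically only if the cluster has a default StorageClass, which is what this annotation marks; that is why the empty kubectl get storageclass output earlier matters. A sketch that lists the classes annotated as default in the output of kubectl get storageclass -o json, assuming the DefaultStorageClass admission plugin is enabled on the API server; the older beta annotation key is checked too, and default_storage_classes is a hypothetical helper:

```python
import json

DEFAULT_KEYS = (
    "storageclass.kubernetes.io/is-default-class",
    "storageclass.beta.kubernetes.io/is-default-class",  # older clusters
)


def default_storage_classes(kubectl_json):
    """Names of StorageClasses annotated as default, given the JSON
    printed by `kubectl get storageclass -o json`."""
    items = json.loads(kubectl_json).get("items", [])
    names = []
    for sc in items:
        annotations = sc.get("metadata", {}).get("annotations") or {}
        if any(annotations.get(key) == "true" for key in DEFAULT_KEYS):
            names.append(sc["metadata"]["name"])
    return names
```

An empty list means claims without an explicit class, like the one the mongodb chart creates by default, have nothing to bind to and stay pending.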

Deploy the provisioner by creating its service account, cluster roles, cluster role bindings and DaemonSet:

# kubectl create -f sa.yaml -f cluster-roles.yaml -f cluster-role-bindings.yaml -f ds.yaml

# kubectl get ds -n kube-system | grep hostpath

hostpath-provisioner 0 0 0 0 0 nailgun.name/hostpath=enabled 16s

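The DaemonSet shows 0 desired pods because no node carries its node-selector label yet. Mark node ubuntu as a node that may host hostPath volumes, then check that the label is set: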

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

ubuntu Ready master 7d v1.9.0

# kubectl label node ubuntu nailgun.name/hostpath=enabled

node "ubuntu" labeled

# kubectl get nodes ubuntu --show-labels

NAME STATUS ROLES AGE VERSION LABELS

ubuntu Ready master 7d v1.9.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kismatic/cni-provider=calico,kismatic/kube-proxy=true,kubernetes.io/hostname=ubuntu,nailgun.name/hostpath=enabled,node-role.kubernetes.io/master=
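The DaemonSet's DESIRED count stayed at 0 because its node selector nailgun.name/hostpath=enabled matched no node. A sketch that lists the nodes carrying a given label in the output of kubectl get nodes -o json; nodes_with_label is a hypothetical helper, and it ignores taints and tolerations, which can also keep DaemonSet pods off a node such as a master:

```python
import json


def nodes_with_label(kubectl_json, key, value):
    """Names of nodes whose labels contain key=value, given the JSON
    printed by `kubectl get nodes -o json`."""
    nodes = json.loads(kubectl_json).get("items", [])
    return [
        node["metadata"]["name"]
        for node in nodes
        if (node["metadata"].get("labels") or {}).get(key) == value
    ]
```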

Annotate the node with the storage it provides:

# kubectl annotate node ubuntu hostpath.nailgun.name/ssd=/data

node "ubuntu" annotated

Then create the sc (StorageClass) and check it:

# kubectl create -f sc.yaml

storageclass "local-ssd" created

# kubectl get sc

NAME PROVISIONER AGE

local-ssd (default) nailgun.name/hostpath 10s

To test the PVC/PV, run:

# kubectl create -f pvc.yaml -f test-pod.yaml
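The contents of pvc.yaml are not shown here. As an illustration only, a claim matching the one in the output that follows (name local-ssd, 1Mi, ReadWriteMany, class local-ssd) can be generated as JSON, which kubectl create -f also accepts; pvc_manifest is a hypothetical helper, not the file from the repository:

```python
import json


def pvc_manifest(name, size, access_mode, storage_class=None):
    """A PersistentVolumeClaim as a dict. Leaving storage_class as None
    omits storageClassName, so the cluster's default class is used."""
    spec = {
        "accessModes": [access_mode],
        "resources": {"requests": {"storage": size}},
    }
    if storage_class is not None:
        spec["storageClassName"] = storage_class
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": spec,
    }


if __name__ == "__main__":
    # Mirrors the claim seen in the output: 1Mi, RWX, class local-ssd.
    print(json.dumps(pvc_manifest("local-ssd", "1Mi", "ReadWriteMany", "local-ssd"), indent=2))
```

Saved as a hypothetical pvc.py, it could be applied with python pvc.py | kubectl create -f -.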

You can check the result by logging in to the node ubuntu:

root@ubuntu:/data# ls

pvc-409fc19d-fbf3-11e7-b46e-525400e39849

root@ubuntu:/data# cat pvc-409fc19d-fbf3-11e7-b46e-525400e39849/test

hello

After the pod finishes, the PVC and PV look like this:

# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

local-ssd Bound pvc-409fc19d-fbf3-11e7-b46e-525400e39849 1Mi RWX local-ssd 2m

# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-409fc19d-fbf3-11e7-b46e-525400e39849 1Mi RWX Delete Bound default/local-ssd local-ssd 3m

After a reboot, checking the PV, PVC and sc shows that they have all been retained on disk.

Jan 16, 2018

Technology

Preparation

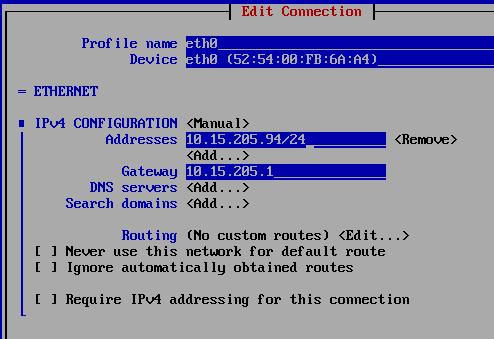

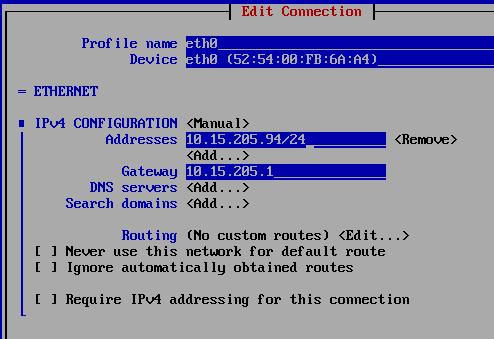

Define the IP address and gateway:

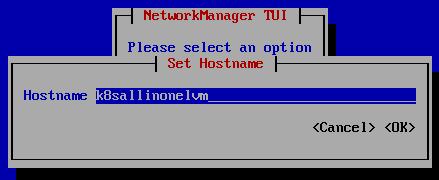

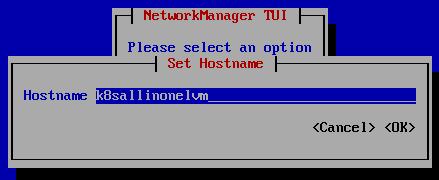

Set hostname:

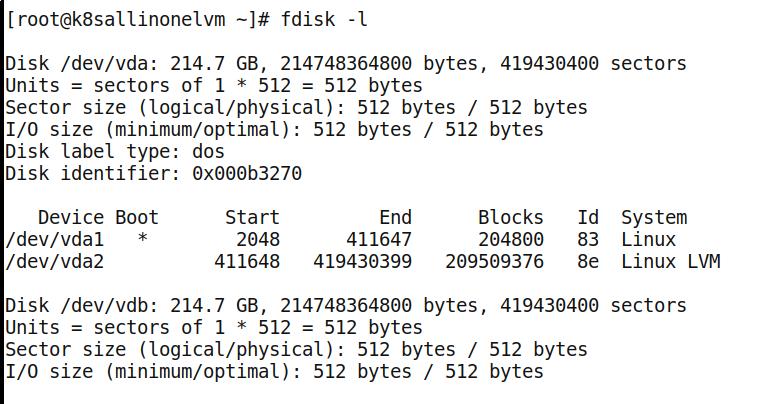

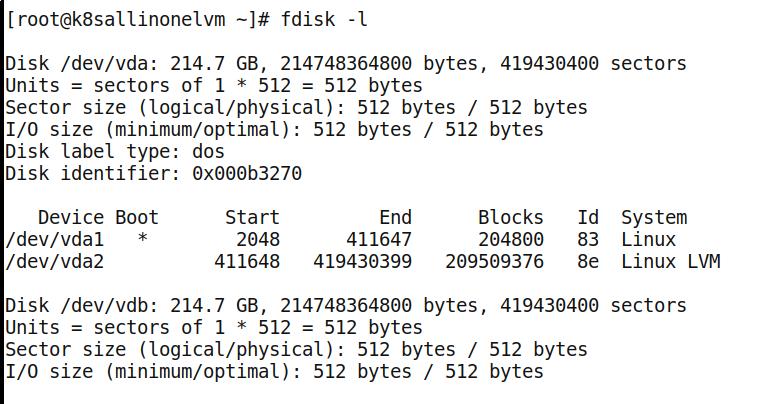

Disk Preparation:

Configuration

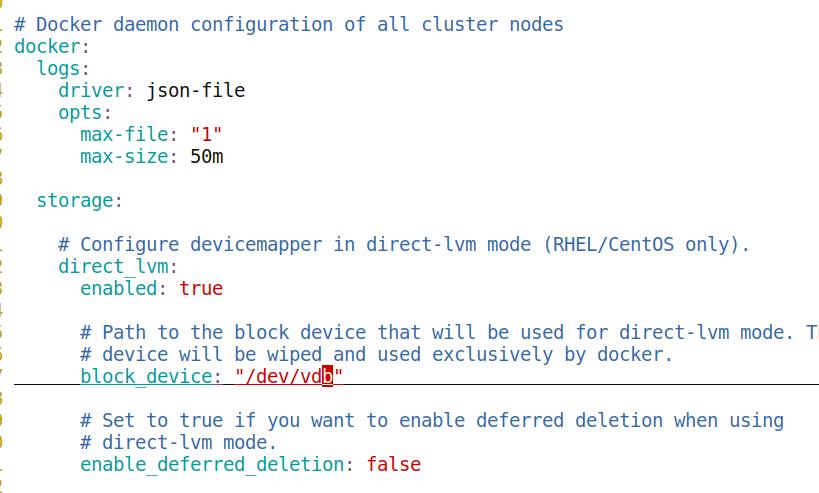

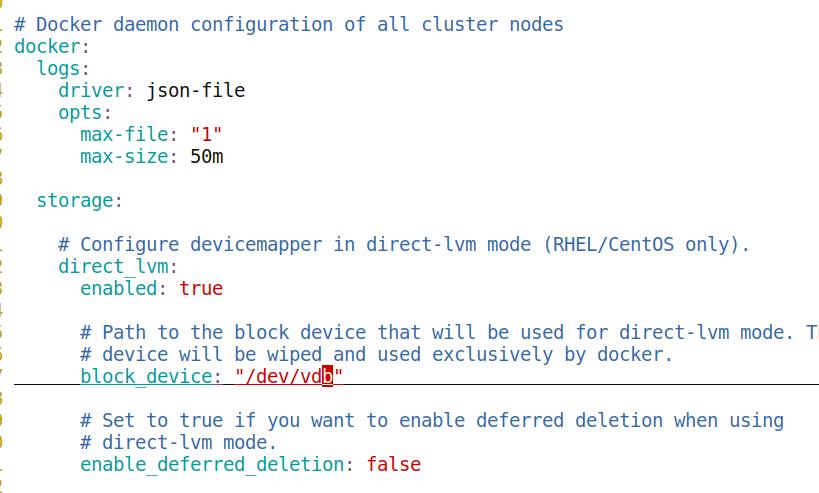

In kismatic, configure it as follows:

Then install kismatic as usual.

helm

In offline mode, helm repo update fails.

Aim: install Monocular in the cluster.