Feb 19, 2019

Back in 2018 I built an offline version of playwithk8s, based mainly on:

https://labs.play-with-k8s.com/

and

https://training.play-with-kubernetes.com/kubernetes-workshop/

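At the time I also wrote a series of tutorials and packaged everything into an ISO for offline deployment. A colleague tried that ISO a few days ago and reported that it would not run. I looked into the problems and summarized them below.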

DNS problem

On the freshly installed system dnsmasq does not work. The following steps fix it:

# vim /etc/systemd/resolved.conf

DNSStubListener=no

# systemctl disable systemd-resolved.service

# systemctl stop systemd-resolved.service

# echo nameserver 192.168.0.15>/etc/resolv.conf

# apt-get install -y dnsmasq

# systemctl enable dnsmasq

# vim /etc/dnsmasq.conf

address=/192.168.122.151/192.168.122.151

address=/localhost/127.0.0.1

# chattr +i /etc/resolv.conf

# chattr -e /etc/resolv.conf

# ufw disable

# docker swarm leave

# docker swarm init
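The two entries written to /etc/dnsmasq.conf above use dnsmasq's `address=/<name>/<ip>` form. As a minimal sketch (the helper name `dnsmasq_address_lines` is made up for illustration and is not part of the original setup), such lines can be generated from a mapping instead of being typed by hand:

```python
def dnsmasq_address_lines(records):
    """Render dnsmasq 'address=/<name>/<ip>' lines from a name -> IP mapping."""
    return [f"address=/{name}/{ip}" for name, ip in records.items()]


if __name__ == "__main__":
    # Values copied from the dnsmasq.conf edit above.
    entries = {
        "192.168.122.151": "192.168.122.151",
        "localhost": "127.0.0.1",
    }
    for line in dnsmasq_address_lines(entries):
        print(line)
```

The output can be appended to /etc/dnsmasq.conf before dnsmasq is restarted.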

After these steps the web UI is reachable:

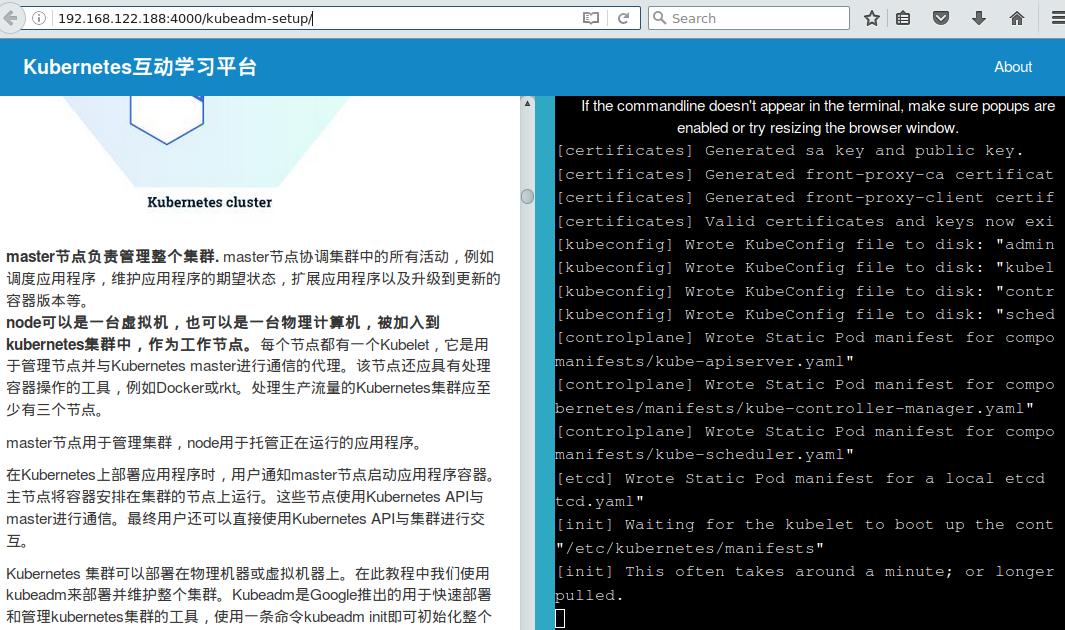

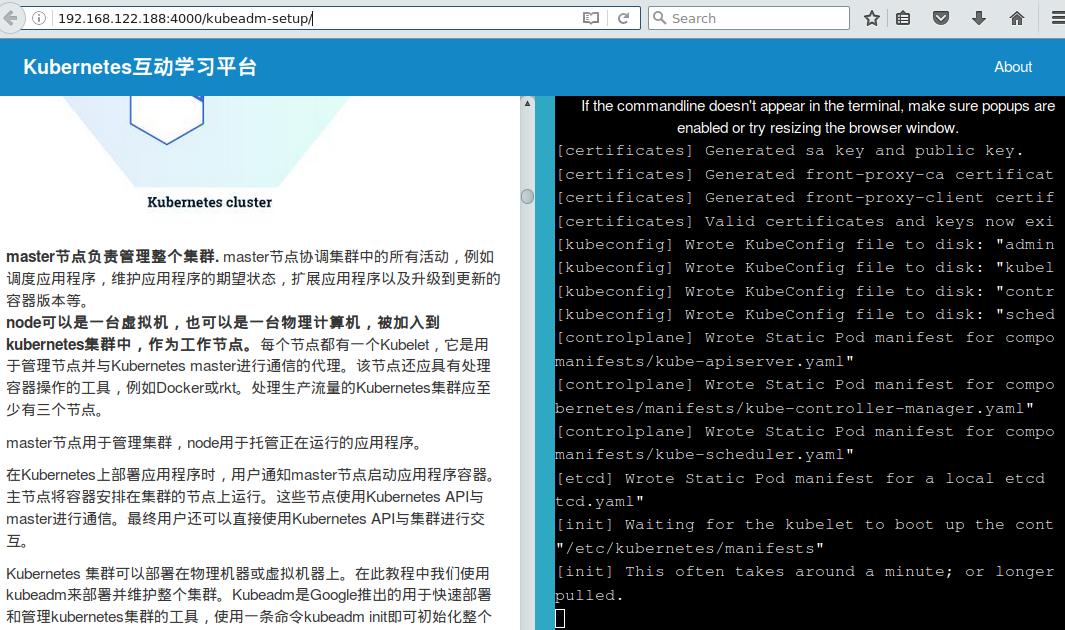

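kubeadm hangs

It hangs during init. The symptom is shown in the screenshots above.

Analysis: on a system with docker 17.12.1-ce everything runs normally.

The docker version installed from the ISO is 18.06.0-ce.

Could the docker version be incompatible with kubeadm?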

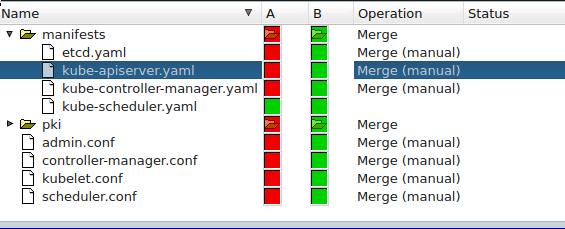

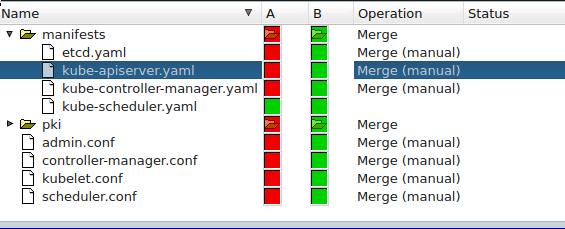

Files generated by kubeadm (/etc/kubernetes):

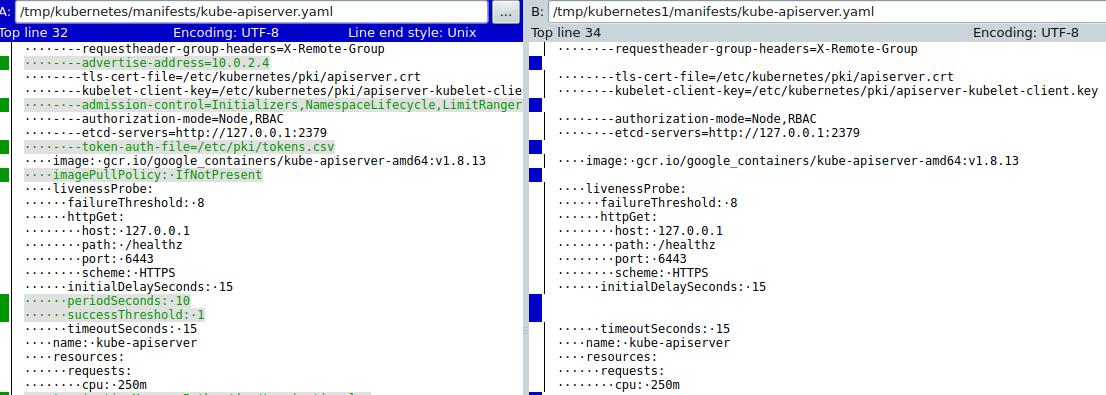

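Comparing kube-apiserver.yaml on the two systems shows a difference in imagePullPolicy:

This is puzzling: why would the same container image version, franela/k8s:latest, behave so differently?

Instances derived from the same container image should produce identical YAML manifests from their built-in kubeadm. On our system, however, the YAML generated under the newer docker does not carry the IfNotPresent option, so kubeadm concludes that the image is missing and fails with a timeout error.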
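The comparison above was done by eye on screenshots. A minimal sketch of the same check in code, assuming the manifest text has been read from a file under /etc/kubernetes/manifests (the function names and the sample manifest fragments are hypothetical, not taken from the real files):

```python
import re


def pull_policies(manifest_text):
    """Return every imagePullPolicy value found in a manifest, in order."""
    pattern = r"^\s*(?:-\s*)?imagePullPolicy:\s*(\S+)\s*$"
    return re.findall(pattern, manifest_text, flags=re.MULTILINE)


def lacks_if_not_present(manifest_text):
    """True when the manifest names an image but never sets IfNotPresent."""
    has_image = re.search(
        r"^\s*(?:-\s*)?image:\s*\S+", manifest_text, flags=re.MULTILINE
    ) is not None
    return has_image and "IfNotPresent" not in pull_policies(manifest_text)


if __name__ == "__main__":
    # Hypothetical fragment shaped like a static pod manifest.
    sample = (
        "  - image: k8s.gcr.io/kube-apiserver-amd64:v1.11.7\n"
        "    name: kube-apiserver\n"
    )
    print(lacks_if_not_present(sample))
```

This is a plain text scan rather than a YAML parse, which is enough to spot the missing option in a single-container manifest.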

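Possible solutions

I have no time to work on this right now, so I am only writing down my ideas.

Use a registry to cache all the images (dropping the docker load step) and fetch the gcr.io images straight from that cache, so that docker pulls from the cache at startup. I am not sure, though, whether gcr.io can be cached the same way as registry-1.docker.io.

Forge the gcr.io certificate and point the name at an internal registry. This can follow the approach in my kubespray framework to fool dind.

Running docker offline really is asking for trouble, one setback after another!

Update

I could not focus on anything else lately, so I finished the offline PWK after all. The steps are recorded here:

Update to the new play-with-kubernetes: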

# git clone https://github.com/play-with-docker/play-with-kubernetes.github.io

Of course it has to be adapted here so that it works offline.

The franela/k8s image used dates from roughly the end of 2018:

# franela/k8s latest c7038cbdbc5d 2 months ago 733MB

Because kubeadm inside this container image has been upgraded to a newer version, the images must be downloaded again:

k8s.gcr.io/kube-proxy-amd64 v1.11.7 e9a1134ab5aa 4 weeks ago 98.1MB

k8s.gcr.io/kube-apiserver-amd64 v1.11.7 d82b2643a56a 4 weeks ago 187MB

k8s.gcr.io/kube-controller-manager-amd64 v1.11.7 93fb4304c50c 4 weeks ago 155MB

k8s.gcr.io/kube-scheduler-amd64 v1.11.7 52ea1e0a3e60 4 weeks ago 56.9MB

weaveworks/weave-npc 2.5.1 789b7f496034 4 weeks ago 49.6MB

weaveworks/weave-kube 2.5.1 1f394ae9e226 4 weeks ago 148MB

k8s.gcr.io/coredns 1.1.3 b3b94275d97c 9 months ago 45.6MB

k8s.gcr.io/etcd-amd64 3.2.18 b8df3b177be2 10 months ago 219MB

k8s.gcr.io/pause 3.1 da86e6ba6ca1 14 months ago 742kB

nginx latest 05a60462f8ba 2 years ago 181MB
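To pull or save exactly these images again, the listing can be turned into `repo:tag` references. The following is a small sketch that assumes the rows have the `docker images` column order shown above (repository, tag, image ID, ...); `image_refs` is an illustrative name:

```python
def image_refs(listing):
    """Turn `docker images` rows (REPOSITORY TAG ...) into 'repo:tag' references."""
    refs = []
    for row in listing.splitlines():
        fields = row.split()
        # Skip blank rows, the header row, and untagged (<none>) images.
        if len(fields) < 2 or fields[0] == "REPOSITORY" or "<none>" in fields[:2]:
            continue
        refs.append(f"{fields[0]}:{fields[1]}")
    return refs


if __name__ == "__main__":
    rows = (
        "k8s.gcr.io/kube-proxy-amd64 v1.11.7 e9a1134ab5aa 4 weeks ago 98.1MB\n"
        "k8s.gcr.io/pause 3.1 da86e6ba6ca1 14 months ago 742kB\n"
    )
    for ref in image_refs(rows):
        print(ref)
```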

The kubeadm init arguments also have to change to:

# kubeadm init --apiserver-advertise-address $(hostname -i) --kubernetes-version=v1.11.7

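In the multi-node chapter, kubeadm 1.11.7 conflicts with the original calico 3.1, so we moved to the newer 3.5 release.

Because docker-in-docker comes with two networks, we also need to specify the IP range in calico.yaml to make sure the BGP tunnel is established on the correct network interface: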

- name: CALICO_IPV4POOL_CIDR
  value: "192.168.0.0/16"
- name: IP_AUTODETECTION_METHOD
  value: "interface=eth0.*"

With that, the update to the new playwithk8s is complete. It took 5 days in total. It was a bit of a grind, but looking back those 5 days were worth it.

Feb 19, 2019

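A note on a problem I ran into yesterday:

A colleague's virtual machine (Ubuntu) failed its boot-time check: fsck never passed and the system dropped straight to the initramfs prompt.

What we tried:

Holding Shift at boot to reach the grub menu and booting in recovery mode still failed. It looked like the ext4 disk had inode errors.

Solution:

Boot the machine from a live CD, then run fsck from the live system to repair the file system.

gparted failed to start.

In the end we got in with a CentOS 7 live CD: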

# fsck -y /dev/mapper/lv_lv_root

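This scans the disk and automatically repairs (answering yes to every prompt) the inode errors. After the repair the machine booted normally from the hard disk, which saved us from reinstalling the operating system.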

Jan 31, 2019

AIM

Kubespray has released v2.8.2, so this article records the steps for upgrading my offline solution from v2.8.1 to v2.8.2.

Prerequisites

Download the v2.8.2 release from:

https://github.com/kubernetes-sigs/kubespray/releases

# wget https://github.com/kubernetes-sigs/kubespray/archive/v2.8.2.tar.gz

# tar xzvf v2.8.2.tar.gz

# cd kubespray-2.8.2

Modification

1. cluster.yml

Three changes were made:

a. Added the kube-deploy role.

b. Disabled installation of container-engine (because we install docker directly).

c. Created a cluster role binding for the k8s dashboard.

- { role: bastion-ssh-config, tags: ["localhost", "bastion"]}

# Notice 1, we combine deploy.yml here.

- hosts: kube-deploy

gather_facts: false

roles:

- kube-deploy

# Notice 1 ends here.

- hosts: k8s-cluster:etcd:calico-rr:!kube-deploy

any_errors_fatal: "{{ any_errors_fatal | default(true) }}"

.......

- { role: kubernetes/preinstall, tags: preinstall }

#- { role: "container-engine", tags: "container-engine", when: deploy_container_engine|default(true) }

- { role: download, tags: download, when: "not skip_downloads" }

.......

- { role: kubernetes-apps, tags: apps }

- hosts: kube-master[0]

tasks:

- name: "Create clusterrolebinding"

shell: kubectl create clusterrolebinding cluster-admin-fordashboard --clusterrole=cluster-admin --user=system:serviceaccount:kube-system:kubernetes-dashboard

2. Vagrantfile

Ten changes were made:

a. Added a customized vagrant box (vagrant-libvirt).

b. Added a kube-deploy instance, which is added automatically by the Vagrantfile.

c. Added disks, because we will use glusterfs.

d. Specified the OS, and enabled calico instead of flannel.

e. Added dns.sh to customize DNS for the offline environment.

f. Added vagrant-libvirt settings.

g. Disabled the synced folder.

h. Call dns.sh every time a VM starts up.

i. Disabled some download items, and specified the ansible python interpreter.

j. Let ansible become root, disabled the ask-pass prompt, and added kube-deploy to the vagrant-generated inventory file.

"opensuse-tumbleweed" => {box: "opensuse/openSUSE-Tumbleweed-x86_64", user: "vagrant"},

"wukong" => {box: "kubespray", user: "vagrant"},

"wukong1804" => {box: "rong1804", user: "vagrant"},

}

......

$kube_master_instances = $num_instances == 1 ? $num_instances : ($num_instances - 1)

$kube_deploy_instances = 1

# All nodes are kube nodes

......

$kube_node_instances_with_disks = true

$kube_node_instances_with_disks_size = "40G"

......

$os = "wukong"

$network_plugin = "calico"

......

FileUtils.ln_s($inventory, File.join($vagrant_ansible,"inventory"))

end

end

File.open('./dns.sh' ,'w') do |f|

f.write "#!/bin/bash\n"

f.write "sed -i '/^#VAGRANT-END/i dns-nameservers 10.148.129.101' /etc/network/interfaces\n"

f.write "systemctl restart networking.service\n"

#f.write "rm -f /etc/resolv.conf\n"

#f.write "ln -s /run/systemd/resolve/resolv.conf /etc/resolv.conf\n"

#f.write "echo ' nameservers:'>>/etc/netplan/50-vagrant.yaml\n"

#f.write "echo ' addresses: [10.148.129.101]'>>/etc/netplan/50-vagrant.yaml\n"

#f.write "netplan apply eth1\n"

end

......

lv.default_prefix = 'rong1804node'

lv.cpu_mode = 'host-passthrough'

lv.storage_pool_name = 'default'

lv.disk_bus = 'virtio'

lv.nic_model_type = 'virtio'

lv.volume_cache = 'writeback'

# Fix kernel panic on fedora 28

......

node.vm.synced_folder ".", "/vagrant", disabled: true, type: "rsync", rsync__args: ['--verbose', '--archive', '--delete', '-z'] , rsync__exclude: ['.git','venv']

$shared_folders.each do |src, dst|

......

node.vm.provision "shell", inline: "swapoff -a"

# Change the dns-nameservers

node.vm.provision :shell, path: "dns.sh"

......

#"docker_keepcache": "1",

#"download_run_once": "True",

#"download_localhost": "False",

"ansible_python_interpreter": "/usr/bin/python3"

......

ansible.become_user = "root"

ansible.limit = "all"

ansible.host_key_checking = false

#ansible.raw_arguments = ["--forks=#{$num_instances}", "--flush-cache", "--ask-become-pass"]

ansible.host_vars = host_vars

#ansible.tags = ['download']

ansible.groups = {

"kube-deploy" => ["#{$instance_name_prefix}-[1:#{$kube_deploy_instances}]"],

3. inventory folder

Remove the hosts.ini file under inventory/sample/.

Edit some content in sample folder:

inventory/sample/group_vars/k8s-cluster/k8s-cluster.yml:

kubelet_deployment_type: host

helm_deployment_type: host

helm_stable_repo_url: "http://portus.ddddd.com:5000/chartrepo/kubesprayns"

helm/metric server :

helm_enabled: true

# Metrics Server deployment

metrics_server_enabled: true

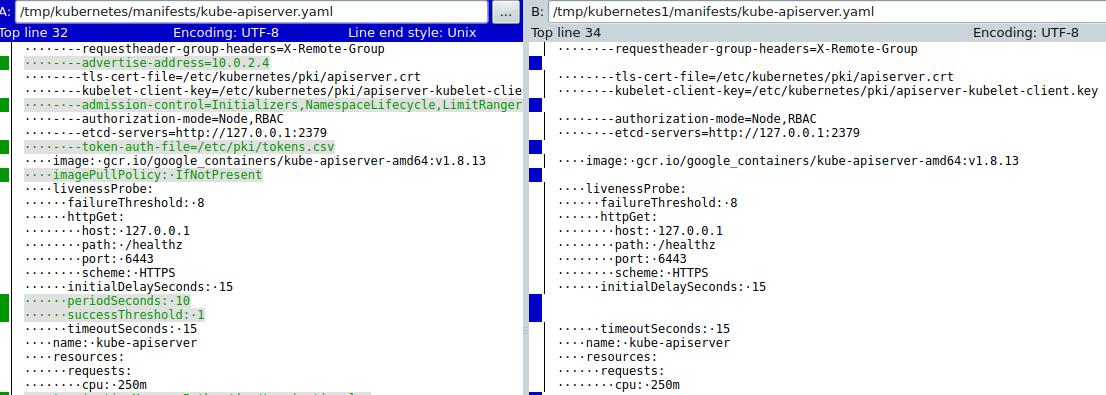

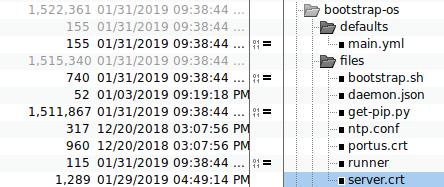

4. role bootstrap-os

- Copy daemon.json, ntp.conf, portus.crt and server.crt to kubespray-2.8.2.

- bootstrap-ubuntu.yml changes

These changes disable auto-update and configure the intranet repository.

# Bug-fix1: disable auto-update so that it stops holding apt

- name: "Configure apt service status"

raw: systemctl stop apt-daily.timer;systemctl disable apt-daily.timer ; systemctl stop apt-daily-upgrade.timer ; systemctl disable apt-daily-upgrade.timer; systemctl stop apt-daily.service; systemctl mask apt-daily.service; systemctl daemon-reload

- name: "Sed configuration"

raw: sed -i 's/APT::Periodic::Update-Package-Lists "1"/APT::Periodic::Update-Package-Lists "0"/' /etc/apt/apt.conf.d/10periodic

- name: Configure intranet repository

raw: echo "deb [trusted=yes] http://portus.dddd.com:8888 ./">/etc/apt/sources.list && apt-get update -y && mkdir -p /usr/local/static

- name: List ubuntu_packages

Install more packages:

- python-pip

- ntp

- dbus

- docker-ce

- build-essential

Upload the crt files, add the configuration files for docker, run docker login, etc.

- name: "upload portus.crt and server.crt files to kube-deploy"

copy:

src: "{{ item }}"

dest: /usr/local/share/ca-certificates

owner: root

group: root

mode: 0777

with_items:

- files/portus.crt

- files/server.crt

- name: "upload daemon.json files to kube-deploy"

copy:

src: files/daemon.json

dest: /etc/docker/daemon.json

owner: root

group: root

mode: 0777

- name: "update ca-certificates"

shell: update-ca-certificates

- name: ensure docker service is restarted and enabled

service:

name: "{{ item }}"

enabled: yes

state: restarted

with_items:

- docker

- name: Docker login portus.dddd.com

shell: docker login -u kubespray -p Thinker@1 portus.dddd.com:5000

- name: Docker login docker.io

shell: docker login -u kubespray -p thinker docker.io

- name: Docker login quay.io

shell: docker login -u kubespray -p thinker quay.io

- name: Docker login gcr.io

shell: docker login -u kubespray -p thinker gcr.io

- name: Docker login k8s.gcr.io

shell: docker login -u kubespray -p thinker k8s.gcr.io

- name: Docker login docker.elastic.co

shell: docker login -u kubespray -p thinker docker.elastic.co

- name: "upload ntp.conf"

copy:

src: files/ntp.conf

dest: /etc/ntp.conf

owner: root

group: root

mode: 0777

- name: "Configure ntp service"

shell: systemctl enable ntp && systemctl start ntp

- set_fact:

ansible_python_interpreter: "/usr/bin/python"

tags:

- facts

5. role download

Using local server for downloading:

# Download URLs

#kubeadm_download_url: "https://storage.googleapis.com/kubernetes-release/release/{{ kubeadm_version }}/bin/linux/{{ image_arch }}/kubeadm"

#hyperkube_download_url: "https://storage.googleapis.com/kubernetes-release/release/{{ kube_version }}/bin/linux/amd64/hyperkube"

etcd_download_url: "https://github.com/coreos/etcd/releases/download/{{ etcd_version }}/etcd-{{ etcd_version }}-linux-amd64.tar.gz"

#cni_download_url: "https://github.com/containernetworking/plugins/releases/download/{{ cni_version }}/cni-plugins-{{ image_arch }}-{{ cni_version }}.tgz"

kubeadm_download_url: "http://portus.dddd.com:8888/kubeadm"

hyperkube_download_url: "http://portus.dddd.com:8888/hyperkube"

cni_download_url: "http://portus.dddd.com:8888/cni-plugins-{{ image_arch }}-{{ cni_version }}.tgz"
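The local `cni_download_url` still contains two template variables, so the file served from the local server has to be named accordingly. A tiny sketch of how the name expands (the function is illustrative only; the arch and version values below are examples taken from the file names that appear in this post, not values read from kubespray):

```python
def cni_download_url(base_url, image_arch, cni_version):
    """Expand the cni_download_url template the way the Jinja2 expression would."""
    return f"{base_url}/cni-plugins-{image_arch}-{cni_version}.tgz"


if __name__ == "__main__":
    # Example values only; check image_arch and cni_version in your own release.
    print(cni_download_url("http://portus.dddd.com:8888", "amd64", "v0.7.0"))
```

If the expanded name does not match a file on the local server, the download role fails for that item.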

Offline role

Create a folder for the offline role under roles:

# mkdir -p roles/kube-deploy

This folder contains the following files:

# tree .

.

├── files

│ ├── 1604debs.tar.xz

│ ├── 1804debs.tar.xz

│ ├── autoindex.tar.xz

│ ├── ban_nouveau.run

│ ├── busybox1262.tar.xz

│ ├── cni-plugins-amd64-v0.6.0.tgz

│ ├── cni-plugins-amd64-v0.7.0.tgz

│ ├── compose.tar.gz

│ ├── daemon.json

│ ├── dashboard.tar.xz

│ ├── data.tar.gz

│ ├── db.docker.io

│ ├── db.elastic.co

│ ├── db.gcr.io

│ ├── db.quay.io

│ ├── db.dddd.com

│ ├── deploy.key

│ ├── deploy.key.pub

│ ├── deploy_local.repo

│ ├── dns.sh

│ ├── docker-compose

│ ├── docker-infra.service

│ ├── fileserver.tar.gz

│ ├── harbor.tar.xz

│ ├── hyperkube

│ ├── install.run

│ ├── kubeadm

│ ├── kubeadm.official

│ ├── mynginx.service

│ ├── named.conf.default-zones

│ ├── nginx19.tar.xz

│ ├── nginx.tar

│ ├── nginx.yaml

│ ├── ntp.conf

│ ├── pipcache.tar.gz

│ ├── portus.crt

│ ├── portus.tar.xz

│ ├── registry2.tar.xz

│ ├── secureregistryserver.service

│ ├── secureregistryserver.tar

│ ├── server.crt

│ └── tag_and_push.sh

└── tasks

├── deploy-centos.yml

├── deploy-ubuntu.yml

└── main.yml

2 directories, 45 files

Set up a cluster using the old files, then log in to the kube-deploy server and do the following:

# systemctl stop secureregistryserver

# docker ps | grep secureregistryserver

# rm -rf /usr/local/secureregistryserver/data/

# mkdir /usr/local/secureregistryserver/data

# systemctl start secureregistryserver

# docker ps | grep secureregistry

7ce648336f22 nginx:1.9 "nginx -g 'daemon of…" 3 seconds ago Up 1 second 80/tcp, 0.0.0.0:443->443/tcp secureregistryserver_nginx_1_ce6348edc346

9258c0396001 registry:2 "/entrypoint.sh /etc…" 4 seconds ago Up 2 seconds 127.0.0.1:5050->5000/tcp secureregistryserver_registry_1_76f71f58f22d

Load the kubespray images and push to secureregistryserver:

# docker load<combine.tar.xz

# docker push *****************

# docker push *****************

# docker push *****************

Load the kubeadm related images and push to secureregistryserver:

# docker load<kubeadm.tar

# docker push *****************

# docker push *****************

# docker push *****************

Now stop the secureregistryserver service and make the tar file:

# systemctl stop secureregistryserver

# cd /usr/local/

# tar cvf secureregistryserver.tar secureregistryserver

Copy the new secureregistryserver.tar into the kube-deploy/files folder, replacing the old one.

Replace kubeadm (our build signs certificates that are valid for 100 years):

# sha256sum kubeadm

33ef160d8dd22e7ef6eb4527bea861218289636e6f1e3e54f2b19351c1048a07 kubeadm

# vim /var1/sync/kubespray282/kubespray282/kubespray-2.8.2/roles/download/defaults/main.yml

kubeadm_checksums:

v1.12.5: 33ef160d8dd22e7ef6eb4527bea861218289636e6f1e3e54f2b19351c1048a07
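The value placed in `kubeadm_checksums` must be the SHA-256 of the binary that is actually served, otherwise the download role rejects it. A minimal sketch of the same check as `sha256sum`, using only the Python standard library (the function names are illustrative):

```python
import hashlib


def sha256_of(path, chunk_size=1024 * 1024):
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_checksum(path, expected_hex):
    """Compare a file's digest with an expected value, ignoring case and whitespace."""
    return sha256_of(path) == expected_hex.strip().lower()
```

For example, `matches_checksum("kubeadm", "33ef160d...")` with the full digest from the listing above should return True for the replaced binary.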

Replace hyperkube:

# wget https://storage.googleapis.com/kubernetes-release/release/v1.12.5/bin/linux/amd64/hyperkube

Replace New Items

!!!Rechecked here!!!

debs.tar.xz should be replaced (currently we still use the old ones).

secureregistryserver.tar contains all of the offline docker images, must be replaced.

kubeadm should be replaced, and checksums should be updated.

hyperkube should be replaced.

Using vps for fetching images

On a VPS, download kubespray-2.8.2.tar.gz and make some modifications for syncing the images back:

Create a new sync.yml file:

root@vpsserver:~/Code/kubespray-2.8.2# cat sync.yml

---

- hosts: k8s-cluster:etcd:calico-rr

any_errors_fatal: "{{ any_errors_fatal | default(true) }}"

roles:

- { role: kubespray-defaults}

- { role: sync, tags: download, when: "not skip_downloads" }

environment: "{{proxy_env}}"

Copy the sample folder under inventory to a new name sync:

root@vpsserver:~/Code/kubespray-2.8.2# cat inventory/sync/hosts.ini

# ## Configure 'ip' variable to bind kubernetes services on a

# ## different ip than the default iface

# ## We should set etcd_member_name for etcd cluster. The node that is not a etcd member do not need to set the value, or can set the empty string value.

[all]

vpsserver ansible_ssh_host=1xxx.xxx.xxx.xx ansible_user=root ansible_ssh_private_key_file="/root/.ssh/id_rsa" ansible_port=22

[kube-master]

vpsserver

[etcd]

vpsserver

[kube-node]

vpsserver

[k8s-cluster:children]

kube-master

kube-node

Copy roles/download to roles/sync and make some changes. Note that every docker image entry under downloads should be changed to enabled: true:

root@vpsserver:~/Code/kubespray-2.8.2/roles# ls

adduser bootstrap-os dnsmasq etcd kubernetes-apps network_plugin reset upgrade

bastion-ssh-config container-engine download kubernetes kubespray-defaults remove-node sync win_nodes

root@vpsserver:~/Code/kubespray-2.8.2/roles# cd sync/

root@vpsserver:~/Code/kubespray-2.8.2/roles/sync# ls

defaults meta tasks

root@vpsserver:~/Code/kubespray-2.8.2/roles/sync# cat defaults/main.yml

downloads:

netcheck_server:

#enabled: "{{ deploy_netchecker }}"

enabled: true

container: true

repo: "{{ netcheck_server_image_repo }}"

tag: "{{ netcheck_server_image_tag }}"

sha256: "{{ netcheck_server_digest_checksum|default(None) }}"

groups:

- k8s-cluster

netcheck_agent:

#enabled: "{{ deploy_netchecker }}"

enabled: true

container: true

repo: "{{ netcheck_agent_image_repo }}"

tag: "{{ netcheck_agent_image_tag }}"

sha256: "{{ netcheck_agent_digest_checksum|default(None) }}"

groups:

- k8s-cluster

Disable the Sync container task, so that the docker images are only downloaded locally.

root@vpsserver:~/Code/kubespray-2.8.2/roles/sync# cat tasks/main.yml

---

- include_tasks: download_prep.yml

when:

- not skip_downloads|default(false)

- name: "Download items"

include_tasks: "download_{% if download.container %}container{% else %}file{% endif %}.yml"

vars:

download: "{{ download_defaults | combine(item.value) }}"

with_dict: "{{ downloads }}"

when:

- not skip_downloads|default(false)

- item.value.enabled

- (not (item.value.container|default(False))) or (item.value.container and download_container)

#- name: "Sync container"

# include_tasks: sync_container.yml

# vars:

# download: "{{ download_defaults | combine(item.value) }}"

# with_dict: "{{ downloads }}"

# when:

# - not skip_downloads|default(false)

# - item.value.enabled

# - item.value.container | default(false)

# - download_run_once

# - group_names | intersect(download.groups) | length
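The three `when` conditions of the "Download items" task decide which entries of `downloads` are fetched. A rough Python rendering of that filter, written as a sketch to make the logic explicit (the function name is illustrative, and unlike the Ansible expression it treats a missing `enabled` key as false instead of raising an error):

```python
def should_download(item, skip_downloads=False, download_container=True):
    """Mirror the three `when` conditions of the "Download items" task."""
    is_container = bool(item.get("container", False))
    return (
        not skip_downloads
        and bool(item.get("enabled", False))
        # Files always pass; containers pass only when download_container is set.
        and (not is_container or download_container)
    )


if __name__ == "__main__":
    netcheck_server = {"enabled": True, "container": True}
    print(should_download(netcheck_server))
```

This is why forcing `enabled: true` in roles/sync/defaults/main.yml is enough to make every predefined image get pulled.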

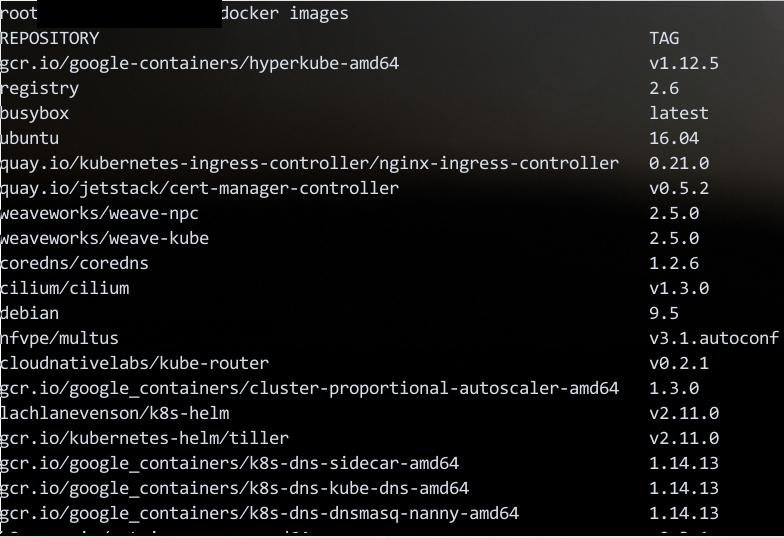

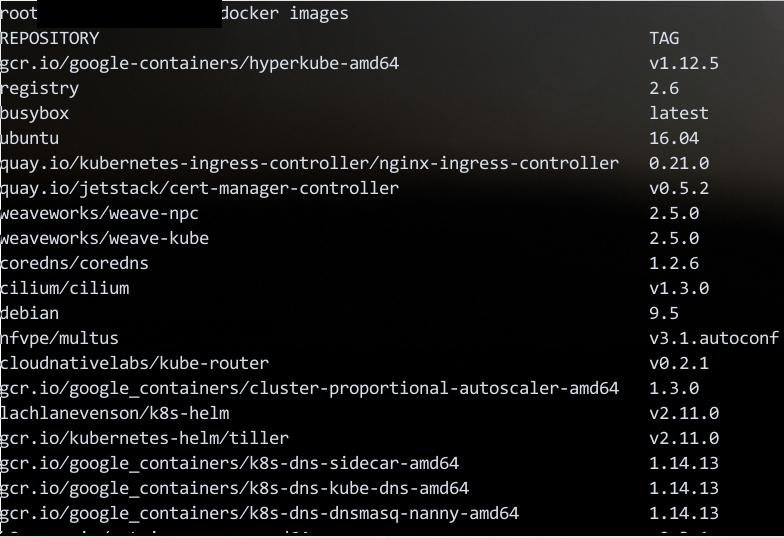

All of the kubespray pre-defined images will be downloaded to vps, check via:

Save them with a script:

# docker save -o combine.tar gcr.io/google-containers/hyperkube-amd64:v1.12.5 registry:2.6 busybox:latest quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.21.0 quay.io/jetstack/cert-manager-controller:v0.5.2 weaveworks/weave-npc:2.5.0 weaveworks/weave-kube:2.5.0 coredns/coredns:1.2.6 cilium/cilium:v1.3.0 nfvpe/multus:v3.1.autoconf cloudnativelabs/kube-router:v0.2.1 gcr.io/google_containers/cluster-proportional-autoscaler-amd64:1.3.0 lachlanevenson/k8s-helm:v2.11.0 gcr.io/kubernetes-helm/tiller:v2.11.0 gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.13 gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.13 gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.13 k8s.gcr.io/metrics-server-amd64:v0.3.1 quay.io/external_storage/cephfs-provisioner:v2.1.0-k8s1.11 gcr.io/google_containers/kubernetes-dashboard-amd64:v1.10.0 busybox:1.29.2 quay.io/coreos/etcd:v3.2.24 k8s.gcr.io/addon-resizer:1.8.3 quay.io/calico/node:v3.1.3 quay.io/calico/ctl:v3.1.3 quay.io/calico/kube-controllers:v3.1.3 quay.io/calico/cni:v3.1.3 nginx:1.13 quay.io/external_storage/local-volume-provisioner:v2.1.0 quay.io/calico/routereflector:v0.6.1 quay.io/coreos/flannel:v0.10.0 contiv/netplugin:1.2.1 contiv/auth_proxy:1.2.1 gcr.io/google_containers/pause-amd64:3.1 ferest/etcd-initer:latest andyshinn/dnsmasq:2.78 quay.io/coreos/flannel-cni:v0.3.0 xueshanf/install-socat:latest quay.io/l23network/k8s-netchecker-agent:v1.0 quay.io/l23network/k8s-netchecker-server:v1.0 gcr.io/google_containers/kube-registry-proxy:0.4

# xz combine.tar
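Typing such a long `docker save` line by hand is error-prone. A small sketch that assembles the argument list from a list of image references and drops accidental duplicates (the helper name and the default output name `combine.tar` are assumptions that follow the command above):

```python
import shlex


def docker_save_command(images, output="combine.tar"):
    """Build the argv for `docker save`, dropping duplicates but keeping order."""
    unique = list(dict.fromkeys(images))
    return ["docker", "save", "-o", output, *unique]


if __name__ == "__main__":
    argv = docker_save_command(["registry:2.6", "busybox:latest", "registry:2.6"])
    # Print a shell-safe command line instead of running it.
    print(shlex.join(argv))
```

The resulting list can be passed to `subprocess.run` on the machine that holds the images.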

Using vagrant for fetching kubeadm

Your vagrant box must be able to reach the Internet past the GFW. Then use a 16.04 box to create an all-in-one k8s cluster.

After provisioning, use the following commands to save all of the images:

# docker images

# docker save -o combine.tar xxxxx xxx xxx xxx xxx xxx

Jan 29, 2019

Suppose your kubernetes clusters run in a completely offline environment with no connection to the Internet. How do you handle docker pull requests inside the cluster? The following steps solve this problem.

Docker-Composed Registry

This step is pretty simple. I mainly referred to:

https://www.digitalocean.com/community/tutorials/how-to-set-up-a-private-docker-registry-on-ubuntu-14-04

Following the steps in that article, I got a docker registry server running under docker-compose.

My folder structure is as follows:

# tree .

├── data

├── docker-compose.yml

├── nginx

│ ├── openssl.cnf

│ ├── registry.conf

│ ├── registry.password

│ ├── server.crt

│ ├── server.csr

│ └── server.key

Docker-compose file is listed as:

# cat docker-compose.yml

nginx:
  image: "nginx:1.9"
  ports:
    - 443:443
  links:
    - registry:registry
  volumes:
    - ./nginx/:/etc/nginx/conf.d:ro
registry:
  image: registry:2
  ports:
    - 127.0.0.1:5000:5000
  environment:
    REGISTRY_STORAGE_FILESYSTEM_ROOTDIRECTORY: /data
  volumes:
    - ./data:/data

Handle SSL

The steps above only create a registry server with an SSL certificate for a single hostname. We want a self-signed certificate that covers multiple domains.

Create a file called openssl.cnf with the following content. Note that it lists docker.io, gcr.io, quay.io and elastic.co.

# vim openssl.cnf

[req]

distinguished_name = req_distinguished_name

req_extensions = v3_req

[req_distinguished_name]

countryName = SL

countryName_default = SL

stateOrProvinceName = Western

stateOrProvinceName_default = Western

localityName = Colombo

localityName_default = Colombo

organizationalUnitName = ABC

organizationalUnitName_default = ABC

commonName = *.docker.io

commonName_max = 64

[ v3_req ]

# Extensions to add to a certificate request

basicConstraints = CA:FALSE

keyUsage = nonRepudiation, digitalSignature, keyEncipherment

subjectAltName = @alt_names

[alt_names]

DNS.1 = *.docker.io

DNS.2 = *.gcr.io

DNS.3 = *.quay.io

DNS.4 = *.elastic.co

DNS.5 = docker.io

DNS.6 = gcr.io

DNS.7 = quay.io

DNS.8 = elastic.co
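When more registries have to be covered, the `[alt_names]` section grows by two entries per domain. As a sketch, assuming the same layout as above (wildcards first, then the bare domains), the section can be generated; `alt_names_section` is a made-up helper name:

```python
def alt_names_section(domains):
    """Render an [alt_names] block: wildcard entries first, then the bare domains."""
    names = [f"*.{domain}" for domain in domains] + list(domains)
    lines = ["[alt_names]"]
    lines += [f"DNS.{index} = {name}" for index, name in enumerate(names, start=1)]
    return "\n".join(lines)


if __name__ == "__main__":
    print(alt_names_section(["docker.io", "gcr.io", "quay.io", "elastic.co"]))
```

Run with the four domains used here, it prints the same eight DNS entries as the hand-written file.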

Create the Private key.

# rm -f server.key

# sudo openssl genrsa -out server.key 2048

Generating RSA private key, 2048 bit long modulus (2 primes)

.....................+++++

......................................+++++

e is 65537 (0x010001)

Create Certificate Signing Request (CSR).

# rm -f server.csr

# sudo openssl req -new -out server.csr -key server.key -config openssl.cnf

You are about to be asked to enter information that will be incorporated

into your certificate request.

What you are about to enter is what is called a Distinguished Name or a DN.

There are quite a few fields but you can leave some blank

For some fields there will be a default value,

If you enter '.', the field will be left blank.

-----

SL [SL]:

Western [Western]:

Colombo [Colombo]:

ABC [ABC]:

*.docker.io []:

The *.docker.io prompt comes from the commonName entry defined in openssl.cnf above.

Sign the SSL Certificate.

# rm -f server.crt

# sudo openssl x509 -req -days 36500 -in server.csr -signkey server.key -out server.crt -extensions v3_req -extfile openssl.cnf

Signature ok

subject=C = SL, ST = Western, L = Colombo, OU = ABC

Getting Private key

You can check the content of the SSL certificate via:

# openssl x509 -in server.crt -noout -text

Certificate:

Data:

....

Validity

Not Before: Jan 29 08:30:20 2019 GMT

Not After : Jan 5 08:30:20 2119 GMT

....

X509v3 Subject Alternative Name:

DNS:*.docker.io, DNS:*.gcr.io, DNS:*.quay.io, DNS:*.elastic.co, DNS:docker.io, DNS:gcr.io, DNS:quay.io, DNS:elastic.co
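The certificate lists both `*.gcr.io` and `gcr.io` because a wildcard entry covers exactly one extra label and does not match the bare domain. The following is a simplified model of that matching rule, written as a sketch; real TLS libraries apply additional checks, and the function names are illustrative:

```python
def san_matches(hostname, pattern):
    """Match a host name against one SAN entry; '*' covers exactly one left-most label."""
    host_labels = hostname.lower().rstrip(".").split(".")
    pattern_labels = pattern.lower().rstrip(".").split(".")
    if len(host_labels) != len(pattern_labels):
        return False
    if pattern_labels[0] == "*":
        return host_labels[1:] == pattern_labels[1:]
    return host_labels == pattern_labels


def covered(hostname, san_entries):
    """True when any SAN entry matches the host name."""
    return any(san_matches(hostname, entry) for entry in san_entries)


if __name__ == "__main__":
    sans = ["*.docker.io", "*.gcr.io", "*.quay.io", "*.elastic.co",
            "docker.io", "gcr.io", "quay.io", "elastic.co"]
    for host in ("k8s.gcr.io", "gcr.io", "registry-1.docker.io", "docker.elastic.co"):
        print(host, covered(host, sans))
```

Under this model a name with two extra labels, such as a.b.gcr.io, would not be covered by the certificate.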

nginx conf

Add the domain items into registry.conf:

upstream docker-registry {

server registry:5000;

}

server {

listen 443;

server_name docker.io gcr.io quay.io elastic.co;

# SSL

ssl on;

ssl_certificate /etc/nginx/conf.d/server.crt;

ssl_certificate_key /etc/nginx/conf.d/server.key;

dns configuration

Install bind9 on Ubuntu, or dnsmasq on a CentOS system. Taking bind9 as the example, add the following zone files:

# ls db*

db.docker.io db.gcr.io db.quay.io

The named.conf.default-zones file is as follows:

# cat named.conf.default-zones

// prime the server with knowledge of the root servers

zone "." {

type hint;

file "/etc/bind/db.root";

};

// be authoritative for the localhost forward and reverse zones, and for

// broadcast zones as per RFC 1912

zone "localhost" {

type master;

file "/etc/bind/db.local";

};

zone "127.in-addr.arpa" {

type master;

file "/etc/bind/db.127";

};

zone "0.in-addr.arpa" {

type master;

file "/etc/bind/db.0";

};

zone "255.in-addr.arpa" {

type master;

file "/etc/bind/db.255";

};

zone "docker.io" {

type master;

file "/etc/bind/db.docker.io";

};

zone "quay.io" {

type master;

file "/etc/bind/db.quay.io";

};

zone "gcr.io" {

type master;

file "/etc/bind/db.gcr.io";

};

zone "elastic.co" {

type master;

file "/etc/bind/db.elastic.co";

};
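The four registry zones at the end of the file all have the same shape, so they can be generated for any additional domain. A minimal sketch, assuming zone files are named db.<domain> under /etc/bind as above (the helper name is illustrative):

```python
def zone_stanza(domain, zone_dir="/etc/bind"):
    """Render a master-zone stanza in the same shape as the entries above."""
    return (
        f'zone "{domain}" {{\n'
        f"    type master;\n"
        f'    file "{zone_dir}/db.{domain}";\n'
        f"}};"
    )


if __name__ == "__main__":
    for name in ("docker.io", "quay.io", "gcr.io", "elastic.co"):
        print(zone_stanza(name))
```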

Taking elastic.co as an example, its zone file looks like this:

# cat db.elastic.co

$TTL 604800

@ IN SOA elastic.co. root.localhost. (

1 ; Serial

604800 ; Refresh

86400 ; Retry

2419200 ; Expire

604800 ) ; Negative Cache TTL

;

@ IN NS localhost.

@ IN A 192.168.122.154

elastic.co IN NS 192.168.122.154

docker IN A 192.168.122.154

Using the docker registry

Point the nodes' DNS server at your registry node, so that pinging elastic.co gets a reply from 192.168.122.154.

Then add the certificate to the CA store that docker trusts:

# cp server.crt /usr/local/share/ca-certificates

# update-ca-certificates

# systemctl restart docker

# docker login -u dddd -p gggg docker.io

# docker login -u dddd -p gggg quay.io

# docker login -u dddd -p gggg gcr.io

# docker login -u dddd -p gggg k8s.gcr.io

Now you can freely push and pull images from the offline registry server, as if you were on the Internet.
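Which host names must resolve to the offline registry depends on the image references in use. The sketch below applies docker's usual naming rule (the first path component is a registry only if it looks like a host name, otherwise the image lives on docker.io) to a list of references; the function names are illustrative:

```python
def registry_host(image_ref, default="docker.io"):
    """Return the registry host name that an image reference points at."""
    first, slash, _rest = image_ref.partition("/")
    # The first component counts as a registry only if it looks like a host:
    # it contains '.' or ':' or is exactly 'localhost'.
    if slash and ("." in first or ":" in first or first == "localhost"):
        return first.split(":")[0]
    return default


def registries_needed(image_refs):
    """Sorted set of registry hosts used by a list of image references."""
    return sorted({registry_host(ref) for ref in image_refs})


if __name__ == "__main__":
    refs = ["nginx:1.13", "quay.io/calico/node:v3.1.3",
            "k8s.gcr.io/pause:3.1", "weaveworks/weave-kube:2.5.0"]
    print(registries_needed(refs))
```

Every host in the result needs a DNS record pointing at the registry node and must be covered by a name in the certificate. Pulls for docker.io images actually go to registry-1.docker.io, which the *.docker.io wildcard covers.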

Jan 28, 2019

Upgrade helm

Download helm from GitHub, extract it into a directory on your PATH, then:

# helm init --upgrade

Notice that tiller in your k8s cluster is upgraded as well:

kube-system tiller-deploy-68b77f4c57-dwd47 0/1 ContainerCreating 0 13s

Examine the upgraded version:

# helm version

Client: &version.Version{SemVer:"v2.12.2", GitCommit:"7d2b0c73d734f6586ed222a567c5d103fed435be", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.12.2", GitCommit:"7d2b0c73d734f6586ed222a567c5d103fed435be", GitTreeState:"clean"}

Working Issue

Using stable/prometheus-operator.

Fetch the helm/charts package via:

# helm fetch stable/prometheus-operator

# ls

prometheus-operator-1.9.0.tgz

Deploy it on minikube so that all of the images get pulled, then save them via:

eval $(minikube docker-env)

docker save -o prometheus-operator.tar grafana/grafana:5.4.3 quay.io/prometheus/node-exporter:v0.17.0 quay.io/coreos/prometheus-config-reloader:v0.26.0 quay.io/coreos/prometheus-operator:v0.26.0 quay.io/prometheus/alertmanager:v0.15.3 quay.io/prometheus/prometheus:v2.5.0 kiwigrid/k8s-sidecar:0.0.6 quay.io/coreos/kube-state-metrics:v1.4.0 quay.io/coreos/configmap-reload:v0.0.1

xz prometheus-operator.tar

Then we can write the offline scripts for deploying this chart.