Aug 27, 2019

Steps

The docker-compose.yml is as follows:

version: '2.2'
services:
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:6.1.3
    container_name: elasticsearch
    environment:
      - cluster.name=docker-cluster
      - node.name=coreos-1
      - node.master=true
      - node.data=true
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
      - xpack.security.enabled=false
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - esdata1:/usr/share/elasticsearch/data
      - ./elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
    ports:
      - 192.168.122.31:9200:9200
      - 192.168.122.31:9300:9300
volumes:
  esdata1:
    driver: local

The elasticsearch.yml file is as follows:

network.host: 0.0.0.0

discovery.zen.ping.unicast.hosts: ["192.168.122.31"]

network.publish_host: 192.168.122.31

With these two files in place, docker-compose can be used to set up the cluster.
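
Once the container is up, the node can be checked over the HTTP port published in the compose file. The following is a minimal sketch, not part of the original setup: it assumes Python 3 on a machine that can reach 192.168.122.31:9200, and the helper names are made up for illustration. It queries Elasticsearch's `_cluster/health` endpoint and treats `green` and `yellow` as usable, since `yellow` is common on a single node where replica shards cannot be assigned.

```python
import json
import urllib.request


def health_url(host, port=9200):
    """Build the URL of the Elasticsearch cluster health endpoint."""
    return f"http://{host}:{port}/_cluster/health"


def is_usable(health):
    """Return True when the reported cluster status is green or yellow."""
    return health.get("status") in ("green", "yellow")


def fetch_health(host, port=9200, timeout=5):
    """Fetch and decode the cluster health document (needs a running node)."""
    with urllib.request.urlopen(health_url(host, port), timeout=timeout) as resp:
        return json.load(resp)


# Example call, to be run while the container is up:
# print(is_usable(fetch_health("192.168.122.31")))
```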

Tips

Master and worker:

For a master node, set node.master=true.

For a worker node, set node.master=false.

Aug 22, 2019

AIM

livecd->coreos_install in livecd->Reboot to coreos

Steps

Install cubic on ubuntu 18.04.3:

sudo apt-add-repository ppa:cubic-wizard/release

sudo apt update

sudo apt-get install -y cubic

Download the ubuntu-18.04.3-desktop-amd64.iso, and check its md5sum:

$ md5sum ubuntu-18.04.3-desktop-amd64.iso

72491db7ef6f3cd4b085b9fe1f232345 ubuntu-18.04.3-desktop-amd64.iso
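
The same check can be scripted. This is a small sketch assuming Python 3; `md5_of_file` is an illustrative helper, and the expected value is simply the digest shown in the md5sum output above.

```python
import hashlib


def md5_of_file(path, chunk_size=1 << 20):
    """Compute the MD5 hex digest of a file, reading it in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


# Digest copied from the md5sum output in this post.
EXPECTED = "72491db7ef6f3cd4b085b9fe1f232345"

# Example call, once the ISO has been downloaded:
# assert md5_of_file("ubuntu-18.04.3-desktop-amd64.iso") == EXPECTED
```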

Make the working directory:

Update: the CoreOS install ISO can do the same thing, so this approach was abandoned.

Aug 21, 2019

Prepare

Set up Vagrant VMs and install kubespray via Vagrant.

Additional packages:

# apt-add-repository ppa:ansible/ansible

# sudo apt-get install -y netdata ntpdate ansible python-netaddr bind9 bind9utils ntp nfs-common nfs-kernel-server nethogs iotop

# sudo mkdir /root/static

# cd /var/cache

# find . | grep deb$ | xargs -I % cp % /root/static

# cd /root/static

# dpkg-scanpackages . /dev/null | gzip -9c > Packages.gz

# cd /root/

# tar cJvf 1804debs.tar.xz static/

Later, scp 1804debs.tar.xz into the kube-deploy role.
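
The find/grep/cp step that gathers the cached packages can also be written as a short script. This is a sketch under the assumption that Python 3 is available on the build VM; `collect_debs` is an illustrative name, and building Packages.gz is still left to dpkg-scanpackages.

```python
import shutil
from pathlib import Path


def collect_debs(cache_dir, dest_dir):
    """Copy every *.deb found under cache_dir into dest_dir.

    Returns the list of copied file names, sorted by source path.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    copied = []
    for deb in sorted(Path(cache_dir).rglob("*.deb")):
        shutil.copy2(deb, dest / deb.name)
        copied.append(deb.name)
    return copied


# Example call matching the commands in this post:
# collect_debs("/var/cache", "/root/static")
```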

Modification

# wget https://github.com/projectcalico/calicoctl/releases/download/v3.7.3/calicoctl-linux-amd64

# wget https://github.com/containernetworking/plugins/releases/download/v0.8.1/cni-plugins-linux-amd64-v0.8.1.tgz

Aug 19, 2019

AIM

Deploy a kubespray-based Kubernetes cluster offline.

Problems

- Operating system?

- How to run ansible?

- How to run ssl configurations?

- How to set up a DNS name server that impersonates docker.io/quay.io/gcr.io?

- Scale up/down the cluster?

- Netdata?

- docker-compose based systemd configuration?

Steps

1. Environments

vagrant based coreos OS.

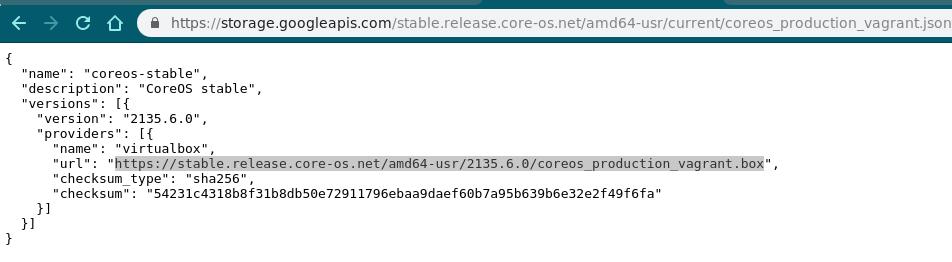

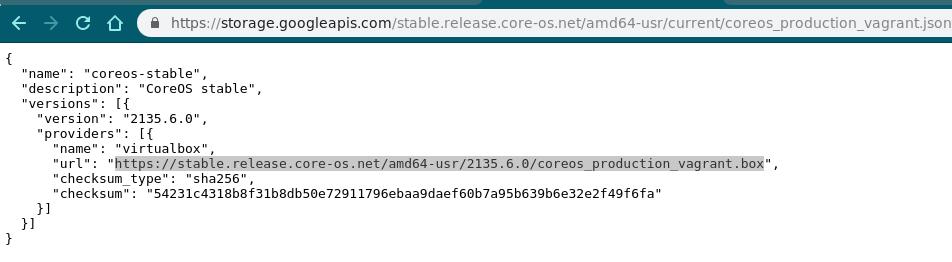

Determine the CoreOS box file from kubespray’s Vagrantfile:

Then download the latest coreos vagrant box via:

# wget https://stable.release.core-os.net/amd64-usr/2135.6.0/coreos_production_vagrant.box

2. dns nameserver

Run dns nameserver in docker.

# sudo docker run --name dnsmasq -d -p 53:53/udp -p 5380:8080 -v /home/core/dnsmasq.conf:/etc/dnsmasq.conf --log-opt "max-size=100m" -e "HTTP_USER=foo" -e "HTTP_PASS=bar" --restart always jpillora/dnsmasq

# cat dnsmasq.conf

#dnsmasq config, for a complete example, see:

# http://oss.segetech.com/intra/srv/dnsmasq.conf

#log all dns queries

log-queries

#dont use hosts nameservers

no-resolv

#use cloudflare as default nameservers, prefer 1^4

server=1.0.0.1

server=1.1.1.1

strict-order

#serve all .company queries using a specific nameserver

server=/company/10.0.0.1

#explicitly define host-ip mappings

address=/myhost.company/10.0.0.2

address=/portus.xxxxx.com/10.142.18.191

address=/gcr.io/10.142.18.191

address=/k8s.gcr.io/10.142.18.191

address=/quay.io/10.142.18.191

address=/elastic.co/10.142.18.191

address=/docker.elastic.co/10.142.18.191

address=/docker.io/10.142.18.191

address=/registry-1.docker.io/10.142.18.191

Later we will configure DNS resolution (/etc/resolv.conf) on the CoreOS side.
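
The `address=` overrides all follow one pattern, so they can be generated instead of typed by hand. This is a minimal sketch: the helper name is made up, and the domain list and target IP are simply the values from the dnsmasq.conf above.

```python
def address_lines(domains, target_ip):
    """Render one dnsmasq 'address=/<domain>/<ip>' line per domain."""
    return [f"address=/{domain}/{target_ip}" for domain in domains]


# Registry names taken from the dnsmasq.conf in this post.
REGISTRIES = [
    "gcr.io",
    "k8s.gcr.io",
    "quay.io",
    "elastic.co",
    "docker.elastic.co",
    "docker.io",
    "registry-1.docker.io",
]

print("\n".join(address_lines(REGISTRIES, "10.142.18.191")))
```

dnsmasq applies an `address=/domain/ip` entry to the domain and its subdomains, so some of these lines overlap; listing them explicitly just keeps the intent visible.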

3. dns client

To configure the client’s DNS name server:

# cat /etc/systemd/resolved.conf

# This file is part of systemd.

#

# systemd is free software; you can redistribute it and/or modify it

# under the terms of the GNU Lesser General Public License as published by

# the Free Software Foundation; either version 2.1 of the License, or

# (at your option) any later version.

#

# Entries in this file show the compile time defaults.

# You can change settings by editing this file.

# Defaults can be restored by simply deleting this file.

#

# See resolved.conf(5) for details

[Resolve]

DNS=10.142.18.191

# sudo systemctl restart systemd-resolved
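
After restarting systemd-resolved it is worth confirming that the registry names now resolve to the local mirror. The sketch below assumes Python 3 on the client; `check_overrides` is an illustrative helper, and the resolver is passed in as a parameter so the logic can be exercised without a network.

```python
import socket


def check_overrides(names, expected_ip, resolve=socket.gethostbyname):
    """Return the names that do not resolve to expected_ip."""
    wrong = []
    for name in names:
        try:
            ip = resolve(name)
        except OSError:
            # Resolution failures (socket.gaierror) count as wrong too.
            ip = None
        if ip != expected_ip:
            wrong.append(name)
    return wrong


# Example call on a configured client:
# print(check_overrides(["gcr.io", "quay.io", "docker.io"], "10.142.18.191"))
```

An empty list means every name points at the mirror.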

4. auto-index file server

The file server can be run from a systemd unit file, as usual.

5. ansible version

Use virtualenv to install ansible 2.7:

# sudo apt install virtualenv

# virtualenv -p python3 env

# source env/bin/activate

# which pip

/media/sda/coreos_kubespray/Rong/env/bin/pip

# pip install ansible==2.7.9

# which ansible

/media/sda/coreos_kubespray/Rong/env/bin/ansible

# ansible --version

ansible 2.7.9

config file = /media/sda/coreos_kubespray/Rong/ansible.cfg

# pip install netaddr

Disks

Mount the esdata disk via:

# vim /etc/systemd/system/media-esdata.mount

[Unit]

Description=Mount cinder volume

Before=docker.service

[Mount]

What=/dev/vdb1

Where=/media/esdata

Options=defaults,noatime,noexec

[Install]

WantedBy=docker.service

# systemctl enable media-esdata.mount

Then create the filesystem on /dev/vdb1; after that the mount point works.
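
systemd requires the name of a mount unit to match its `Where=` path, which is why /media/esdata lives in media-esdata.mount. The sketch below is a simplified stand-in for `systemd-escape --path`, written under the assumption that the path is already normalised (no repeated slashes, no `.` or `..` components); the function name is made up.

```python
def mount_unit_name(mount_point):
    """Derive the systemd mount unit name for a normalised absolute path."""
    trimmed = mount_point.strip("/")
    if not trimmed:
        # The root file system is a special case.
        return "-.mount"
    parts = []
    for index, ch in enumerate(trimmed):
        if ch == "/":
            parts.append("-")
        elif (ch.isascii() and (ch.isalnum() or ch in ":_")) or (ch == "." and index > 0):
            parts.append(ch)
        else:
            # Everything else, including '-', becomes \xXX per byte.
            parts.append("".join(f"\\x{b:02x}" for b in ch.encode("utf-8")))
    return "".join(parts) + ".mount"


print(mount_unit_name("/media/esdata"))
```

On a real host, `systemd-escape --path --suffix=mount /media/esdata` is the authoritative way to get the name.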

Aug 12, 2019

OS Preparation

CentOS 7.6, installed from:

CentOS-7-x86_64-Everything-1810.iso

Download the source code from:

https://gitee.com/xhua/OpenshiftOneClick

Corresponding docker images:

docker.io/redis:5

docker.io/openshift/origin-node:v3.11.0

docker.io/openshift/origin-control-plane:v3.11.0

docker.io/openshift/origin-haproxy-router:v3.11.0

docker.io/openshift/origin-deployer:v3.11.0

docker.io/openshift/origin-pod:v3.11.0

docker.io/rabbitmq:3.7-management

docker.io/mongo:4.1

docker.io/memcached:1.5

quay.io/kubevirt/kubevirt-web-ui-operator:latest

docker.io/xhuaustc/openldap-2441-centos7:latest

quay.io/kubevirt/kubevirt-web-ui:v2.0.0

docker.io/perconalab/pxc-openshift:latest

docker.io/tomcat:8.5-alpine

docker.io/centos/postgresql-95-centos7:latest

docker.io/centos/mysql-57-centos7:latest

docker.io/centos/nginx-112-centos7:latest

docker.io/curiouser/dubbo_zookeeper:v1

docker.io/xhuaustc/logstash:6.6.1

docker.io/xhuaustc/kibana:6.6.1

docker.io/xhuaustc/elasticsearch:6.6.1

docker.io/openshift/jenkins-2-centos7:latest

docker.io/openshift/origin-docker-registry:v3.11.0

docker.io/openshift/jenkins-agent-maven-35-centos7:v4.0

docker.io/openshift/origin-console:v3.11.0

docker.io/sonatype/nexus3:3.14.0

docker.io/gitlab/gitlab-ce:11.4.0-ce.0

docker.io/openshift/origin-web-console:v3.11.0

docker.io/cockpit/kubernetes:latest

docker.io/xhuaustc/apolloportal:latest

docker.io/xhuaustc/apolloconfigadmin:latest

docker.io/xhuaustc/nfs-client-provisioner:latest

docker.io/blackcater/easy-mock:1.6.0

docker.io/perconalab/proxysql-openshift:0.5

docker.io/xhuaustc/selenium:3

docker.io/xhuaustc/zalenium:3

docker.io/xhuaustc/etcd:v3.2.22

docker.io/openshiftdemos/gogs:0.11.34

docker.io/openshiftdemos/sonarqube:6.7

docker.io/xhuaustc/openshift-kafka:latest

docker.io/redis:3.2.3-alpine

docker.io/kubevirt/virt-api:v0.19.0

docker.io/kubevirt/virt-controller:v0.19.0

docker.io/kubevirt/virt-handler:v0.19.0

docker.io/kubevirt/virt-operator:v0.19.0
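
The post does not say how these images reach the offline hosts. One common approach, shown here purely as an assumption, is to pull each image on a connected machine and export it with `docker save`. The sketch only prints the commands; the helper names and the tar file naming scheme are made up.

```python
def archive_name(image):
    """Turn an image reference into a flat tar file name."""
    return image.replace("/", "_").replace(":", "_") + ".tar"


def offline_commands(images):
    """Return docker pull / docker save commands for each image."""
    commands = []
    for image in images:
        commands.append(f"docker pull {image}")
        commands.append(f"docker save -o {archive_name(image)} {image}")
    return commands


# Two entries from the list above, as an example.
for line in offline_commands(["docker.io/redis:5", "quay.io/kubevirt/kubevirt-web-ui:v2.0.0"]):
    print(line)
```

On the offline side the archives would then be imported with `docker load -i <file>`.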

Servers

rpm server

Use the installation ISO as an offline rpm server.

# vim files/all.repo

[openshift]

name=openshift

baseurl=http://192.192.189.1/ocrpmpkgs/

enabled=1

gpgcheck=0

[openshift1]

name=openshift1

baseurl=http://192.192.189.1:8080

enabled=1

gpgcheck=0

Simple https server

Create a new folder and generate pem files under this folder:

# openssl req -new -x509 -keyout server.pem -out server.pem -days 365 -nodes

Common name: ssl.xxxx.com

If the common name is ssl.xxxx.com, the site is then reachable at https://ssl.xxxx.com:4443/index.html.

Write a simple python file for serving https:

# vi simple-https-server.py

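import BaseHTTPServer, SimpleHTTPServer
import ssl

# Bind to all interfaces: with 'localhost' other hosts cannot reach the server.
httpd = BaseHTTPServer.HTTPServer(('0.0.0.0', 4443), SimpleHTTPServer.SimpleHTTPRequestHandler)
# server.pem holds both the private key and the certificate.
httpd.socket = ssl.wrap_socket(httpd.socket, certfile='./server.pem', server_side=True)
httpd.serve_forever()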

# sudo python simple-https-server.py
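
The script above targets Python 2, which is what `python` means on CentOS 7. For reference, here is a rough Python 3 equivalent. It is a sketch rather than what was actually deployed: `make_https_server` is an illustrative name, and TLS is only enabled when a certificate file is passed so that the function can be tried without one.

```python
import http.server
import ssl


def make_https_server(host="0.0.0.0", port=4443, certfile=None):
    """Create a static file server for the current directory.

    When certfile is given, the listening socket is wrapped in TLS.
    The file must contain both the private key and the certificate,
    as server.pem does in this post.
    """
    httpd = http.server.HTTPServer((host, port), http.server.SimpleHTTPRequestHandler)
    if certfile is not None:
        context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
        context.load_cert_chain(certfile)
        httpd.socket = context.wrap_socket(httpd.socket, server_side=True)
    return httpd


# To serve the folder over HTTPS (blocks until interrupted):
# make_https_server(certfile="./server.pem").serve_forever()
```

`ssl.wrap_socket` was removed in Python 3.12, which is why this version goes through `ssl.SSLContext`.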

Server folder content:

# ls

allinone-webconsole.css apollo.png easymock.png kafka.png nexus3.png pxc.png simple-https-server.py zalenium.png

allinone-webconsole.js dubbo.png gogs.png kelk.png openldap.png server.pem sonarqube.svg

# cat allinone-webconsole.css

.icon-gogs{

background-image: url(https://ssl.xxxx.com:4443/gogs.png);

width: 50px;

height: 50px;

background-size: 100% 100%;

}

.icon-sonarqube{

background-image: url(https://ssl.xxxx.com:4443/sonarqube.svg);

width: 80px;

height: 50px;

background-size: 100% 100%;

}

Using Simple https server

Add customized domain name into /etc/hosts:

# vim /etc/hosts

192.192.189.1 ssl.xxxx.com

Add server.pem into the client system:

# yum install -y ca-certificates

# update-ca-trust force-enable

# cp server.pem /etc/pki/ca-trust/source/anchors/ssl.xxxx.com.pem

# update-ca-trust

Deployment

With two nodes, run the following script first:

#!/bin/bash

# SELinux must be enforcing before the installation starts.
setenforce 1
selinux=$(getenforce)
if [ "$selinux" != "Enforcing" ]
then
    echo "Please set SELinux to Enforcing"
    exit 10
fi

# Elasticsearch needs a higher mmap count; persist it and apply it now.
cat >/etc/sysctl.d/99-elasticsearch.conf <<EOF
vm.max_map_count = 262144
EOF
sysctl vm.max_map_count=262144

# Swap the stock yum repos for the offline ones (only on the first run).
export CHANGEREPO=true
if [ "$CHANGEREPO" == true ] && [ ! -d /etc/yum.repos.d/back ]
then
    cd /etc/yum.repos.d/; mkdir -p back; mv -f *.repo back/; cd -
    cp files/all.repo /etc/yum.repos.d/
    yum clean all
fi

current_path=$(pwd)
yum localinstall tools/ansible-2.6.5-1.el7.ans.noarch.rpm -y
ansible-playbook playbook.yml --skip-tags after_task
cd "$current_path/openshift-ansible-playbook"
ansible-playbook playbooks/prerequisites.yml
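
Before moving on, the kernel setting applied by the script can be verified on each node. This is a small sketch assuming Python 3 on the node; the function name is made up, and the path is a parameter only so the check can be tried against a test file.

```python
REQUIRED_MAP_COUNT = 262144  # value written by the script above


def max_map_count_ok(path="/proc/sys/vm/max_map_count", required=REQUIRED_MAP_COUNT):
    """Return True when the current vm.max_map_count is at least `required`."""
    with open(path) as handle:
        return int(handle.read().strip()) >= required


# Example call on a node:
# print(max_map_count_ok())
```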

Configuration of Master node’s config.yml:

---

CHANGEREPO: true

HOSTNAME: os311.test.it.example.com

Configuration of Worker node’s config.yml:

---

CHANGEREPO: true

HOSTNAME: os312.test.it.example.com

Then add the following lines to /etc/hosts:

192.192.189.128 os311.test.it.example.com

192.192.189.129 os312.test.it.example.com

192.192.189.1 ssl.xxxx.com

Then, on the master node, replace /etc/ansible/hosts with our pre-defined one:

.....

openshift_web_console_extension_script_urls=["https://ssl.xxxx.com:4443/allinone-webconsole.js"]

openshift_web_console_extension_stylesheet_urls=["https://ssl.xxxx.com:4443/allinone-webconsole.css"]

......

openshift_disable_check=memory_availability,disk_availability,package_availability,package_update,docker_image_availability,docker_storage_driver,docker_storage,package_version

.......

openshift_node_groups=[{'name': 'node-config-all-in-one', 'labels': ['node-role.kubernetes.io/master=true', 'node-role.kubernetes.io/infra=true']}, {'name': 'node-config-compute', 'labels': ['node-role.kubernetes.io/compute=true']}]

.......

[masters]

os311.test.it.example.com

[etcd]

os311.test.it.example.com

[nfs]

os311.test.it.example.com

[nodes]

os311.test.it.example.com openshift_node_group_name="node-config-all-in-one"

os312.test.it.example.com openshift_node_group_name='node-config-compute'

Now run deployment:

current_path=`pwd`

cd $current_path/openshift-ansible-playbook

ansible-playbook playbooks/prerequisites.yml

ansible-playbook -vvvv playbooks/deploy_cluster.yml

oc adm policy add-cluster-role-to-user cluster-admin admin

cd $current_path

ansible-playbook playbook.yml --tags install_nfs

ansible-playbook playbook.yml --tags after_task

After deployment, check the status via:

[root@os311 OpenshiftOneClick]# oc get nodes

NAME STATUS ROLES AGE VERSION

os311.test.it.example.com Ready infra,master 3d v1.11.0+d4cacc0

os312.test.it.example.com Ready compute 3d v1.11.0+d4cacc0
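
This check is easy to automate by parsing the table that `oc get nodes` prints. The sketch below assumes the default column layout shown above; `not_ready_nodes` is an illustrative helper and takes the command output as a string, so how the output is captured is left open.

```python
def not_ready_nodes(output):
    """Return the names of nodes whose STATUS column is not exactly 'Ready'."""
    rows = [line.split() for line in output.strip().splitlines()]
    header, body = rows[0], rows[1:]
    name_col = header.index("NAME")
    status_col = header.index("STATUS")
    return [row[name_col] for row in body if row[status_col] != "Ready"]


SAMPLE = """
NAME                        STATUS    ROLES          AGE       VERSION
os311.test.it.example.com   Ready     infra,master   3d        v1.11.0+d4cacc0
os312.test.it.example.com   Ready     compute        3d        v1.11.0+d4cacc0
"""

print(not_ready_nodes(SAMPLE))
```

A node shown as `Ready,SchedulingDisabled` is reported as not ready by this strict comparison, which may or may not be what is wanted.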

kube-virt

Deploy kubevirt with the following steps:

# kubectl apply -f kubevirt-operator.yaml

# kubectl apply -f kubevirt-cr.yaml

Deploy the UI:

# cd web-ui-operator-master

# oc new-project kubevirt-web-ui

# cd deploy

# oc apply -f service_account.yaml

# oc apply -f role.yaml

# oc apply -f role_binding.yaml

# oc create -f crds/kubevirt_v1alpha1_kwebui_crd.yaml

# oc apply -f operator.yaml

# oc apply -f crds/kubevirt_v1alpha1_kwebui_cr.yaml

DNS setting

Use the following steps:

# vim /etc/dnsmasq.d/origin-dns.conf

address=/os311.test.it.example.com/192.192.189.128

# systemctl daemon-reload

# systemctl restart dnsmasq

Create vm

Modify the VM definition file as follows:

apiVersion: kubevirt.io/v1alpha3
kind: VirtualMachine
metadata:
  name: testvm
spec:
  running: false
  template:
    metadata:
      labels:
        kubevirt.io/size: small
        kubevirt.io/domain: testvm
    spec:
      domain:
        devices:
          disks:
            - name: containerdisk
              disk:
                bus: virtio
            - name: cloudinitdisk
              disk:
                bus: virtio
          interfaces:
            - name: default
              bridge: {}
        resources:
          requests:
            memory: 64M
      networks:
        - name: default
          pod: {}
      volumes:
        - name: containerdisk
          containerDisk:
            image: kubevirt/cirros-registry-disk-demo
            imagePullPolicy: IfNotPresent
        - name: cloudinitdisk
          cloudInitNoCloud:
            userDataBase64: SGkuXG4=
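
The `userDataBase64` field is plain base64 of the cloud-init user data. The value above decodes to `Hi.` followed by a literal backslash and the letter `n`. A short sketch for producing or inspecting such values, with illustrative helper names:

```python
import base64


def encode_user_data(text):
    """Encode cloud-init user data for the userDataBase64 field."""
    return base64.b64encode(text.encode("utf-8")).decode("ascii")


def decode_user_data(value):
    """Decode a userDataBase64 value back to text."""
    return base64.b64decode(value).decode("utf-8")


# repr() makes the literal backslash visible.
print(repr(decode_user_data("SGkuXG4=")))
```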

Now the VMs can be launched. Note that the images have to be pulled manually:

# sudo docker pull kubevirt/cirros-registry-disk-demo

# sudo docker pull index.docker.io/kubevirt/virt-launcher:v0.19.0

# sudo docker pull kubevirt/virt-launcher:v0.19.0