Jan 6, 2020

Technology

Check

A cluster whose certificates are valid for one year and are about to expire:

root@node:/home/test# cd /etc/kubernetes/ssl

root@node:/etc/kubernetes/ssl# for i in `ls *.crt`; do openssl x509 -in $i -noout -dates; done | grep notAfter

notAfter=Jun 13 02:57:27 2020 GMT

notAfter=Jun 13 02:57:28 2020 GMT

notAfter=Jun 11 02:57:27 2029 GMT

notAfter=Jun 11 02:57:28 2029 GMT

notAfter=Jun 13 02:57:29 2020 GMT
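The `notAfter` lines above are easier to act on as a number of days remaining. The following is a minimal sketch, not part of the original procedure. It assumes GNU `date` (for `-d`), and the helper names `days_left` and `report_certs` are hypothetical. `openssl x509 -enddate` prints the same `notAfter=` line as the loop above.

```shell
#!/bin/sh
# days_left NOT_AFTER [REFERENCE]
# Prints the whole days between REFERENCE (default: now) and NOT_AFTER.
# A negative number means the certificate has already expired.
# Assumes GNU date, which accepts openssl's "Jun 13 02:57:27 2020 GMT" format.
days_left() (
    end=$(date -u -d "$1" +%s) || exit 1
    ref=$(date -u -d "${2:-now}" +%s) || exit 1
    echo $(( (end - ref) / 86400 ))
)

# report_certs DIR: one line per certificate, "<file> <days left>".
report_certs() (
    for crt in "$1"/*.crt; do
        [ -e "$crt" ] || continue
        not_after=$(openssl x509 -in "$crt" -noout -enddate | cut -d= -f2)
        printf '%s %s\n' "$crt" "$(days_left "$not_after")"
    done
)
```

Calling `report_certs /etc/kubernetes/ssl` would list every certificate in that directory. For the first `notAfter` value above, measured from January 6, 2020, `days_left "Jun 13 02:57:27 2020 GMT" "Jan 6 00:00:00 2020 GMT"` prints 159.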

Get the cluster information:

# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master-1 Ready master 206d v1.12.4 192.192.185.63 <none> Ubuntu 16.04.4 LTS 4.4.0-116-generic docker://18.6.1

k8s-master-2 Ready node 89d v1.12.4 192.192.185.64 <none> Ubuntu 16.04.4 LTS 4.4.0-116-generic docker://18.6.1

k8s-node-1 Ready node 111d v1.12.4 192.192.185.65 <none> Ubuntu 16.04.6 LTS 4.15.0-45-generic docker://18.6.1

k8s-node-2 Ready node 206d v1.12.4 192.192.185.66 <none> Ubuntu 16.04.4 LTS 4.4.0-116-generic docker://18.6.1

k8s-node-3 Ready node 201d v1.12.4 192.192.189.61 <none> Ubuntu 16.04.4 LTS 4.4.0-116-generic docker://18.6.1

Renew the apiserver certificate:

# kubeadm alpha phase certs renew apiserver --config=/etc/kubernetes/kubeadm-config.v1alpha3.yaml

# for i in `ls *.crt`; do openssl x509 -in $i -noout -dates; done | grep notAfter

notAfter=Jun 13 02:57:27 2020 GMT

notAfter=Jan 5 09:30:17 2021 GMT

notAfter=Jun 11 02:57:27 2029 GMT

notAfter=Jun 11 02:57:28 2029 GMT

notAfter=Jun 13 02:57:29 2020 GMT

# cd /etc/kubernetes/

# ln -s ssl pki

# kubeadm alpha phase certs renew apiserver --config=/etc/kubernetes/kubeadm-config.v1alpha3.yaml --cert-dir=/etc/kubernetes/ssl

# kubeadm alpha phase certs renew apiserver-kubelet-client --config=/etc/kubernetes/kubeadm-config.v1alpha3.yaml --cert-dir=/etc/kubernetes/ssl

# kubeadm alpha phase certs renew front-proxy-client --config=/etc/kubernetes/kubeadm-config.v1alpha3.yaml --cert-dir=/etc/kubernetes/ssl
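The three renew commands above differ only in the certificate name, so they can be driven from a loop. This is a sketch under assumptions: the function name `renew_certs` and the `DRY_RUN` switch are hypothetical, while the kubeadm command line is copied from the commands above. By default it only prints what it would run.

```shell
#!/bin/sh
# Config file and certificate directory are the ones used above.
CONFIG=/etc/kubernetes/kubeadm-config.v1alpha3.yaml
CERT_DIR=/etc/kubernetes/ssl

# renew_certs: prints the commands by default; set DRY_RUN=0 to execute them.
renew_certs() {
    for name in apiserver apiserver-kubelet-client front-proxy-client; do
        set -- kubeadm alpha phase certs renew "$name" \
            "--config=$CONFIG" "--cert-dir=$CERT_DIR"
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "$*"
        else
            "$@" || return 1
        fi
    done
}
```

Running `renew_certs` shows the three commands for review; `DRY_RUN=0 renew_certs` executes them and stops at the first failure.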

root@node:/etc/kubernetes/ssl# !2036

for i in `ls *.crt`; do openssl x509 -in $i -noout -dates; done | grep notAfter

notAfter=Jan 5 09:36:10 2021 GMT

notAfter=Jan 5 09:30:46 2021 GMT

notAfter=Jun 11 02:57:27 2029 GMT

notAfter=Jun 11 02:57:28 2029 GMT

notAfter=Jan 5 09:37:14 2021 GMT

kubeadm alpha phase kubeconfig all --apiserver-advertise-address=192.192.185.63

Jan 6, 2020

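Technology

Environment

IP address: 10.0.2.15

Hostname: node1

The cluster was deployed on January 6, 2020. Certificates signed by kubeadm are valid for one year.

After the virtual machine's clock was manually set to March 3, 2021, the certificates had expired and kubelet could no longer start.

Problem

Once the kubeadm-signed certificates expire, the kubelet process fails to start: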

root@node1:/etc/kubernetes/ssl# systemctl status kubelet

● kubelet.service - Kubernetes Kubelet Server

Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; vendor preset: enabled)

Active: activating (auto-restart) (Result: exit-code) since Tue 2021-03-02 16:04:46 UTC; 5s ago

Docs: https://github.com/GoogleCloudPlatform/kubernetes

Process: 2001 ExecStart=/usr/local/bin/kubelet $KUBE_LOGTOSTDERR $KUBE_LOG_LEVEL $KUBELET_API_SERVER $KUBELET_ADDRESS $KUBELET_PORT $KUBELET_HOSTNAME $KUBE_

Process: 1995 ExecStartPre=/bin/mkdir -p /var/lib/kubelet/volume-plugins (code=exited, status=0/SUCCESS)

Main PID: 2001 (code=exited, status=255)

Mar 02 16:04:46 node1 kubelet[2001]: I0302 16:04:46.446479 2001 feature_gate.go:206] feature gates: &{map[]}

Mar 02 16:04:46 node1 kubelet[2001]: I0302 16:04:46.466612 2001 mount_linux.go:179] Detected OS with systemd

Mar 02 16:04:46 node1 kubelet[2001]: I0302 16:04:46.466684 2001 server.go:408] Version: v1.12.3

Mar 02 16:04:46 node1 kubelet[2001]: I0302 16:04:46.466827 2001 feature_gate.go:206] feature gates: &{map[]}

Mar 02 16:04:46 node1 kubelet[2001]: I0302 16:04:46.466945 2001 feature_gate.go:206] feature gates: &{map[]}

Mar 02 16:04:46 node1 kubelet[2001]: I0302 16:04:46.467156 2001 plugins.go:99] No cloud provider specified.

Mar 02 16:04:46 node1 kubelet[2001]: I0302 16:04:46.467192 2001 server.go:524] No cloud provider specified: "" from the config file: ""

Mar 02 16:04:46 node1 kubelet[2001]: E0302 16:04:46.474463 2001 bootstrap.go:205] Part of the existing bootstrap client certificate is expired: 2021-01-05

Mar 02 16:04:46 node1 kubelet[2001]: I0302 16:04:46.474487 2001 bootstrap.go:61] Using bootstrap kubeconfig to generate TLS client cert, key and kubeconfig

Mar 02 16:04:46 node1 kubelet[2001]: F0302 16:04:46.474525 2001 server.go:262] failed to run Kubelet: unable to load bootstrap kubeconfig: stat /etc/kubern

Resolution steps

Create a symbolic link for the pki directory:

# cd /etc/kubernetes

# ln -s ssl pki

Back up the original (expired) certificates and keys:

# sudo mv /etc/kubernetes/pki/apiserver.key /etc/kubernetes/pki/apiserver.key.old

# sudo mv /etc/kubernetes/pki/apiserver.crt /etc/kubernetes/pki/apiserver.crt.old

# sudo mv /etc/kubernetes/pki/apiserver-kubelet-client.crt /etc/kubernetes/pki/apiserver-kubelet-client.crt.old

# sudo mv /etc/kubernetes/pki/apiserver-kubelet-client.key /etc/kubernetes/pki/apiserver-kubelet-client.key.old

# sudo mv /etc/kubernetes/pki/front-proxy-client.crt /etc/kubernetes/pki/front-proxy-client.crt.old

# sudo mv /etc/kubernetes/pki/front-proxy-client.key /etc/kubernetes/pki/front-proxy-client.key.old
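The six `mv` commands above follow one pattern, which a small helper can capture. This is a sketch only; the name `backup_old` is hypothetical. It renames each listed file to `<name>.old` and skips files that are not present.

```shell
#!/bin/sh
# backup_old DIR NAME...: rename DIR/NAME to DIR/NAME.old for each NAME.
# Missing files are skipped with a warning on stderr.
backup_old() (
    dir=$1
    shift
    for name in "$@"; do
        if [ -e "$dir/$name" ]; then
            mv -- "$dir/$name" "$dir/$name.old" || exit 1
        else
            echo "skip: $dir/$name not found" >&2
        fi
    done
)
```

For the certificates in this post it could be called as `backup_old /etc/kubernetes/pki apiserver.crt apiserver.key apiserver-kubelet-client.crt apiserver-kubelet-client.key front-proxy-client.crt front-proxy-client.key`.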

Create new certificates and keys for apiserver, apiserver-kubelet-client, and front-proxy-client (10.0.2.15 is the IP address of the K8S host):

# sudo kubeadm alpha phase certs apiserver --apiserver-advertise-address 10.0.2.15

# sudo kubeadm alpha phase certs apiserver-kubelet-client

# sudo kubeadm alpha phase certs front-proxy-client

Back up the original (expired) configuration files:

# sudo mv /etc/kubernetes/admin.conf /etc/kubernetes/admin.conf.old

# sudo mv /etc/kubernetes/kubelet.conf /etc/kubernetes/kubelet.conf.old

# sudo mv /etc/kubernetes/controller-manager.conf /etc/kubernetes/controller-manager.conf.old

# sudo mv /etc/kubernetes/scheduler.conf /etc/kubernetes/scheduler.conf.old

Create new configuration files (mind the IP address and hostname):

# kubeadm alpha phase kubeconfig all --apiserver-advertise-address 10.0.2.15 --node-name node1

Update the kubeconfig so that kubectl uses the newly generated configuration file:

# su root

# mv ~/.kube/config ~/.kube/config.old

# cp -i /etc/kubernetes/admin.conf ~/.kube/config

# chmod 600 ~/.kube/config

# chown root:root /root/.kube/config
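The kubeconfig update above can be collapsed into one helper. This is a hedged sketch: the name `install_kubeconfig` is hypothetical, and mode 600 is my own assumption, chosen because admin.conf carries cluster-admin credentials and owner-only access seems prudent. It keeps the previous file as `config.old`, as the steps above do.

```shell
#!/bin/sh
# install_kubeconfig SRC [DEST]
# Copies SRC to DEST (default: ~/.kube/config) with mode 600,
# keeping any previous DEST as DEST.old.
install_kubeconfig() (
    src=$1
    dest=${2:-$HOME/.kube/config}
    mkdir -p "$(dirname "$dest")" || exit 1
    if [ -e "$dest" ]; then
        mv -- "$dest" "$dest.old" || exit 1
    fi
    # install(1) copies the file and sets its mode in one step.
    install -m 600 "$src" "$dest"
)
```

Run as root, `install_kubeconfig /etc/kubernetes/admin.conf` would write `/root/.kube/config`, owned by root.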

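Now reboot the whole machine so that the new configuration takes effect.

Verification

Check that the certificates were renewed (in this simulated environment the local time is March 2, 2021, and the renewed certificates are valid until March 2, 2022):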

root@node1:/etc/kubernetes/ssl# for i in `ls *.crt`; do openssl x509 -in $i -noout -dates; done | grep notAfter

notAfter=Mar 2 16:11:50 2022 GMT

notAfter=Mar 2 16:12:11 2022 GMT

notAfter=Jan 3 14:12:03 2030 GMT

notAfter=Jan 3 14:12:04 2030 GMT

notAfter=Mar 2 16:12:20 2022 GMT

root@node1:/etc/kubernetes/ssl#

root@node1:/etc/kubernetes/ssl# date

Tue Mar 2 16:23:08 UTC 2021

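Check the kubelet service and the cluster status (because the clock was faked, the cluster appears to have been running for more than 400 days):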

# systemctl status kubelet

● kubelet.service - Kubernetes Kubelet Server

Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; vendor preset: enabled)

Active: active (running) since Tue 2021-03-02 16:21:21 UTC; 42s ago

Docs: https://github.com/GoogleCloudPlatform/kubernetes

Process: 1409 ExecStartPre=/bin/mkdir -p /var/lib/kubelet/volume-plugins (code=exited, status=0/SUCCESS)

Main PID: 1415 (kubelet)

Tasks: 0

Memory: 113.4M

CPU: 1.869s

CGroup: /system.slice/kubelet.service

‣ 1415 /usr/local/bin/kubelet --logtostderr=true --v=2 --address=0.0.0.0 --node-ip=10.0.2.15 --hostname-override=node1 --allow-privileged=true --bo

Mar 02 16:22:02 node1 kubelet[1415]: 2021-03-02 16:22:02.661 [INFO][2900] ipam.go 1002: Releasing all IPs with handle 'cni0.3f1cc2ef7ca9265d9803d6338d02d08dcc

Mar 02 16:22:02 node1 kubelet[1415]: 2021-03-02 16:22:02.663 [INFO][2900] ipam.go 1021: Querying block so we can release IPs by handle cidr=10.233.102.128/26

Mar 02 16:22:02 node1 kubelet[1415]: 2021-03-02 16:22:02.668 [INFO][2900] ipam.go 1041: Block has 1 IPs with the given handle cidr=10.233.102.128/26 handle="c

Mar 02 16:22:02 node1 kubelet[1415]: 2021-03-02 16:22:02.668 [INFO][2900] ipam.go 1063: Updating block to release IPs cidr=10.233.102.128/26 handle="cni0.3f1c

Mar 02 16:22:02 node1 kubelet[1415]: 2021-03-02 16:22:02.671 [INFO][2900] ipam.go 1076: Successfully released IPs from block cidr=10.233.102.128/26 handle="cn

Mar 02 16:22:02 node1 kubelet[1415]: 2021-03-02 16:22:02.719 [INFO][2900] calico-ipam.go 267: Released address using handleID ContainerID="3f1cc2ef7ca9265d980

Mar 02 16:22:02 node1 kubelet[1415]: 2021-03-02 16:22:02.719 [INFO][2900] calico-ipam.go 276: Releasing address using workloadID ContainerID="3f1cc2ef7ca9265d

Mar 02 16:22:02 node1 kubelet[1415]: 2021-03-02 16:22:02.720 [INFO][2900] ipam.go 1002: Releasing all IPs with handle 'kube-system.kubernetes-dashboard-5db4d9

Mar 02 16:22:02 node1 kubelet[1415]: 2021-03-02 16:22:02.807 [INFO][2763] k8s.go 358: Cleaning up netns ContainerID="3f1cc2ef7ca9265d9803d6338d02d08dcc2e0e6ac

Mar 02 16:22:02 node1 kubelet[1415]: 2021-03-02 16:22:02.807 [INFO][2763] k8s.go 370: Teardown processing complete. ContainerID="3f1cc2ef7ca9265d9803d6338d02d

root@node1:/home/vagrant# kubectl get nodes

NAME STATUS ROLES AGE VERSION

node1 Ready master,node 421d v1.12.5

Jan 3, 2020

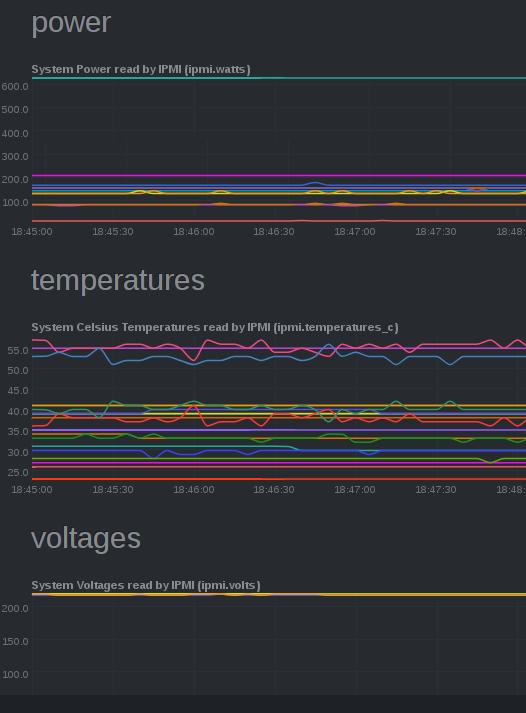

Technology

Prerequisites

Build netdata from source and generate the deb/rpm packages.

Install the following packages:

lm-sensors libsensors4 libsensors4-dev freeipmi-tools freeipmi libfreeipmi16

Configuration

Enable the freeipmi plugin:

# vim /etc/netdata/netdata.conf

[plugins]

#cgroups = no

freeipmi = yes

[plugin:freeipmi]

update every = 5

command options =

Set the setuid bit on the plugin file so that the freeipmi plugin can run when started by the netdata user:

# chmod u+s /usr/libexec/netdata/plugins.d/freeipmi.plugin
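Before restarting netdata it can help to confirm that the setuid bit is actually set. A small sketch using the shell's standard `-u` file test; the function name `has_setuid` is hypothetical:

```shell
#!/bin/sh
# has_setuid FILE: succeeds (exit status 0) only if FILE exists
# and has its setuid bit set.
has_setuid() {
    [ -u "$1" ]
}
```

For example, `has_setuid /usr/libexec/netdata/plugins.d/freeipmi.plugin && echo ok` prints `ok` only when the `chmod u+s` above has taken effect.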

Now restart the netdata service; the IPMI charts should appear on the dashboard.

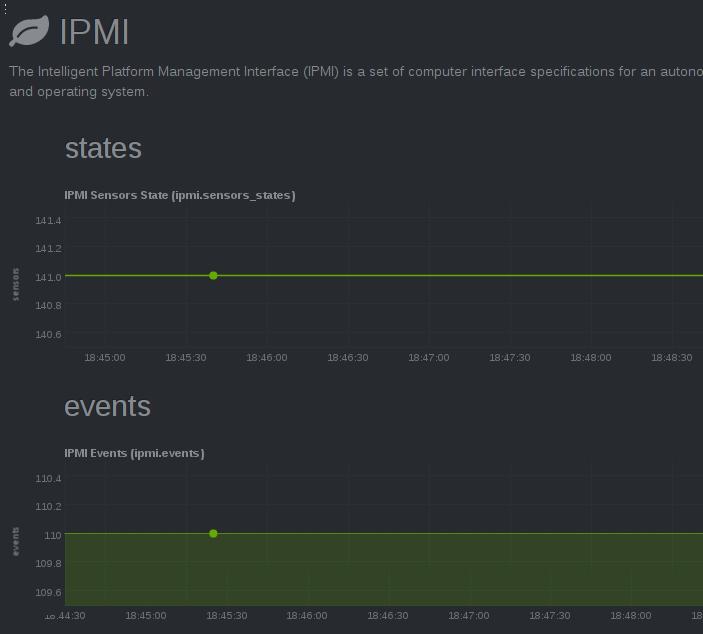

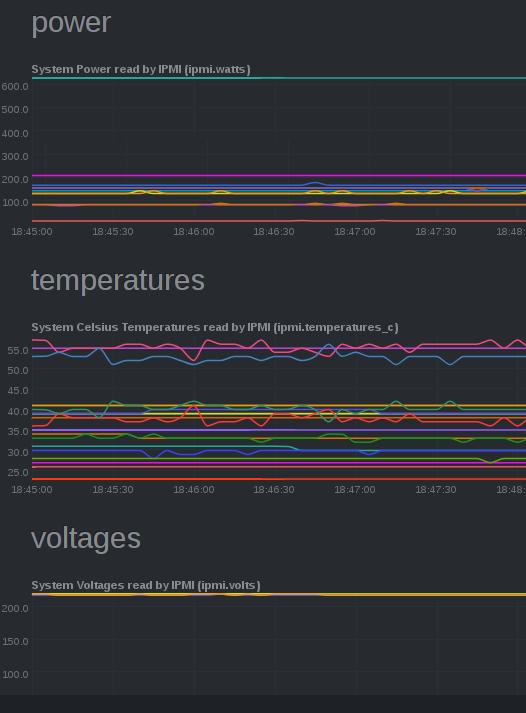

Detailed charts:


Jan 2, 2020

Technology

Gitlab

Set up GitLab using docker-compose from:

https://github.com/sameersbn/docker-gitlab

Changes to the docker-compose.yml:

redis:
  volumes:
    - ./redis-data:/var/lib/redis:Z

postgresql:
  volumes:
    - ./postgresql-data:/var/lib/postgresql:Z

gitlab:
  ports:
    - "10080:80"
    - "10022:22"
  volumes:
    - ./gitlab-data:/home/git/data:Z

Write a systemd file for controlling the docker-composed gitlab:

# vim /etc/systemd/system/gitlab.service

[Unit]

Description=gitlab

Requires=docker.service

After=docker.service

[Service]

WorkingDirectory=/media/sdb/gitlab

Type=idle

Restart=always

# Remove old container items

ExecStartPre=/usr/bin/docker-compose -f /media/sdb/gitlab/docker-compose.yml down

# Compose up

ExecStart=/usr/bin/docker-compose -f /media/sdb/gitlab/docker-compose.yml up

# Compose stop

ExecStop=/usr/bin/docker-compose -f /media/sdb/gitlab/docker-compose.yml stop

[Install]

WantedBy=multi-user.target

# systemctl enable gitlab && systemctl start gitlab
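The unit above depends only on a service name and the compose project directory, so the same layout can be generated for any docker-compose project. This is a sketch; the function name `compose_unit` is hypothetical, and the unit text mirrors the file above.

```shell
#!/bin/sh
# compose_unit NAME DIR: print a systemd unit for the compose project in DIR.
compose_unit() (
    name=$1
    dir=$2
    compose="/usr/bin/docker-compose -f $dir/docker-compose.yml"
    cat <<EOF
[Unit]
Description=$name
Requires=docker.service
After=docker.service

[Service]
WorkingDirectory=$dir
Type=idle
Restart=always
# Remove old container items
ExecStartPre=$compose down
# Compose up
ExecStart=$compose up
# Compose stop
ExecStop=$compose stop

[Install]
WantedBy=multi-user.target
EOF
)
```

`compose_unit gitlab /media/sdb/gitlab > /etc/systemd/system/gitlab.service` would reproduce the file shown above; run `systemctl daemon-reload` after writing a new unit.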

gitlab-runner

Install gitlab-runner via binary:

# wget https://gitlab-runner-downloads.s3.amazonaws.com/latest/binaries/gitlab-runner-linux-amd64

# mv gitlab-runner-linux-amd64 /usr/bin/gitlab-runner

# chmod +x /usr/bin/gitlab-runner

# sudo useradd --comment 'GitLab Runner' --create-home gitlab-runner --shell /bin/bash

# mkdir -p /media/sdb/gitlab-runner

# chmod 777 -R /media/sdb/gitlab-runner

# sudo gitlab-runner install --user=root --working-directory=/media/sdb/gitlab-runner

# sudo gitlab-runner start

Then run gitlab-runner register and enter the URL and registration token of the GitLab instance.

The repository contains the following files and CI configuration:

# ls main.tf cloud_init.cfg

cloud_init.cfg main.tf

# cat .gitlab-ci.yml

stages:
  - build
  - deploy

build-full-packages:
  stage: build
  # only GitLab runners registered with this tag pick up the job
  tags:
    - xxxxxxcitag
  script:
    - date>time.txt
    - whoami
    - wget -q -O staticfiles.zip http://xxxxxx:10388/s/8MXHiafPeWABsB7/download
    - ./extract.sh
    - rm -f staticfiles.zip
    - rm -rf Rong_Kubesphere_Static/
    - which terraform
    - terraform init
    - terraform plan
    - terraform apply -auto-approve
    - ansible-playbook -i /etc/ansible/terraform.py 0_preinstall/init.yml -b --flush-cache
    - ansible-playbook -i /etc/ansible/terraform.py 1_k8s/cluster.yml --extra-vars @kiking-vars.yml --flush-cache
    - ansible-playbook -i /etc/ansible/terraform.py 2_addons/addons.yml --extra-vars @kiking-vars.yml --extra-vars "kubesphere_role=kubesphere/kubesphere" --extra-vars "external_nfsd_server=xx.xx.xxx.166" --extra-vars "external_nfsd_path=/media/md0/nfs/tftmp" --flush-cache
    - date>>time.txt
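The job writes `date` output to time.txt at the start and appends it again at the end, so the file can be used to work out how long the pipeline took. This is a minimal sketch, assuming GNU `date` and timestamps in a format it can parse (the default `date` output in an English or C locale); the function name `elapsed_seconds` is hypothetical.

```shell
#!/bin/sh
# elapsed_seconds FILE: seconds between the first and last timestamp in FILE.
# Assumes each line is the output of `date` and that GNU date can parse it.
elapsed_seconds() (
    start=$(date -d "$(head -n 1 "$1")" +%s) || exit 1
    end=$(date -d "$(tail -n 1 "$1")" +%s) || exit 1
    echo $(( end - start ))
)
```

`elapsed_seconds time.txt` then prints the duration of the job in seconds.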

With this in place, GitLab CI/CD can build the Terraform-provisioned environment on every pipeline run.

Dec 31, 2019

Technology

docker-compose file

File content:

version: '2'

services:
  nextcloud_db:
    image: mariadb
    container_name: nextcloud_db
    command: --transaction-isolation=READ-COMMITTED --binlog-format=ROW
    volumes:
      - ./db:/var/lib/mysql
    environment:
      - MYSQL_ROOT_PASSWORD=mysql12345678
      - MYSQL_PASSWORD=mysql12345678
      - MYSQL_DATABASE=nextcloud
      - MYSQL_USER=nextcloud
    restart: always

  nextcloud:
    image: nextcloud
    container_name: nextcloud_web
    ports:
      - "10388:80"
    environment:
      - UID=1000
      - GID=1000
      - UPLOAD_MAX_SIZE=5G
      - APC_SHM_SIZE=128M
      - OPCACHE_MEM_SIZE=128
      - CRON_PERIOD=15m
      - TZ=Asia/Shanghai
      - NEXTCLOUD_ADMIN_USER=admin
      - NEXTCLOUD_ADMIN_PASSWORD=xxxxxxxxxxxxxxxx
      - NEXTCLOUD_TRUSTED_DOMAINS="*"
      - MYSQL_DATABASE=nextcloud
      - MYSQL_USER=nextcloud
      - MYSQL_PASSWORD=mysql12345678
      - MYSQL_HOST=nextcloud_db
    volumes:
      - ./nextcloud:/var/www/html
    restart: always

systemd file

/etc/systemd/system/nextcloud.service

[Unit]

Description=nextcloud

Requires=docker.service

After=docker.service

[Service]

WorkingDirectory=/media/sdb/nextcloud

Type=idle

Restart=always

# Remove old container items

ExecStartPre=/usr/bin/docker-compose -f /media/sdb/nextcloud/docker-compose.yml down

# Compose up

ExecStart=/usr/bin/docker-compose -f /media/sdb/nextcloud/docker-compose.yml up

# Compose stop

ExecStop=/usr/bin/docker-compose -f /media/sdb/nextcloud/docker-compose.yml stop

[Install]

WantedBy=multi-user.target

Enable and start the service; the Nextcloud server is then available at http://YourIP:10388/. Log in with admin/xxxxxxxx.

Upload and update

Upload the files into the corresponding location, then rescan so that Nextcloud's file cache picks them up:

# chmod 777 -R /media/sdb/nextcloud/nextcloud/data/xxxxx/files/xxxxx_Static/

# docker exec -u www-data nextcloud_web php occ files:scan --all

After the scan, Nextcloud should list the newly uploaded files.