Jun 9, 2020

Create VM

Create vm disks:

# qemu-img create -f qcow2 2004.qcow2 6G

Install the system into this qcow2 file with your customized settings.

Convert

Convert the qcow2 disk into a raw img file:

$ qemu-img convert -f qcow2 -O raw 2004.qcow2 2004.img

Use guestfish to turn the img file into a docker image:

$ sudo guestfish -a 2004.img --ro

><fs> run

><fs> list-filesystems

/dev/sda1: ext4

/dev/sda2: unknown

/dev/sda5: swap

><fs> mount /dev/sda1 /

><fs> tar-out / - | xz --best >> my2004.xz

><fs> exit

$ cat my2004.xz | docker import - your-image-name

Now push it to Docker Hub, so that next time you can pull and use it directly.
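The interactive steps above can also be scripted. Below is a minimal sketch that only builds the command lines, assuming the disk really is qcow2 as created above, that the root filesystem is /dev/sda1 as listed by guestfish, and that guestfish accepts `-m` plus a `tar-out` command on its command line. The function names and the `my2004` image name are placeholders, not part of the original setup.

```python
import shlex


def _join(argv):
    """Quote each argument and join them into one shell command string."""
    return " ".join(shlex.quote(arg) for arg in argv)


def convert_cmd(src="2004.qcow2", dst="2004.img"):
    """qemu-img argv that converts the qcow2 disk into a raw image."""
    return ["qemu-img", "convert", "-f", "qcow2", "-O", "raw", src, dst]


def export_pipeline(img="2004.img", root_dev="/dev/sda1", out="my2004.xz"):
    """Shell pipeline: stream the root filesystem as tar and compress it with xz."""
    # Assumption: non-interactive guestfish mounts root_dev on / via -m.
    guestfish = ["guestfish", "--ro", "-a", img, "-m", root_dev, "tar-out", "/", "-"]
    return f"{_join(guestfish)} | xz --best > {shlex.quote(out)}"


def import_pipeline(archive="my2004.xz", image="my2004"):
    """Shell command that feeds the archive to docker import on stdin."""
    return f"docker import - {shlex.quote(image)} < {shlex.quote(archive)}"


if __name__ == "__main__":
    # Print the commands instead of running them, so they can be reviewed first.
    print(_join(convert_cmd()))
    print(export_pipeline())
    print(import_pipeline())
```

Printing the commands first keeps the sketch safe to run on a machine without qemu-img, guestfish or docker installed.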

May 28, 2020

Netdata

Download the Netdata binary:

https://github.com/netdata/netdata/releases

Choose netdata-v1.22.1.gz.run and install it:

# chmod +x netdata-v1.22.1.gz.run

# ./netdata-v1.22.1.gz.run --accept

# chkconfig netdata on

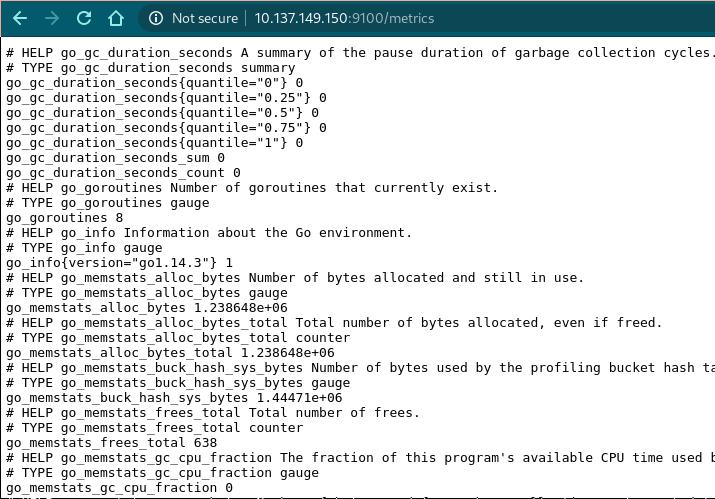

node_exporter

Download the binary:

https://github.com/prometheus/node_exporter/releases

Choose node_exporter-1.0.0.linux-amd64.tar.gz and install it:

# tar xzvf node_exporter-1.0.0.linux-amd64.tar.gz

# cp node_exporter-1.0.0.linux-amd64/node_exporter /usr/bin && chmod 777 /usr/bin/node_exporter

# vim /etc/rc.local

/usr/bin/node_exporter &

# /usr/bin/node_exporter &

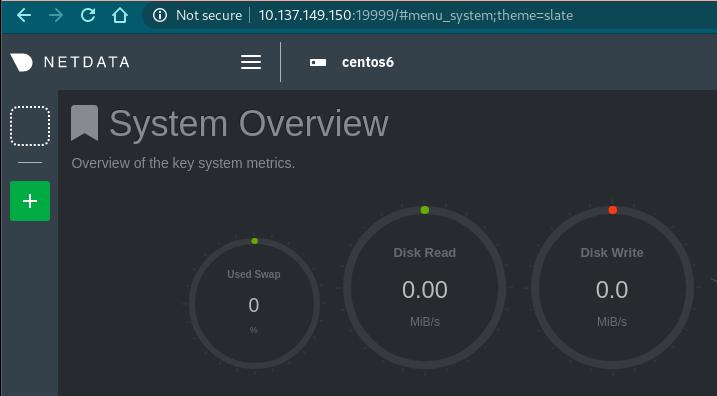

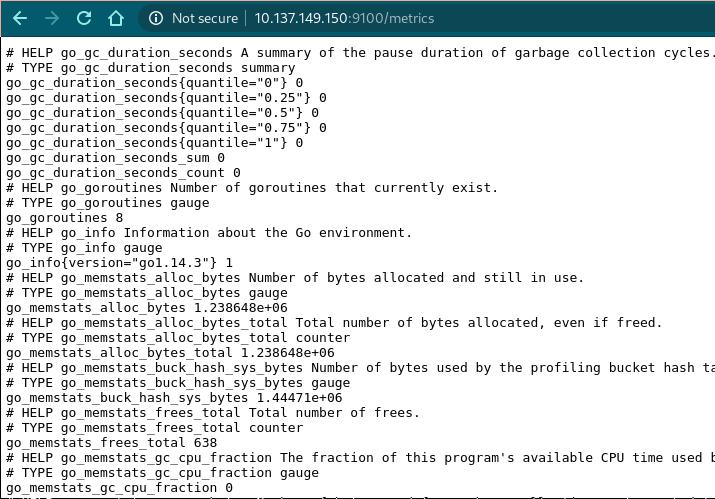

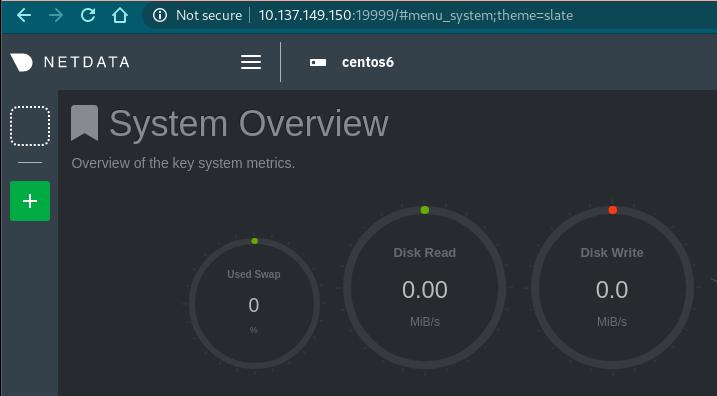

Result

Netdata:

node_exporter:
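To check from a script that node_exporter is really serving metrics, something like the following sketch could be used. It assumes the default listen address http://localhost:9100/metrics and that the output carries no trailing timestamps; the function names are made up for this example.

```python
import urllib.request


def parse_metrics(text):
    """Parse Prometheus text exposition lines into {series: value}."""
    metrics = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and the "# HELP" / "# TYPE" comment lines.
        if not line or line.startswith("#"):
            continue
        # Assumption: the value is the last field (no trailing timestamp).
        series, _, value = line.rpartition(" ")
        try:
            metrics[series] = float(value)
        except ValueError:
            continue  # ignore lines that do not end in a number
    return metrics


def fetch_metrics(url="http://localhost:9100/metrics", timeout=5):
    """Download and parse the metrics page of a running node_exporter."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_metrics(resp.read().decode("utf-8"))


if __name__ == "__main__":
    data = fetch_metrics()
    print(len(data), "series, node_load1 =", data.get("node_load1"))
```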

May 15, 2020

Installing the incremental save tool

Installation steps:

# pip install d-save-last

# docker pull brthornbury/dind-save:18.09

Docker needs a corresponding change so that incremental saves work.

Enable docker's --experimental=true option (ArchLinux as the example; other distributions and versions may differ):

# vim /etc/systemd/system/multi-user.target.wants/docker.service

.....

ExecStart=/usr/bin/dockerd -H fd:// --experimental=true

....

# systemctl daemon-reload

# systemctl restart docker

Native build/save

The result of using the native build/save:

# docker build -t rong/core:v1.17.5 . && docker save -o rongcore.tar rong/core:v1.17.5

# ls -l -h rongcore.tar

-rw------- 1 root root 1.4G May 12 14:45 rongcore.tar

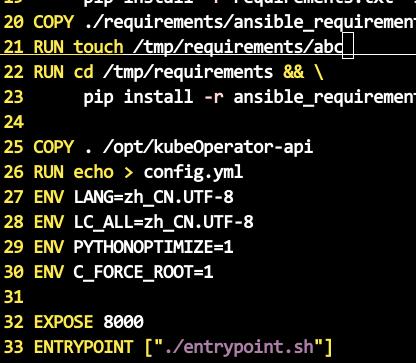

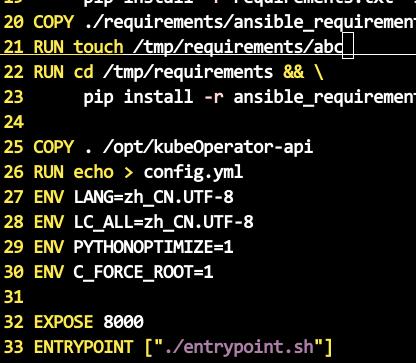

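Dockerfile changes

Add the line RUN touch /tmp/requirements/abc to the Dockerfile. This triggers a new build starting from line 21, while everything before line 21 reuses the existing layers.

Start the build:

# docker build -t rong/core:v1.17.5 --squash .

Save the incremental file: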

# d-save-last rong/core:v1.17.5 -o /mnt6/v2.tar

Running dind-save container...

Running docker save...

Cleaning up...

# ls -l -h /mnt6/v2.tar

-rw------- 1 root root 899M May 15 12:47 /mnt6/v2.tar

Load

When loading v2.tar, only the changed layer is loaded:

# docker load < v2.tar

4beb03d58ef7: Loading layer [==================================================>] 942MB/942MB

The image rong/core:v1.17.5 already exists, renaming the old one with ID sha256:0a0de68c5f49fb7faf63a90719f10dd7749283344a06a73e9ddbc94a81377a8f to empty string

Loaded image: rong/core:v1.17.5
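To see which layers an archive really carries, the manifest.json inside a docker save tar can be compared against the files present in the tar. This is only a sketch: it assumes the usual docker save layout (a manifest.json whose entries have a Layers list), and whether an incremental archive still references the earlier layers in its manifest is an assumption that should be checked on a real file. The function name is hypothetical.

```python
import io
import json
import tarfile


def layer_report(archive):
    """Return (referenced, present) layer paths for a docker save archive.

    archive is either a path or an open binary file object.
    """
    kwargs = {"fileobj": archive} if isinstance(archive, io.IOBase) else {"name": archive}
    with tarfile.open(mode="r:*", **kwargs) as tar:
        names = set(tar.getnames())
        manifest = json.load(tar.extractfile("manifest.json"))
    # Every image entry in the manifest lists its layers in order.
    referenced = [layer for image in manifest for layer in image.get("Layers", [])]
    # A layer counts as present only if its file is really inside the tar.
    present = [layer for layer in referenced if layer in names]
    return referenced, present


if __name__ == "__main__":
    referenced, present = layer_report("/mnt6/v2.tar")
    print(f"{len(present)} of {len(referenced)} referenced layers are in the archive")
```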

May 6, 2020

Vagrant machine

The vagrant machine is created with IP 192.168.121.251, 6 cores and 8192 MB of memory, using the ubuntu18.04.4 base image.

Steps

Configure /etc/apt/sources.list to use a cn mirror, then install pip for python and set up the ansible environment:

# sudo apt-get install -y python-pip

# sudo su

# mkdir -p ~/.pip

# vim ~/.pip/pip.conf

[global]

trusted-host = mirrors.aliyun.com

index-url = http://mirrors.aliyun.com/pypi/simple

# tar xzvf kubespray-2.13.0.tar.gz

# cd kubespray-2.13.0

# pip install -r requirements.txt

Configure the password-less login:

# vim /etc/ssh/sshd_config

PermitRootLogin yes

# systemctl restart sshd

# ssh-keygen

# ssh-copy-id root@192.168.121.251

Make sure your whole networking environment can reach beyond the Great Firewall.

Configure the inventory.ini for deploying:

# vim inventory/sample/inventory.ini

[all]

kubespray ansible_host=192.168.121.251 ip=192.168.121.251

[kube-master]

kubespray

[etcd]

kubespray

[kube-node]

kubespray

[calico-rr]

[k8s-cluster:children]

kube-master

kube-node

calico-rr

# ansible-playbook -i inventory/sample/inventory.ini cluster.yml
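For a single-node setup like this one, the inventory can also be generated instead of edited by hand. A small sketch follows, with the group names taken from the inventory above; the function name and the idea of rendering it from Python are my own additions.

```python
def render_inventory(name, ip):
    """Render a single-node kubespray inventory in INI format."""
    groups = {
        "all": [f"{name} ansible_host={ip} ip={ip}"],
        "kube-master": [name],
        "etcd": [name],
        "kube-node": [name],
        "calico-rr": [],  # left empty, as in the inventory above
        "k8s-cluster:children": ["kube-master", "kube-node", "calico-rr"],
    }
    lines = []
    for group, members in groups.items():
        lines.append(f"[{group}]")
        lines.extend(members)
        lines.append("")  # blank line between groups
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_inventory("kubespray", "192.168.121.251"))
```

Redirecting the output into inventory/sample/inventory.ini gives the same file as the manual edit.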

By now we have all of the offline docker images and almost all of the deb files, but we have to install additional packages for our own offline usage:

# apt-get install -y iputils-ping nethogs python-netaddr build-essential bind9 bind9utils nfs-common nfs-kernel-server ntpdate ntp tcpdump iotop unzip wget apt-transport-https socat rpcbind arping fping python-apt ipset ipvsadm pigz nginx docker-registry

# apt-get install -y ./netdata_1.18.1_amd64_bionic.deb

Transfer all of the offline deb files, put them under the Rong/ directory, and compress it with xz as 1804debs.tar.xz.

Replace the following files (1804debs.tar.xz, kube*, calicoctl/cni-plugin and docker.tar.gz):

# ls

calicoctl gpg kubectl-v1.17.5-amd64

cni-plugins-linux-amd64-v0.8.5.tgz kubeadm-v1.17.5-amd64 kubelet-v1.17.5-amd64

# cd ../for_master0/

# ls

1804debs.tar.xz ansible-playbook_exe docker-compose docker.tar.gz

ansible_exe autoindex.tar.xz dockerDebs.tar.gz portable-ansible-v0.4.1-py2.tar.bz2

Generate the docker registry offline files (on the existing cluster's master0):

# systemctl stop docker-registry.service

# cd /var/lib/docker-registry

# mv docker docker.back

# systemctl start docker-registry.service

# docker push nginx:1.17

# docker push kubernetesui/dashboard-amd64:v2.0.0

# docker push k8s.gcr.io/kube-proxy:v1.17.5

# docker push k8s.gcr.io/kube-apiserver:v1.17.5

# docker push k8s.gcr.io/kube-controller-manager:v1.17.5

# docker push k8s.gcr.io/kube-scheduler:v1.17.5

# docker push k8s.gcr.io/k8s-dns-node-cache:1.15.12

# docker push calico/cni:v3.13.2

# docker push calico/kube-controllers:v3.13.2

# docker push calico/node:v3.13.2

# docker push kubernetesui/metrics-scraper:v1.0.4

# docker push lachlanevenson/k8s-helm:v3.1.2

# docker push k8s.gcr.io/addon-resizer:1.8.8

# docker push coredns/coredns:1.6.5

# docker push k8s.gcr.io/metrics-server-amd64:v0.3.6

# docker push k8s.gcr.io/cluster-proportional-autoscaler-amd64:1.7.1

# docker push quay.io/coreos/etcd:v3.3.12

# docker push k8s.gcr.io/pause:3.1

# systemctl stop docker-registry.service

# du -hs docker/

484M docker

# tar czvf docker.tar.gz docker/

Kubeadm signature:

# cd /root && wget https://dl.google.com/go/go1.14.2.linux-amd64.tar.gz

# tar xzvf go1.14.2.linux-amd64.tar.gz

# vim /root/.profile

PATH="$PATH:/root/go/bin/"

# source ~/.profile

# go version

go version go1.14.2 linux/amd64

# wget https://github.com/kubernetes/kubernetes/archive/v1.17.5.zip

# unzip v1.17.5.zip

# cd kubernetes-1.17.5/

Make the code changes for the git tree state and the certificate validity period:

# diff kubernetes-1.17.5/hack/lib/version.sh ../kubernetes-1.17.5/hack/lib/version.sh

47c47

< KUBE_GIT_TREE_STATE="archive"

---

> KUBE_GIT_TREE_STATE="clean"

64c64

< KUBE_GIT_TREE_STATE="dirty"

---

> KUBE_GIT_TREE_STATE="clean"

# diff kubernetes-1.17.5/cmd/kubeadm/app/constants/constants.go ../kubernetes-1.17.5/cmd/kubeadm/app/constants/constants.go

47c47

< CertificateValidity = time.Hour * 24 * 365

---

> CertificateValidity = time.Hour * 24 * 365 * 100

# diff kubernetes-1.17.5/vendor/k8s.io/client-go/util/cert/cert.go ../kubernetes-1.17.5/vendor/k8s.io/client-go/util/cert/cert.go

66c66

< NotAfter: now.Add(duration365d * 10).UTC(),

---

> NotAfter: now.Add(duration365d * 100).UTC(),

96c96

< maxAge := time.Hour * 24 * 365 // one year self-signed certs

---

> maxAge := time.Hour * 24 * 365 * 100 // one year self-signed certs

110c110

< maxAge = 100 * time.Hour * 24 * 365 // 100 years fixtures

---

> maxAge = 100 * time.Hour * 24 * 365 // 100 years fixtures

124c124

< NotAfter: validFrom.Add(maxAge),

---

> NotAfter: validFrom.Add(maxAge * 100),

152c152

< NotAfter: validFrom.Add(maxAge),

---

> NotAfter: validFrom.Add(maxAge * 100),
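A quick sanity check on these numbers: Go's time.Duration is an int64 count of nanoseconds, so it tops out at roughly 292 years. One hundred years fits, but in the cert.go diff maxAge is already 100 years after the change at line 96, and NotAfter then multiplies it by 100 again. The sketch below redoes that arithmetic in Python; that both changes really hit the same variable at run time is an assumption here and has not been verified against the source.

```python
INT64_MAX = 2**63 - 1
NS_PER_HOUR = 3600 * 10**9  # Go's time.Hour expressed in nanoseconds


def years_to_ns(years):
    """Nanoseconds in `years` 365-day years (time.Hour * 24 * 365 * years)."""
    return NS_PER_HOUR * 24 * 365 * years


def fits_int64(ns):
    """True if the value can be stored in a signed 64-bit integer."""
    return -2**63 <= ns <= INT64_MAX


def wrap_int64(ns):
    """Value after two's-complement wraparound of a signed 64-bit integer."""
    return (ns + 2**63) % 2**64 - 2**63


if __name__ == "__main__":
    hundred_years = years_to_ns(100)
    print("100 years fits in int64:", fits_int64(hundred_years))
    print("100 years * 100 fits in int64:", fits_int64(hundred_years * 100))
    print("largest whole number of years:", INT64_MAX // years_to_ns(1))
    print("wrapped value in ns:", wrap_int64(hundred_years * 100))
```

If the second multiplication does apply, the resulting NotAfter would not be 10,000 years away but whatever the wrapped value gives, so a plain 100-year maxAge without the extra factor looks like the safer edit.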

Make kubeadm binary files:

# make all WHAT=cmd/kubeadm

# cd _output/bin

# ls

conversion-gen deepcopy-gen defaulter-gen go2make go-bindata kubeadm openapi-gen

# ./kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"17", GitVersion:"v1.17.5", GitCommit:"e0fccafd69541e3750d460ba0f9743b90336f24f", GitTreeState:"clean", BuildDate:"2020-05-06T03:23:16Z", GoVersion:"go1.14.2", Compiler:"gc", Platform:"linux/amd64"}

The code changes are mainly in 1_preinstall and 3_k8s (diffs TBD).

After these changes almost everything behaves as in the old versions.

kubespray changes

In the new version we have to comment out the following tasks:

# roles/kubespray-defaults/tasks/main.yaml

# do not run gather facts when bootstrap-os in roles

#- name: set fallback_ips

#  include_tasks: fallback_ips.yml

#  when:

#    - "'bootstrap-os' not in ansible_play_role_names"

#    - fallback_ips is not defined

#  tags:

#    - always

#

#- name: set no_proxy

#  include_tasks: no_proxy.yml

#  when:

#    - "'bootstrap-os' not in ansible_play_role_names"

#    - http_proxy is defined or https_proxy is defined

#    - no_proxy is not defined

#  tags:

#    - always

Also change the docker role of container-engine so that docker is not restarted, which keeps the graphical installation going:

# cat container-engine/docker/handlers/main.yml

---

- name: restart docker

  command: echo "HelloWorld"

#  command: /bin/true

#  notify:

#    - Docker | reload systemd

#    - Docker | reload docker.socket

#    - Docker | reload docker

#    - Docker | wait for docker

Apr 21, 2020

Just recording:

[root@3652a460ae13 apps]# python manage.py shell

Python 3.6.1 (default, Jun 29 2018, 02:56:19)

Type 'copyright', 'credits' or 'license' for more information

IPython 6.5.0 -- An enhanced Interactive Python. Type '?' for help.

In [1]: import requests

...: import time

...:

...: from kubeops_api.apps_client import AppsClient

...: from kubeops_api.models.host import Host

...: from kubeops_api.cluster_data import LokiContainer

...:

...:

In [2]: import kubernetes.client

...: import redis

...: import json

...: import logging

...: import kubeoperator.settings

...: import log.es

...: import datetime, time

...: import builtins

...:

...: from kubernetes.client.rest import ApiException

...: from kubeops_api.cluster_data import ClusterData, Pod, NameSpace, Node, Container, Deployment, StorageClass, PVC, Event

...: from kubeops_api.models.cluster import Cluster

...: from kubeops_api.prometheus_client import PrometheusClient

...: from kubeops_api.models.host import Host

...: from django.db.models import Q

...: from kubeops_api.cluster_health_data import ClusterHealthData

...: from django.utils import timezone

...: from ansible_api.models.inventory import Host as C_Host

...: from common.ssh import SSHClient, SshConfig

...: from message_center.message_client import MessageClient

...: from kubeops_api.utils.date_encoder import DateEncoder

...:

...:

In [3]:

In [3]: project_name = "kingston"

In [4]: cluster = Cluster.objects.get(name=project_name)

In [5]: host = "loki.apps.kingston.mydomain.com"

In [6]: config = {

...: 'host': host,

...: 'cluster': cluster

...: }

In [7]:

In [7]: print(config)

{'host': 'loki.apps.kingston.mydomain.com', 'cluster': <Cluster: kingston>}

In [8]: prom_client = PrometheusClient(config)

In [9]: label_url = "http://loki.apps.kingston.mydomain.com/loki/api/v1/label/container_name/values"

In [10]: app_client = AppsClient(cluster=cluster)

In [11]: label_query_url = label_url.format(host="loki.apps.kingston.mydomain.com")

In [12]: label_req = app_client.get('loki', label_query_url)

In [13]: label_req.ok

Out[13]: True

In [14]: now = time.time()

In [15]: end = int(round(now * 1000 * 1000000))

In [16]: start = int(round(now * 1000 - 3600000) * 1000000)

In [17]: label_req_json = label_req.json()

In [18]: print(label_req_json)

{'status': 'success', 'data': ['autoscaler', 'calico-kube-controllers', 'calico-node', 'chartmuseum', 'chartsvc', 'controller', 'coredns', 'dashboard', 'grafana', 'install-cni', 'kube-apiserver', 'kube-controller-manager', 'kube-proxy', 'kube-scheduler', 'kubeapps-plus-mongodb', 'kubernetes-dashboard', 'loki', 'metrics-server', 'metrics-server-nanny', 'nfs-client-provisioner', 'nginx', 'node-problem-detector', 'prometheus-alertmanager', 'prometheus-alertmanager-configmap-reload', 'prometheus-kube-state-metrics', 'prometheus-node-exporter', 'prometheus-server', 'prometheus-server-configmap-reload', 'promtail', 'proxy', 'registry', 'registry-ui', 'sync', 'tiller', 'traefik-ingress-lb']}

In [19]: values = label_req_json.get('data', [])

In [20]: print(values)

['autoscaler', 'calico-kube-controllers', 'calico-node', 'chartmuseum', 'chartsvc', 'controller', 'coredns', 'dashboard', 'grafana', 'install-cni', 'kube-apiserver', 'kube-controller-manager', 'kube-proxy', 'kube-scheduler', 'kubeapps-plus-mongodb', 'kubernetes-dashboard', 'loki', 'metrics-server', 'metrics-server-nanny', 'nfs-client-provisioner', 'nginx', 'node-problem-detector', 'prometheus-alertmanager', 'prometheus-alertmanager-configmap-reload', 'prometheus-kube-state-metrics', 'prometheus-node-exporter', 'prometheus-server', 'prometheus-server-configmap-reload', 'promtail', 'proxy', 'registry', 'registry-ui', 'sync', 'tiller', 'traefik-ingress-lb']

In [21]: for name in values:

...:     error_count = 0

...:     prom_url = 'http://{host}/api/prom/query?limit=1000&query={{container_name="{name}"}}&start={start}&end={end}'

...:     prom_query_url = prom_url.format(host="loki.apps.kingston.mydomain.com", name=name, start=start, end=end)

...:     prom_req = app_client.get('loki', prom_query_url)

...:     if prom_req.ok:

...:         prom_req_json = prom_req.json()

...:         streams = prom_req_json.get('streams', [])

...:         for stream in streams:

...:             entries = stream.get('entries', [])

...:             for entry in entries:

...:                 line = entry.get('line', None)

...:                 print(line)

In [29]: for name in values:

...:     error_count = 0

...:     prom_url = 'http://{host}/api/prom/query?limit=1000&query={{container_name="{name}"}}&start={start}&end={end}'

...:     prom_query_url = prom_url.format(host="loki.apps.kingston.mydomain.com", name=name, start=start, end=end)

...:     prom_req = app_client.get('loki', prom_query_url)

...:     if prom_req.ok:

...:         prom_req_json = prom_req.json()

...:         streams = prom_req_json.get('streams', [])

...:         for stream in streams:

...:             entries = stream.get('entries', [])

...:             for entry in entries:

...:                 line = entry.get('line', None)

...:                 if line is not None and 'level=error' in line:

...:                     error_count = error_count + 1

This way you can fetch the container logs for kubeoperator from python and count the error lines.
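The two pieces of logic in the session, the one-hour time window in nanoseconds and the error counting, can be pulled out into plain functions that do not need the kubeoperator shell. This is a sketch only: the response shape (streams, entries, line) is taken from the session above, and the function names are made up here.

```python
import time


def last_hour_window_ns(now=None):
    """Return (start, end) of the last hour as nanosecond timestamps."""
    now = time.time() if now is None else now
    # Round to milliseconds first, then scale to nanoseconds, as in the session.
    end = int(round(now * 1000)) * 1_000_000
    start = end - 3600 * 10**9
    return start, end


def count_errors(response_json, marker="level=error"):
    """Count log lines containing `marker` in a streams/entries/line response."""
    count = 0
    for stream in response_json.get("streams", []):
        for entry in stream.get("entries", []):
            line = entry.get("line")
            if line is not None and marker in line:
                count += 1
    return count


if __name__ == "__main__":
    # Hand-written sample data, not real output from a cluster.
    sample = {"streams": [{"entries": [{"line": "level=error msg=boom"},
                                       {"line": "level=info msg=ok"}]}]}
    print(last_hour_window_ns())
    print(count_errors(sample))
```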