Jul 9, 2020

Technology

Install NVM

Install nvm via:

$ yaourt nvm

Then load nvm from ~/.zshrc (open a new shell afterwards so it takes effect):

$ echo 'source /usr/share/nvm/init-nvm.sh' >> ~/.zshrc

$ which nvm

...

$ nvm install v10.21.0

$ node -v

v10.21.0
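To confirm that the shell picked up the nvm-managed Node.js, a small Python check like the one below can compare the output of node -v with the pinned release. This is a sketch. It assumes you run it from a shell where nvm is already loaded, so that the node on PATH is the nvm one. EXPECTED_NODE is simply the version used in this post.

```python
import re
import shutil
import subprocess

# Version pinned in this post; change it if you install a different release.
EXPECTED_NODE = "v10.21.0"


def parse_node_version(text):
    """Parse output such as 'v10.21.0' into a (major, minor, patch) tuple."""
    match = re.fullmatch(r"v(\d+)\.(\d+)\.(\d+)", text.strip())
    if match is None:
        raise ValueError(f"unexpected node version string: {text!r}")
    return tuple(int(part) for part in match.groups())


def active_node_version():
    """Run `node -v` from PATH and return the parsed version, or None if node is not found."""
    node = shutil.which("node")
    if node is None:
        return None
    out = subprocess.run([node, "-v"], capture_output=True, text=True, check=True).stdout
    return parse_node_version(out)


if __name__ == "__main__":
    current = active_node_version()
    expected = parse_node_version(EXPECTED_NODE)
    print("node:", current, "expected:", expected, "OK" if current == expected else "MISMATCH")
```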

Set up the environment

Set up the Angular proxy configuration:

# cat proxy.conf.json

{
  "/api": {
    "target": "http://10.137.149.4",
    "secure": false
  },
  "/admin": {
    "target": "http://10.137.149.4",
    "secure": false
  },
  "/static": {
    "target": "http://10.137.149.4",
    "secure": false
  },
  "/ws": {
    "target": "http://10.137.149.4",
    "secure": false,
    "ws": true
  }
}
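If the backend address changes often, the proxy file can be generated rather than edited by hand. The sketch below rebuilds the same four routes. BACKEND is the address from the file above and should be replaced with your own. Only the WebSocket route gets "ws": true, which tells the dev server to proxy connection upgrades.

```python
import json

# Backend address copied from the config above; replace it with your own server.
BACKEND = "http://10.137.149.4"


def build_proxy_config(target, prefixes=("/api", "/admin", "/static"), ws_prefixes=("/ws",)):
    """Return an Angular CLI proxy config that forwards each path prefix to `target`."""
    config = {}
    for prefix in prefixes:
        config[prefix] = {"target": target, "secure": False}
    for prefix in ws_prefixes:
        # WebSocket routes additionally need "ws": true so upgrades are proxied too.
        config[prefix] = {"target": target, "secure": False, "ws": True}
    return config


if __name__ == "__main__":
    with open("proxy.conf.json", "w", encoding="utf-8") as fh:
        json.dump(build_proxy_config(BACKEND), fh, indent=2)
        fh.write("\n")
```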

Install packages via:

# npm install -g npm

# SASS_BINARY_SITE=https://npm.taobao.org/mirrors/node-sass/ npm install node-sass@4.10.0

# npm --registry=https://registry.npm.taobao.org --disturl=https://npm.taobao.org/dist install

# npm install -g cnpm --registry=https://registry.npm.taobao.org

# sudo cnpm install -g @angular/cli

# sudo cnpm install -g @angular-devkit/build-angular

# ng serve --proxy-config proxy.conf.json --host 0.0.0.0

Once ng serve is running, you have a development environment: change the code and view the result in the browser.

Jul 6, 2020

Technology

Later development will be based on this framework, so I am recording the setup steps from the start for future reference.

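Base environment

Hardware/software environment:

Virtual machine, 4 cores, 10 GB RAM, no swap partition

Ubuntu 20.04

Install Docker. First stop and disable the automatic apt update timers so they do not hold the apt/dpkg lock during installation:

# systemctl stop apt-daily.timer;systemctl disable apt-daily.timer ; systemctl stop apt-daily-upgrade.timer ; systemctl disable apt-daily-upgrade.timer ; systemctl stop apt-daily.service ; systemctl mask apt-daily.service ; systemctl daemon-reload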

# sudo apt-get install -y docker.io

# sudo docker version

19.03.8

# sudo apt-get install -y python3-dev sshpass default-libmysqlclient-dev krb5-config krb5-user python3-pip libkrb5-dev

# sudo apt-get install -y libxml2-dev libxslt1-dev zlib1g-dev libffi-dev libssl-dev unzip

Create the database:

# apt-get install -y mariadb-server

# mysql -u root

MariaDB [(none)]> CREATE USER 'devops'@'localhost' IDENTIFIED BY 'devops';

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON * . * TO 'devops'@'localhost';

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.001 sec)

MariaDB [(none)]> SHOW GRANTS FOR 'devops'@localhost;

+------------------------------------------------------------------------------------------------------------------------+

| Grants for devops@localhost |

+------------------------------------------------------------------------------------------------------------------------+

| GRANT ALL PRIVILEGES ON *.* TO 'devops'@'localhost' IDENTIFIED BY PASSWORD '*2683A2B8A9DE120C7B5CC6D45B5F7A2E708FAFCF' |

+------------------------------------------------------------------------------------------------------------------------+

1 row in set (0.000 sec)

MariaDB [(none)]> create database devops;

Query OK, 1 row affected (0.000 sec)

MariaDB [(none)]> grant all privileges on devops.* TO 'devops'@'localhost' identified by 'devops';

Query OK, 0 rows affected (0.001 sec)

MariaDB [(none)]> flush privileges;

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> quit;
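The grants above can also be kept as a reproducible script. The sketch below makes two assumptions. First, it narrows the grant to devops.* only instead of *.*. Second, it adds a login check through pymysql, which is imported lazily so that the SQL helper works without it. Values are interpolated without escaping, so only pass trusted literals. DB_NAME, DB_USER, and DB_HOST are illustrative names that mirror this post.

```python
# Values used in this post; they are illustrative defaults, not requirements.
DB_NAME = "devops"
DB_USER = "devops"
DB_HOST = "localhost"


def grant_statements(db, user, host, password):
    """Return SQL that creates `db` and gives `user`@`host` full rights on that database only."""
    return [
        f"CREATE USER IF NOT EXISTS '{user}'@'{host}' IDENTIFIED BY '{password}';",
        f"CREATE DATABASE IF NOT EXISTS {db};",
        f"GRANT ALL PRIVILEGES ON {db}.* TO '{user}'@'{host}';",
        "FLUSH PRIVILEGES;",
    ]


def check_login(password, host="localhost", port=3306):
    """Log in as DB_USER and return the name of the selected database (needs a running server)."""
    import pymysql  # imported lazily; pymysql is pinned in the requirements.txt of this post

    conn = pymysql.connect(host=host, port=port, user=DB_USER,
                           password=password, database=DB_NAME)
    try:
        with conn.cursor() as cur:
            cur.execute("SELECT DATABASE()")
            return cur.fetchone()[0]
    finally:
        conn.close()


if __name__ == "__main__":
    for stmt in grant_statements(DB_NAME, DB_USER, DB_HOST, "devops"):
        print(stmt)
```

Pipe the printed statements into mysql -u root to apply them. Once the server is up, check_login("devops") should return "devops".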

Prepare the Redis and guacd environments (redis.tar is a previously saved redis:alpine image):

# docker load < redis.tar

Loaded images: redis:alpine

# docker run --name redis-server -p 6379:6379 -d redis:alpine

# netstat -anp | grep 6379

tcp6 0 0 :::6379 :::* LISTEN 9183/docker-proxy

# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

bf2d4dd7767f redis:alpine "docker-entrypoint.s…" 8 seconds ago Up 5 seconds 0.0.0.0:6379->6379/tcp redis-server

# docker pull guacamole/guacd:latest

# docker run --name guacd -e GUACD_LOG_LEVEL=info -v /home/vagrant/devops/media/guacd:/fs -p 4822:4822 -d guacamole/guacd

de109f5e821648bea88bb8cc08267934df09e87c113835118c956c05087f9c3b

root@leffss-1:~# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

de109f5e8216 guacamole/guacd "/bin/sh -c '/usr/lo…" 4 seconds ago Up 1 second 0.0.0.0:4822->4822/tcp guacd

bf2d4dd7767f redis:alpine "docker-entrypoint.s…" 3 minutes ago Up 3 minutes 0.0.0.0:6379->6379/tcp redis-server
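Instead of grepping netstat, a plain TCP connect is enough to tell whether the two published ports accept connections. This is a minimal sketch. It only checks that something is listening on 6379 and 4822. It does not speak the Redis or guacd protocols.

```python
import socket

# Ports published by the two containers above (redis-server and guacd).
SERVICES = {"redis-server": 6379, "guacd": 4822}


def is_listening(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


if __name__ == "__main__":
    for name, port in SERVICES.items():
        state = "up" if is_listening("127.0.0.1", port) else "DOWN"
        print(f"{name:<13} {port:>5} {state}")
```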

Create a Python 3 (3.8) virtual environment:

# apt-get install -y python3-venv

# python3 -m venv devops_venv

root@leffss-1:/home/vagrant/venv# source devops_venv/bin/activate

(devops_venv) root@leffss-1:/home/vagrant/venv# which python

/home/vagrant/venv/devops_venv/bin/python

# cat requirements.txt

django==2.2.11

supervisor==4.1.0

# simpleui

paramiko==2.6.0

decorator==4.4.2

gssapi==1.6.2

pyasn1==0.4.3

channels==2.2.0

channels-redis==2.4.0

celery==4.3.0

redis==3.3.11

eventlet==0.23.0

selectors2==2.0.1

django-redis==4.10.0

pyguacamole==0.8

# ansible==2.8.5

ansible==2.9.2

daphne==2.3.0

# gunicorn==19.9.0

gunicorn==20.0.3

gevent==1.4.0

async==0.6.2

pymysql==0.9.3

django-ratelimit==2.0.0

cryptography==2.7

mysqlclient==1.4.4

apscheduler==3.6.3

django-apscheduler==0.3.0

requests==2.22.0

jsonpickle==1.2

redisbeat==1.2.3

# pip3 install -i https://mirrors.aliyun.com/pypi/simple -r requirements.txt
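After the install, a quick way to spot packages that did not resolve to the pinned versions is to compare requirements.txt with the installed distributions. This sketch uses importlib.metadata, which is available from Python 3.8 (the venv version above). It only understands plain name==version lines. Distribution-name normalization differs between tools, so an occasional false mismatch is possible.

```python
from importlib import metadata


def parse_requirements(text):
    """Map package name -> pinned version for every `name==version` line, skipping comments."""
    pins = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drops comment lines such as "# simpleui"
        if not line or "==" not in line:
            continue
        name, version = (part.strip() for part in line.split("==", 1))
        pins[name.lower()] = version
    return pins


def mismatches(pins):
    """Yield (name, pinned, installed) for packages that are missing or at another version."""
    for name, pinned in pins.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        if installed != pinned:
            yield name, pinned, installed


if __name__ == "__main__":
    with open("requirements.txt", encoding="utf-8") as fh:
        for name, pinned, installed in mismatches(parse_requirements(fh.read())):
            print(f"{name}: want {pinned}, have {installed}")
```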

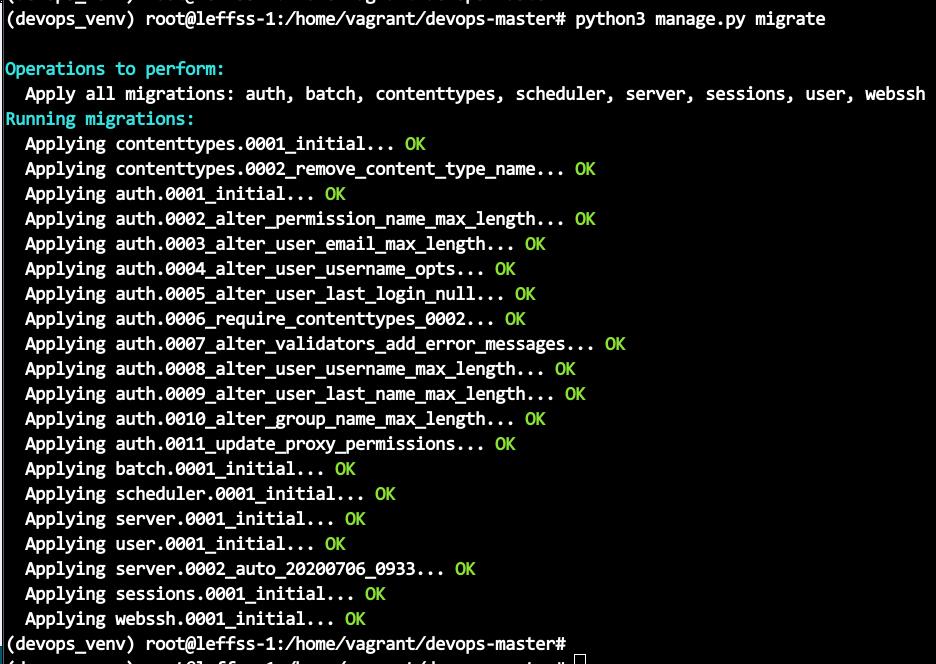

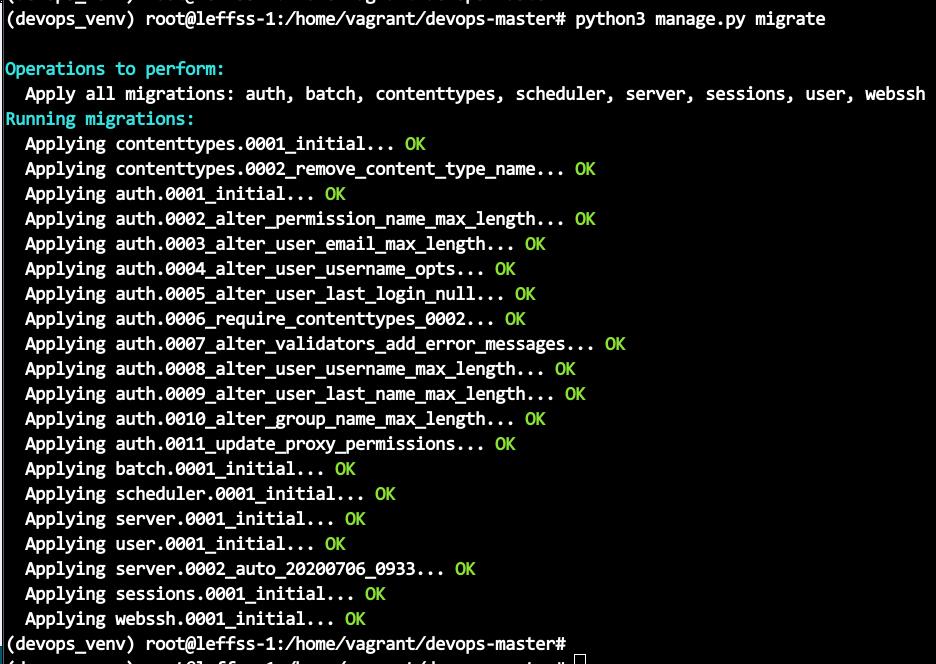

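Create the database tables:

# sh delete_makemigrations.sh

# rm -f db.sqlite3

(The two commands above remove any data left over from a previous development environment.)

# vim devops/settings.py

Comment out the DATABASE-related settings.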

# python3 manage.py makemigrations

# python3 manage.py migrate

Initialize the data:

# python3 manage.py loaddata initial_data.json

# python3 init.py

Start the Django services:

# mkdir -p logs

# export PYTHONOPTIMIZE=1 # works around Celery not allowing its worker processes to create child processes

# nohup gunicorn -c gunicorn.cfg devops.wsgi:application > logs/gunicorn.log 2>&1 &

# nohup daphne -b 0.0.0.0 -p 8001 devops.asgi:application > logs/daphne.log 2>&1 &

# nohup python3 manage.py sshd > logs/sshd.log 2>&1 &

# nohup celery -A devops worker -l info -c 3 --max-tasks-per-child 40 --prefetch-multiplier 1 > logs/celery.log 2>&1 &
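The four nohup lines can be wrapped in one launcher so that each service gets its own log file and the PYTHONOPTIMIZE workaround is always applied. This is a sketch, not a process supervisor. It starts each command in a new session, which detaches it from the terminal much as nohup does, but it does not restart crashed services. The supervisor package already pinned in requirements.txt is the sturdier choice for that. The SERVICES commands are copied from above.

```python
import os
import subprocess
from pathlib import Path

# The four long-running services started above; commands copied from this post.
SERVICES = {
    "gunicorn": ["gunicorn", "-c", "gunicorn.cfg", "devops.wsgi:application"],
    "daphne": ["daphne", "-b", "0.0.0.0", "-p", "8001", "devops.asgi:application"],
    "sshd": ["python3", "manage.py", "sshd"],
    "celery": ["celery", "-A", "devops", "worker", "-l", "info", "-c", "3",
               "--max-tasks-per-child", "40", "--prefetch-multiplier", "1"],
}


def launch(services, log_dir="logs"):
    """Start each command detached, appending stdout+stderr to <log_dir>/<name>.log."""
    Path(log_dir).mkdir(parents=True, exist_ok=True)
    env = dict(os.environ, PYTHONOPTIMIZE="1")  # same workaround as the export above
    procs = {}
    for name, argv in services.items():
        log = open(Path(log_dir) / f"{name}.log", "ab")
        procs[name] = subprocess.Popen(
            argv, stdout=log, stderr=subprocess.STDOUT, env=env,
            start_new_session=True,  # detach from the controlling terminal, similar to nohup
        )
        log.close()  # the child keeps its own copy of the file descriptor
    return procs


if __name__ == "__main__":
    for name, proc in launch(SERVICES).items():
        print(name, "pid", proc.pid)
```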

Configure the front-end proxy:

# sudo apt-get install -y nginx

Then edit the nginx site configuration:

# cat /etc/nginx/sites-enabled/default

upstream wsgi-backend {

ip_hash;

server 127.0.0.1:8000 max_fails=3 fail_timeout=0;

}

upstream asgi-backend {

ip_hash;

server 127.0.0.1:8001 max_fails=3 fail_timeout=0;

}

server {

listen 80 default_server;

listen [::]:80 default_server;

server_name _;

client_max_body_size 30m;

add_header X-Frame-Options "DENY";

location ~* ^/(media|static) {

root /home/vagrant/devops-master; # adjust this directory to your actual deployment path

# expires 30d;

if ($request_filename ~* .*\.(css|js|gif|jpg|jpeg|png|bmp|swf|svg)$)

{

expires 7d;

}

}

location /ws {

try_files $uri @proxy_to_ws;

}

location @proxy_to_ws {

proxy_pass http://asgi-backend;

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

proxy_redirect off;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_set_header X-Forwarded-Port $server_port;

proxy_set_header X-Forwarded-Host $server_name;

proxy_intercept_errors on;

recursive_error_pages on;

}

location / {

try_files $uri @proxy_to_app;

}

location @proxy_to_app {

proxy_pass http://wsgi-backend;

proxy_http_version 1.1;

proxy_redirect off;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_set_header X-Forwarded-Port $server_port;

proxy_set_header X-Forwarded-Host $server_name;

proxy_intercept_errors on;

recursive_error_pages on;

}

location = /favicon.ico {

access_log off; # disable the normal access log

}

error_page 404 /404.html;

location = /404.html {

root /usr/share/nginx/html;

if ( $request_uri ~ ^/favicon\.ico$ ) { # disable the 404 error log for favicon.ico

access_log off;

}

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /usr/share/nginx/html;

}

}

You can now access the system through nginx on port 80.
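For a scripted smoke test of the whole chain (nginx, then gunicorn), a GET against the nginx address is enough. In this sketch, HTTP error codes are returned rather than raised, so a 502 from nginx (backend down) is easy to see. urlopen follows redirects, so a redirect to a login page reports the final status. The host in the example is hypothetical.

```python
import urllib.error
import urllib.request


def http_status(url, timeout=5.0):
    """Return the final HTTP status code for GET `url`; 4xx/5xx codes are returned, not raised."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code


if __name__ == "__main__":
    # Hypothetical address: replace with the machine running nginx.
    print(http_status("http://127.0.0.1/"))
```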

Jul 3, 2020

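Technology

Installation notes for a certain domestic Chinese operating system. Don't ask why I am writing up something with so little technical content: policy (ZHENGCE) requires it, and some companies just want to earn the funding. Anke (the "secure and controllable" IT substitution drive) is a business, nothing more.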

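Installation

Nothing special: in virt-manager, install from the ISO with a minimal installation, and reboot when it finishes.

After installation, sure enough: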

# cat /etc/redhat-release

Red Hat Enterprise Linux Server release 7.6 (Maipo)

Configuration

Mount the ISO and use it as a local yum repository:

# mount /dev/sr0 /mnt

# vim /etc/yum.repos.d/local.repo

[local]

name=local

baseurl=file:///mnt

enabled=1

gpgcheck=0
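The same repository file can be rendered with configparser, which is convenient when the mount point differs between machines. This is a minimal sketch. It assumes the ISO is mounted at /mnt as above. space_around_delimiters=False keeps the key=value style of the hand-written file. Writing into /etc/yum.repos.d needs root.

```python
import configparser
import io


def local_repo(mount_point="/mnt", repo_id="local"):
    """Render a yum .repo file that serves packages straight from the mounted ISO."""
    parser = configparser.ConfigParser()
    parser[repo_id] = {
        "name": repo_id,
        "baseurl": f"file://{mount_point}",
        "enabled": "1",
        "gpgcheck": "0",  # GPG checking disabled, as in the file above
    }
    buf = io.StringIO()
    parser.write(buf, space_around_delimiters=False)
    return buf.getvalue()


if __name__ == "__main__":
    with open("/etc/yum.repos.d/local.repo", "w") as fh:
        fh.write(local_repo())
```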

# mv /etc/yum.repos.d/ns7-adv.repo /root

# yum update

Install the necessary packages (exactly the same as on RHEL 7.6):

# yum groupinstall "Server with GUI"

# yum install -y tigervnc-server git gcc gcc-c++ java-11-openjdk java-11-openjdk-devel iotop

# vncserver

# systemctl stop firewalld && systemctl disable firewalld

Connecting with vncviewer now shows a desktop identical to RHEL's.

Bring in the xrdp package from an Internet-connected machine:

# apt-get install -y docker.io

# docker pull centos:7

# docker run -it centos:7 /bin/bash

# vim /etc/yum.conf

keepcache=1

# yum install -y epel-release

# yum update

# yum install -y xrdp
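With keepcache=1, yum keeps the downloaded RPMs under /var/cache/yum, so they can be gathered and carried to the offline machine. The centos:7 image ships without Python 3. This sketch therefore assumes you first copy the cache out to the Internet-connected host with docker cp <container>:/var/cache/yum ./yum-cache and run the script there. The directory names are illustrative.

```python
import shutil
from pathlib import Path


def collect_rpms(cache_dir="yum-cache", dest="rpms"):
    """Copy every *.rpm found anywhere under `cache_dir` into `dest`; return the copied names."""
    out = Path(dest)
    out.mkdir(parents=True, exist_ok=True)
    copied = []
    for rpm in sorted(Path(cache_dir).rglob("*.rpm")):
        shutil.copy2(rpm, out / rpm.name)  # flatten the per-repo cache layout
        copied.append(rpm.name)
    return copied


if __name__ == "__main__":
    for name in collect_rpms():
        print(name)
```

On the target machine, the collected directory can then be installed with yum localinstall.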

Install and start xrdp on the target machine:

# yum install -y xrdp

# systemctl enable xrdp

# systemctl start xrdp

Disable the license check:

# mv /etc/xdg/autostart/licmanager /root

Now you can use it.

Transform it into lxc and run lxc

Jun 23, 2020

Technology

Building the image

Convert image:

# qemu-img convert a.qcow2 a.img

# kpartx -av a.img

# vgscan

# lvscan

# mount /dev/vg-root/xougowueg /mnt

# sudo tar -cvzf rootfs.tar.gz -C /mnt .

Create metadata.tar.gz and import the image:

# vim metadata.yaml

architecture: "aarch64"
creation_date: 1592803465
properties:
  architecture: "aarch64"
  description: "Rong-node"
  os: "ubuntu"
  release: "focal"

# tar czvf metadata.tar.gz metadata.yaml
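The metadata file and its tarball can also be produced in one step, which saves looking up a Unix timestamp for creation_date by hand. This is a sketch that writes the YAML as plain text, so PyYAML is not needed. The defaults reproduce the values above. The function names are illustrative.

```python
import io
import tarfile
import time


def metadata_yaml(arch="aarch64", description="Rong-node", os_name="ubuntu",
                  release="focal", created=None):
    """Render an LXD image metadata.yaml; `created` defaults to the current Unix time."""
    created = int(time.time()) if created is None else int(created)
    return (
        f'architecture: "{arch}"\n'
        f"creation_date: {created}\n"
        "properties:\n"
        f'  architecture: "{arch}"\n'
        f'  description: "{description}"\n'
        f'  os: "{os_name}"\n'
        f'  release: "{release}"\n'
    )


def write_metadata_tarball(path, yaml_text):
    """Write a gzip tarball containing a single top-level metadata.yaml."""
    data = yaml_text.encode("utf-8")
    info = tarfile.TarInfo("metadata.yaml")
    info.size = len(data)
    info.mtime = int(time.time())
    with tarfile.open(path, "w:gz") as tar:
        tar.addfile(info, io.BytesIO(data))


if __name__ == "__main__":
    write_metadata_tarball("metadata.tar.gz", metadata_yaml())
```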

# lxc image import metadata.tar.gz rootfs.tar.gz --alias "gowuogu"

k8s in lxc

Check whether the profile has been created on the host:

# lxc profile list

+---------+---------+

| NAME | USED BY |

+---------+---------+

| default | 0 |

+---------+---------+

| ourk8s | 4 |

+---------+---------+

If it does not exist, create it:

# lxc profile create ourk8s

# cat > kubernetes.profile <<EOF

config:
  linux.kernel_modules: ip_tables,ip6_tables,netlink_diag,nf_nat,overlay,br_netfilter
  raw.lxc: "lxc.apparmor.profile=unconfined\nlxc.cap.drop= \nlxc.cgroup.devices.allow=a\nlxc.mount.auto=proc:rw sys:rw"
  security.nesting: "true"
  security.privileged: "true"
description: Kubernetes LXD profile
devices:
  eth0:
    name: eth0
    nictype: bridged
    parent: lxdbr0
    type: nic
  root:
    path: /
    pool: default
    type: disk
name: kubernetes

EOF

# lxc profile edit ourk8s < kubernetes.profile

Check that rong-2004 exists in the image store:

# lxc image list

+-----------+--------------+--------+-------------+---------+----------+------------------------------+

| ALIAS | FINGERPRINT | PUBLIC | DESCRIPTION | ARCH | SIZE | UPLOAD DATE |

+-----------+--------------+--------+-------------+---------+----------+------------------------------+

| rong-2004 | f511553a81a9 | no | | aarch64 | 674.77MB | Jun 22, 2020 at 6:11am (UTC) |

+-----------+--------------+--------+-------------+---------+----------+------------------------------+

Create three LXC containers:

# lxc launch rong-2004 k8s1 --profile ourk8s && lxc launch rong-2004 k8s2 --profile ourk8s && lxc launch rong-2004 k8s3 --profile ourk8s

Creating k8s1

Starting k8s1

Creating k8s2

Starting k8s2

Creating k8s3

Starting k8s3

Wait about two minutes for the containers to finish starting:

# lxc ls

+---------+---------+----------------------------+-----------------------------------------------+------------+-----------+

| NAME | STATE | IPV4 | IPV6 | TYPE | SNAPSHOTS |

+---------+---------+----------------------------+-----------------------------------------------+------------+-----------+

| k8s1 | RUNNING | 10.230.146.83 (eth0) | fd42:6fd0:9ed5:600b:216:3eff:fede:3897 (eth0) | PERSISTENT | 0 |

+---------+---------+----------------------------+-----------------------------------------------+------------+-----------+

| k8s2 | RUNNING | 10.230.146.201 (eth0) | fd42:6fd0:9ed5:600b:216:3eff:fed1:ab8a (eth0) | PERSISTENT | 0 |

+---------+---------+----------------------------+-----------------------------------------------+------------+-----------+

| k8s3 | RUNNING | 10.230.146.33 (eth0) | fd42:6fd0:9ed5:600b:216:3eff:fef8:f20c (eth0) | PERSISTENT | 0 |

+---------+---------+----------------------------+-----------------------------------------------+------------+-----------+

SSH into k8s1 and run the installation:

# scp -r root@192.192.189.128:/media/sdd/20200617/Rong-v2006-arm .

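Note: only the deployment files under 20200617/Rong-v2006-arm can be deployed inside LXC.

Note: the related changes have been synced to the external network.

Update the IP configuration in hosts.ini and run the install.sh basic installation: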

root@node:/home/test/Rong-v2006-arm# cat hosts.ini

[all]

focal-1 ansible_host=10.230.146.83 ip=10.230.146.83

focal-2 ansible_host=10.230.146.201 ip=10.230.146.201

focal-3 ansible_host=10.230.146.33 ip=10.230.146.33

[kube-deploy]

focal-1

[kube-master]

focal-1

focal-2

[etcd]

focal-1

focal-2

focal-3

[kube-node]

focal-1

focal-2

focal-3

[k8s-cluster:children]

kube-master

kube-node

[all:vars]

ansible_ssh_user=root

ansible_ssh_private_key_file=./.rong/deploy.key

root@node:/home/test/Rong-v2006-arm# ./install.sh basic
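Copying container IPs into hosts.ini by hand is error-prone. The [all] group can be generated from lxc list --format json instead. This sketch assumes the JSON layout in which each container carries state.network.<iface>.addresses entries with "family" and "address" fields. The rename mapping from container names (k8s1...) to inventory names (focal-1...) is hypothetical and mirrors the file above.

```python
import json
import subprocess


def container_ipv4(containers, iface="eth0"):
    """Map container name -> first IPv4 address on `iface` from `lxc list --format json` data."""
    result = {}
    for c in containers:
        network = (c.get("state") or {}).get("network") or {}  # stopped containers lack state
        for addr in network.get(iface, {}).get("addresses", []):
            if addr.get("family") == "inet":
                result[c["name"]] = addr["address"]
                break
    return result


def all_section(ips, rename=None):
    """Render the [all] group of hosts.ini; `rename` maps container names to inventory names."""
    rename = rename or {}
    lines = ["[all]"]
    for name, ip in ips.items():
        lines.append(f"{rename.get(name, name)} ansible_host={ip} ip={ip}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    raw = subprocess.run(["lxc", "list", "--format", "json"],
                         capture_output=True, text=True, check=True).stdout
    ips = container_ipv4(json.loads(raw))
    print(all_section(ips, rename={"k8s1": "focal-1", "k8s2": "focal-2", "k8s3": "focal-3"}))
```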

After installation, check that everything is running:

root@node:/home/test/Rong-v2006-arm# kubectl get nodes

NAME STATUS ROLES AGE VERSION

focal-1 Ready master 9m28s v1.17.6

focal-2 Ready master 8m1s v1.17.6

focal-3 Ready <none> 5m57s v1.17.6

root@node:/home/test/Rong-v2006-arm# kubectl get pods

No resources found in default namespace.

root@node:/home/test/Rong-v2006-arm# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-6df95cc8f5-n5b75 1/1 Running 0 4m38s

kube-system calico-node-88xxf 1/1 Running 1 5m31s

kube-system calico-node-mnjpr 1/1 Running 1 5m31s

kube-system calico-node-sz4v8 1/1 Running 1 5m31s

kube-system coredns-76798d84dd-knq4j 1/1 Running 0 3m54s

kube-system coredns-76798d84dd-llrlt 1/1 Running 0 4m11s

kube-system dns-autoscaler-7b6dc7cdb9-2vgfs 1/1 Running 0 4m4s

kube-system kube-apiserver-focal-1 1/1 Running 0 9m12s

kube-system kube-apiserver-focal-2 1/1 Running 0 7m47s

kube-system kube-controller-manager-focal-1 1/1 Running 0 9m12s

kube-system kube-controller-manager-focal-2 1/1 Running 0 7m46s

kube-system kube-proxy-2nms7 1/1 Running 0 6m2s

kube-system kube-proxy-9cwpm 1/1 Running 0 6m

kube-system kube-proxy-nkd5r 1/1 Running 0 6m4s

kube-system kube-scheduler-focal-1 1/1 Running 0 9m12s

kube-system kube-scheduler-focal-2 1/1 Running 0 7m46s

kube-system kubernetes-dashboard-5d5cb8976f-2hdtq 1/1 Running 0 4m1s

kube-system kubernetes-metrics-scraper-747b4fd5cd-vhfr5 1/1 Running 0 3m59s

kube-system metrics-server-849f86c88f-h6prj 1/2 CrashLoopBackOff 4 3m15s

kube-system nginx-proxy-focal-3 1/1 Running 0 6m8s

kube-system tiller-deploy-56bc5dccc6-cfjkh 1/1 Running 0 3m34s
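In a listing this long, the one problem pod (metrics-server in CrashLoopBackOff) is easy to miss. The sketch below filters kubectl get pods --all-namespaces output for pods that are not fully ready or not Running. It parses the plain column layout shown above. A field selector on status.phase would not be enough here, since a crash-looping pod can still report phase Running. For anything more robust, parsing -o json output is the better route.

```python
import subprocess


def unhealthy_pods(text):
    """Return (namespace, name, ready, status) for pods not fully ready or not Running.

    Expects the columns of `kubectl get pods --all-namespaces`:
    NAMESPACE NAME READY STATUS RESTARTS AGE
    """
    bad = []
    for line in text.strip().splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) < 4:
            continue
        namespace, name, ready, status = fields[:4]
        have, want = ready.split("/")
        if status != "Running" or have != want:
            bad.append((namespace, name, ready, status))
    return bad


if __name__ == "__main__":
    out = subprocess.run(["kubectl", "get", "pods", "--all-namespaces"],
                         capture_output=True, text=True, check=True).stdout
    for pod in unhealthy_pods(out):
        print(*pod)
```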

Cleanup

After verification, you can delete the containers you no longer need:

# lxc stop XXXXX

# lxc rm XXXXX

If deletion fails, remove the immutable attribute from the offending file inside the container, then delete it again:

root@arm01:~/app# lxc rm kkkkk

Error: error removing /var/lib/lxd/storage-pools/default/containers/kkkkk: rm: cannot remove '/var/lib/lxd/storage-pools/default/containers/kkkkk/rootfs/etc/resolv.conf': Operation not permitted

root@arm01:~/app# chattr -i /var/lib/lxd/storage-pools/default/containers/kkkkk/rootfs/etc/resolv.conf

root@arm01:~/app# lxc rm kkkkk

kpartx issue

List the device mappings that would be created:

# kpartx -l sgoeuog.img

Create the mappings (device nodes) for the image:

# kpartx -av gowgowu.img

/dev/loop0

/dev/mapper/loop0p1

...

/dev/mapper/loop0pn

Jun 14, 2020

Technology

Download the installation files

From the following URL:

https://github.com/goharbor/harbor/releases/download/v2.0.0/harbor-offline-installer-v2.0.0.tgz

Configure and install

Make new certificate files via:

TBD

Modify the harbor.yml file (diff against the shipped template, harbor.yml.tmpl; lines marked < are the values used here):

5c5

< hostname: portus.fugouou.com

---

> hostname: reg.mydomain.com

15c15

< port: 5000

---

> port: 443

17,18c17,18

< certificate: /data/cert/portus.crt

< private_key: /data/cert/portus.key

---

> certificate: /your/certificate/path

> private_key: /your/private/key/path

29c29

< external_url: https://portus.fugouou.com:5000

---

> # external_url: https://reg.mydomain.com:8433

78c78

< skip_update: true

---

> skip_update: false
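To reproduce these edits on a fresh installer, the template can be rewritten line by line instead of by hand. This is a sketch. It replaces whole lines whose stripped content matches a key in EDITS and keeps their original indentation. It assumes each template line appears only once, as the diff suggests. EDITS just restates the diff above.

```python
from pathlib import Path

# Template line -> line used in this post (taken from the diff above).
EDITS = {
    "hostname: reg.mydomain.com": "hostname: portus.fugouou.com",
    "port: 443": "port: 5000",
    "certificate: /your/certificate/path": "certificate: /data/cert/portus.crt",
    "private_key: /your/private/key/path": "private_key: /data/cert/portus.key",
    "# external_url: https://reg.mydomain.com:8433": "external_url: https://portus.fugouou.com:5000",
    "skip_update: false": "skip_update: true",
}


def apply_edits(text, edits):
    """Replace lines whose stripped content is a key in `edits`, preserving indentation."""
    out = []
    for line in text.splitlines(keepends=True):
        stripped = line.strip()
        if stripped in edits:
            indent = line[: len(line) - len(line.lstrip())]
            newline = "\n" if line.endswith("\n") else ""
            line = indent + edits[stripped] + newline
        out.append(line)
    return "".join(out)


if __name__ == "__main__":
    template = Path("harbor.yml.tmpl").read_text()
    Path("harbor.yml").write_text(apply_edits(template, EDITS))
```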

Use the newly generated certificate files:

# mkdir -p /data/cert

# cp ***.crt ***.key /data/cert

Import the Trivy offline database:

# ls /data/trivy-adapter/trivy/*

/data/trivy-adapter/trivy/metadata.json /data/trivy-adapter/trivy/trivy.db

/data/trivy-adapter/trivy/db:

metadata.json trivy.db

# chmod a+w -R /data/trivy-adapter/trivy/db

Install via:

# ./install.sh --with-trivy --with-notary --with-chartmuseum

# docker ps

afe49ec2a626 goharbor/harbor-jobservice:v2.0.0 "/harbor/entrypoint.…" 14 minutes ago Up 14 minutes (healthy) harbor-jobservice

7ecf87cdc70b goharbor/nginx-photon:v2.0.0 "nginx -g 'daemon of…" 14 minutes ago Up 14 minutes (healthy) 0.0.0.0:4443->4443/tcp, 0.0.0.0:80->8080/tcp, 0.0.0.0:5000->8443/tcp nginx

41fee7abd2a1 goharbor/notary-server-photon:v2.0.0 "/bin/sh -c 'migrate…" 14 minutes ago Up 14 minutes notary-server

0f635fccd2fe goharbor/notary-signer-photon:v2.0.0 "/bin/sh -c 'migrate…" 14 minutes ago Up 14 minutes notary-signer

ebcd78417fdf goharbor/harbor-core:v2.0.0 "/harbor/entrypoint.…" 14 minutes ago Up 14 minutes (healthy) harbor-core

28cca5aa3325 goharbor/trivy-adapter-photon:v2.0.0 "/home/scanner/entry…" 14 minutes ago Up 14 minutes (healthy) 8080/tcp trivy-adapter

7823c38b71e9 goharbor/registry-photon:v2.0.0 "/home/harbor/entryp…" 14 minutes ago Up 14 minutes (healthy) 5000/tcp registry

38b6bc813268 goharbor/harbor-portal:v2.0.0 "nginx -g 'daemon of…" 14 minutes ago Up 14 minutes (healthy) 8080/tcp harbor-portal

ba6c5f9473b9 goharbor/redis-photon:v2.0.0 "redis-server /etc/r…" 14 minutes ago Up 14 minutes (healthy) 6379/tcp redis

8ce7deffd0c8 goharbor/harbor-registryctl:v2.0.0 "/home/harbor/start.…" 14 minutes ago Up 14 minutes (healthy) registryctl

bd3085fb3b97 goharbor/chartmuseum-photon:v2.0.0 "./docker-entrypoint…" 14 minutes ago Up 14 minutes (healthy) 9999/tcp chartmuseum

d3e90aa5d4d8 goharbor/harbor-db:v2.0.0 "/docker-entrypoint.…" 14 minutes ago Up 14 minutes (healthy) 5432/tcp harbor-db

1b989340ff76 goharbor/harbor-log:v2.0.0 "/bin/sh -c /usr/loc…" 14 minutes ago Up 14 minutes (healthy) 127.0.0.1:1514->10514/tcp harbor-log

On every node running Docker (Ubuntu), trust the registry certificate, restart Docker, and log in:

# cp gwougou.crt /usr/local/share/ca-certificates

# update-ca-certificates

# systemctl restart docker

# docker login -uadmin -pHarbor12345 xagowu.gowugoe.com