TipsOnSJ

Dec 12, 2018

Technology

Steps

Rook:

# docker save rook/ceph:master > ceph.tar; xz ceph.tar

# docker load < ceph.tar.xz

# docker tag rook/ceph:master docker.registry/library/rook/ceph:master
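The save, load, and tag steps above can be wrapped in a small helper when several images have to be moved to an offline node. This is only a sketch: the function names and the `DOCKER` and `REGISTRY` variables are hypothetical, the registry prefix is taken from the tag command above, and the final `docker push` is an assumed next step that the notes do not show.

```shell
#!/bin/sh
# DOCKER and REGISTRY are hypothetical knobs, not part of the original notes.
DOCKER="${DOCKER:-docker}"
REGISTRY="${REGISTRY:-docker.registry/library}"

# Print the private-registry name for an image such as rook/ceph:master.
registry_tag() {
    printf '%s/%s\n' "$REGISTRY" "$1"
}

# On the online machine: export the image and compress the archive.
# usage: export_image rook/ceph:master ceph.tar   (produces ceph.tar.xz)
export_image() {
    "$DOCKER" save -o "$2" "$1" && xz "$2"
}

# On the offline node: load the (possibly xz-compressed) archive, retag it
# for the private registry, and push it (the push is an assumption).
# usage: import_image rook/ceph:master ceph.tar.xz
import_image() {
    "$DOCKER" load -i "$2" &&
    "$DOCKER" tag "$1" "$(registry_tag "$1")" &&
    "$DOCKER" push "$(registry_tag "$1")"
}
```

For example, `export_image rook/ceph:master ceph.tar` on the machine with internet access, then `import_image rook/ceph:master ceph.tar.xz` on the target node.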

# kubectl -n rook-ceph-system get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

rook-ceph-agent-lf7zm 1/1 Running 0 11s 192.192.189.124 allinone <none>

rook-ceph-operator-d88b68dd9-rfqws 1/1 Running 0 19m 10.233.81.146 allinone <none>

rook-discover-rtghr 1/1 Running 0 11s 10.233.81.149 allinone <none>

Label the node:

# kubectl label nodes allinone ceph-mon=enabled

# kubectl label nodes allinone ceph-osd=enabled

# kubectl label nodes allinone ceph-mgr=enabled
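The three label commands differ only in the role name, so they can be looped. A minimal sketch, assuming the same `ceph-<role>=enabled` labels as above; the function name and the `KUBECTL` variable are hypothetical, and `--overwrite` is added here only so the helper can be re-run without failing on an already-labelled node.

```shell
#!/bin/sh
# KUBECTL is a hypothetical override so the helper can be pointed at a stub.
KUBECTL="${KUBECTL:-kubectl}"

# Apply the ceph-mon, ceph-osd and ceph-mgr labels to one node.
# usage: label_ceph_node allinone
label_ceph_node() {
    for role in mon osd mgr; do
        "$KUBECTL" label nodes "$1" "ceph-$role=enabled" --overwrite || return 1
    done
}
```

Running `label_ceph_node allinone` should be equivalent to the three commands above.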

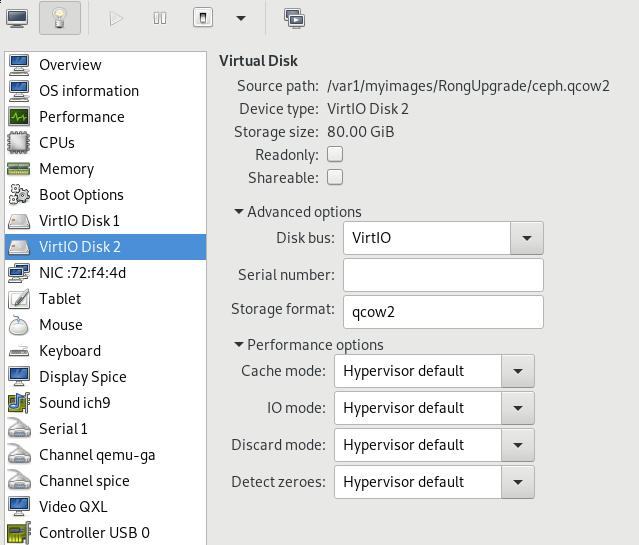

Add one disk:

Examine via:

# fdisk -l /dev/vdb

Disk /dev/vdb: 85.9 GB, 85899345920 bytes, 167772160 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Modify the cluster.yaml file:

    config:
      # The default and recommended storeType is dynamically set to bluestore for devices and filestore for directories.
      # Set the storeType explicitly only if it is required not to use the default.
      # storeType: bluestore
      databaseSizeMB: "1024" # this value can be removed for environments with normal sized disks (100 GB or larger)
      journalSizeMB: "1024" # this value can be removed for environments with normal sized disks (20 GB or larger)
    nodes:
    - name: "allinone"
      devices: # specific devices to use for storage can be specified for each node
      - name: "vdb"

# kubectl apply -f cluster.yaml

Modify to the newest version, because the master is older than the images we pulled.

This depends on the ceph image:

# sudo docker pull ceph/ceph:v13

# kubectl -n rook-ceph get pod -o wide -w

# kubectl -n rook-ceph get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

rook-ceph-mgr-a-588c74548f-wb4db 1/1 Running 0 74s 10.233.81.156 allinone <none>

rook-ceph-mon-a-6cf75949cd-vqbfb 1/1 Running 0 89s 10.233.81.155 allinone <none>

rook-ceph-osd-0-88d6dd79d-r9cxc 1/1 Running 0 49s 10.233.81.158 allinone <none>

rook-ceph-osd-prepare-allinone-zgcjz 0/2 Completed 0 59s 10.233.81.157 allinone <none>
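Rather than watching the pod list by eye, the table can be filtered for pods that have not yet settled. A minimal sketch, assuming the default `kubectl get pod` column layout shown above (STATUS in the third column); the function name is hypothetical.

```shell
#!/bin/sh
# Reads `kubectl get pod` table output on stdin and prints the names of pods
# whose STATUS is neither Running nor Completed. Skips the header and blank lines.
not_ready_pods() {
    awk 'NR > 1 && NF && $3 != "Running" && $3 != "Completed" { print $1 }'
}
```

Usage: `kubectl -n rook-ceph get pod | not_ready_pods`. Empty output suggests that the mon, mgr, and osd pods have all come up and the osd-prepare job has finished.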

# lsblk

# lsblk |grep vdb

vdb 252:16 0 80G 0 disk

Expose the dashboard and get the password:

# kubectl edit svc rook-ceph-mgr-dashboard -n rook-ceph

type: NodePort

service/rook-ceph-mgr-dashboard edited
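The interactive `kubectl edit` can also be done non-interactively, which is easier to script. A sketch under the assumption that the service name and namespace are the ones used above; the function names and the `KUBECTL` variable are hypothetical, and the jsonpath assumes the dashboard is the first port on the service.

```shell
#!/bin/sh
# KUBECTL is a hypothetical override so the helpers can be pointed at a stub.
KUBECTL="${KUBECTL:-kubectl}"

# Switch the dashboard service to type NodePort without opening an editor.
expose_dashboard() {
    "$KUBECTL" patch svc rook-ceph-mgr-dashboard -n rook-ceph \
        -p '{"spec":{"type":"NodePort"}}'
}

# Print the node port that was allocated for the dashboard.
dashboard_nodeport() {
    "$KUBECTL" get svc rook-ceph-mgr-dashboard -n rook-ceph \
        -o jsonpath='{.spec.ports[0].nodePort}'
}
```

After `expose_dashboard`, the dashboard should be reachable on any node IP at the port printed by `dashboard_nodeport`.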

# MGR_POD=$(kubectl get pod -n rook-ceph | grep mgr | awk '{print $1}')

# kubectl -n rook-ceph logs $MGR_POD | grep password

2018-12-12 08:10:16.478 7f7062038700 0 log_channel(audit) log [DBG] : from='client.4114 10.233.81.152:0/963276398' entity='client.admin' cmd=[{"username": "admin", "prefix": "dashboard set-login-credentials", "password": "8XOWZALcFO", "target": ["mgr", ""], "format": "json"}]: dispatch
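The password is buried in a long audit line, so it can help to extract just the value. A minimal sketch, assuming the log format shown above (a JSON-like `"password": "..."` field on the `dashboard set-login-credentials` line); the function name is hypothetical.

```shell
#!/bin/sh
# Reads mgr log lines on stdin and prints the password from the most recent
# "dashboard set-login-credentials" audit entry.
dashboard_password() {
    grep 'dashboard set-login-credentials' |
        sed -n 's/.*"password": "\([^"]*\)".*/\1/p' |
        tail -n 1
}
```

Usage: `kubectl -n rook-ceph logs "$MGR_POD" | dashboard_password`. The login user is `admin`, as shown in the same log line.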

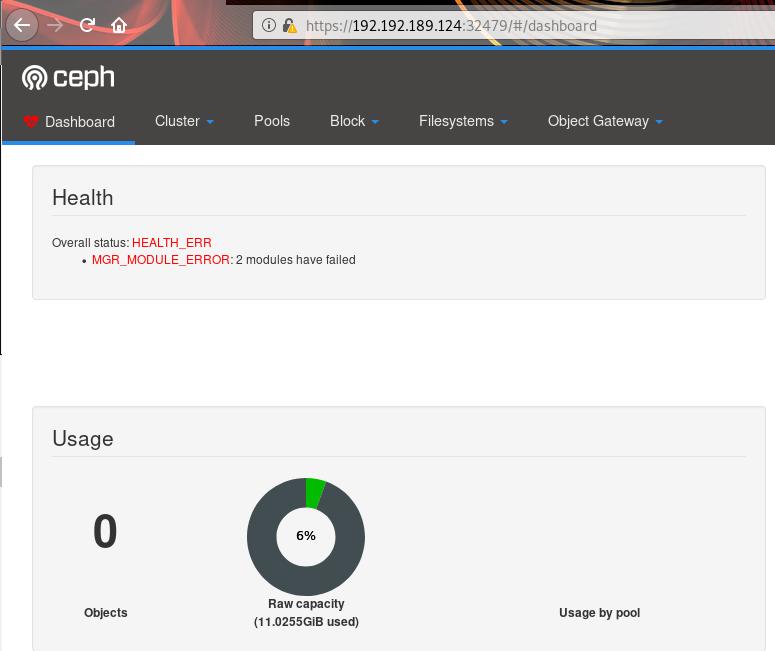

View dashboard via:

Create the pool and storage class:

# kubectl apply -f pool.yaml

cephblockpool.ceph.rook.io/replicapool created

# kubectl apply -f storageclass.yaml

cephblockpool.ceph.rook.io/replicapool configured

storageclass.storage.k8s.io/rook-ceph-block created

# kubectl get sc

NAME PROVISIONER AGE

rook-ceph-block ceph.rook.io/block 3s
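One way to check that the new storage class actually provisions volumes is to create a small PersistentVolumeClaim against it. This is a sketch only: the claim name and size are hypothetical, the function name is made up for illustration, and the only value taken from the notes is the `rook-ceph-block` storage class name.

```shell
#!/bin/sh
# Print a PersistentVolumeClaim manifest that requests a volume from the
# rook-ceph-block storage class.
# usage: pvc_manifest NAME SIZE   (e.g. pvc_manifest test-pvc 1Gi)
pvc_manifest() {
    cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
spec:
  storageClassName: rook-ceph-block
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: $2
EOF
}
```

Usage: `pvc_manifest test-pvc 1Gi | kubectl apply -f -`, then `kubectl get pvc test-pvc`. If provisioning works, the claim should reach the `Bound` status.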

The remaining changes are omitted here.

ToDo

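- The busybox image needs to be uploaded to the central server.

- Every node server needs to load the busybox image.

- Ansible needs to be integrated to simplify installation.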